Artificial Intelligence (AI) has revolutionized how we interact with technology, leading to the rise of virtual assistants, chatbots, and other automated systems capable of handling complex tasks. Despite this progress, even the most advanced AI systems encounter significant limitations known as knowledge gaps. For instance, when…

Combining next-token prediction and video diffusion in computer vision and robotics

In the current AI zeitgeist, sequence models have skyrocketed in popularity for their ability to analyze data and predict what to do next. For instance, you’ve likely used next-token prediction models like ChatGPT, which anticipate each word (token) in a sequence to form answers to users’ queries. There are also full-sequence diffusion models like Sora, which convert words into dazzling, realistic visuals by successively “denoising” an entire video sequence.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed a simple change to the diffusion training scheme that makes this sequence denoising considerably more flexible.

When applied to fields like computer vision and robotics, next-token and full-sequence diffusion models have capability trade-offs. Next-token models can produce sequences that vary in length. However, they generate without awareness of desirable states in the far future (for example, they cannot steer generation toward a goal 10 tokens away) and thus require additional mechanisms for long-horizon (long-term) planning. Diffusion models can perform such future-conditioned sampling, but lack the ability of next-token models to generate variable-length sequences.

Researchers from CSAIL want to combine the strengths of both models, so they created a sequence model training technique called “Diffusion Forcing.” The name comes from “Teacher Forcing,” the conventional training scheme that breaks down full sequence generation into the smaller, easier steps of next-token generation (much like a good teacher simplifying a complex concept).


Diffusion Forcing finds common ground between diffusion models and teacher forcing: both use training schemes that involve predicting masked (noisy) tokens from unmasked ones. Diffusion models gradually add noise to data, which can be viewed as fractional masking. The MIT researchers’ Diffusion Forcing method trains neural networks to cleanse a collection of tokens, removing different amounts of noise within each one while simultaneously predicting the next few tokens. The result: a flexible, reliable sequence model that produced higher-quality artificial videos and more precise decision-making for robots and AI agents.
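To make the idea of "different amounts of noise within each token" concrete, here is a minimal Python sketch of the noising step only. It is an illustration, not the researchers' code: the cosine schedule in `alpha_bar`, the scalar tokens, and the function names are all assumptions chosen for brevity.

```python
import math
import random

def alpha_bar(level, num_levels):
    """Fraction of signal kept at a noise level (assumed cosine schedule)."""
    return math.cos(0.5 * math.pi * level / num_levels) ** 2

def noise_tokens(tokens, num_levels, rng):
    """Give every token its own independently sampled noise level.

    Returns (noisy_tokens, levels, noises). A denoiser would be trained to
    recover the noise (or the clean token) from noisy_tokens and levels.
    """
    noisy, levels, noises = [], [], []
    for x in tokens:
        k = rng.randint(0, num_levels)   # independent level per token (hypothetical choice)
        eps = rng.gauss(0.0, 1.0)        # standard Gaussian noise
        a = alpha_bar(k, num_levels)
        noisy.append(math.sqrt(a) * x + math.sqrt(1.0 - a) * eps)
        levels.append(k)
        noises.append(eps)
    return noisy, levels, noises

rng = random.Random(0)
clean = [0.1, 0.4, -0.3, 0.8, 0.5]       # toy scalar "tokens"
noisy, levels, noises = noise_tokens(clean, num_levels=10, rng=rng)
for x, k, y in zip(clean, levels, noisy):
    print(f"clean={x:+.2f} level={k:2d} noisy={y:+.2f}")
```

A token at level 0 is left untouched (fully unmasked), while a token at the highest level is almost pure noise (fully masked). Everything in between is the fractional masking the article describes.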

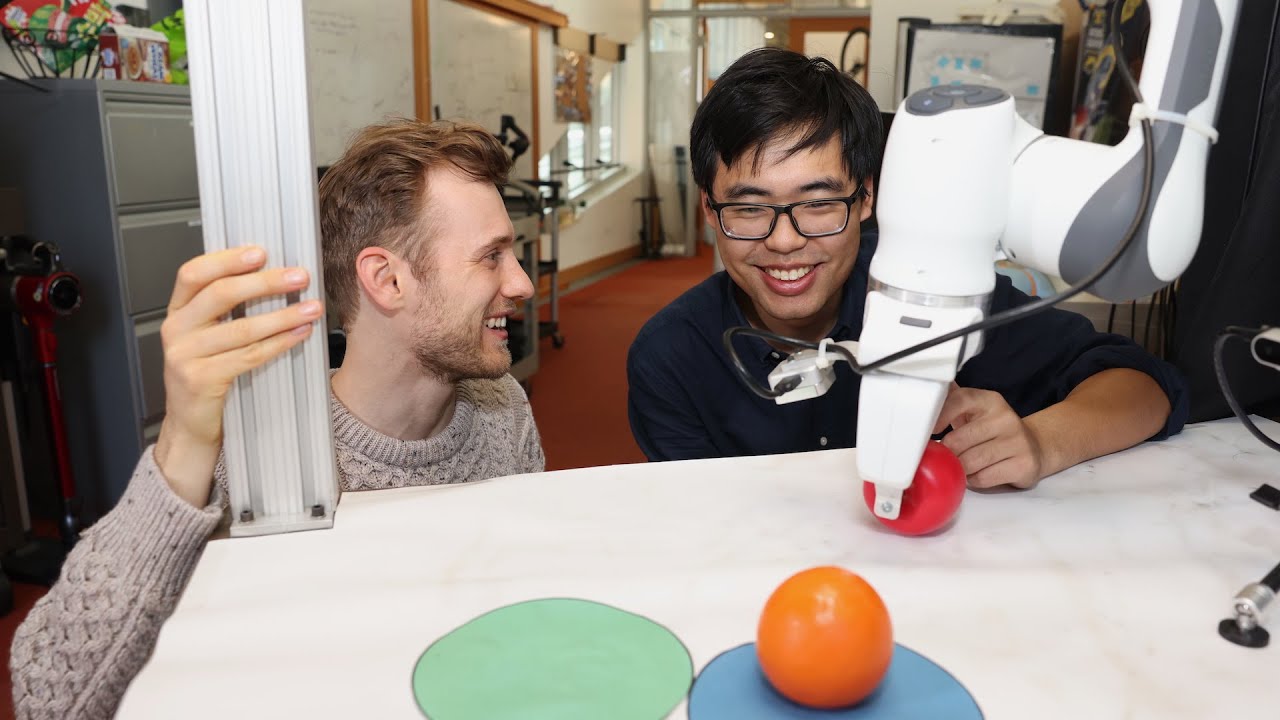

By sorting through noisy data and reliably predicting the next steps in a task, Diffusion Forcing can aid a robot in ignoring visual distractions to complete manipulation tasks. It can also generate stable and consistent video sequences and even guide an AI agent through digital mazes. This method could potentially enable household and factory robots to generalize to new tasks and improve AI-generated entertainment.

“Sequence models aim to condition on the known past and predict the unknown future, a type of binary masking. However, masking doesn’t need to be binary,” says lead author, MIT electrical engineering and computer science (EECS) PhD student, and CSAIL member Boyuan Chen. “With Diffusion Forcing, we add different levels of noise to each token, effectively serving as a type of fractional masking. At test time, our system can ‘unmask’ a collection of tokens and diffuse a sequence in the near future at a lower noise level. It knows what to trust within its data to overcome out-of-distribution inputs.”
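One way to read Chen's point is that binary masking and full-sequence diffusion are both special cases of assigning a noise level to every token. The sketch below expresses the three regimes as lists of per-token noise levels. The function names and the linear growth rule in `fractional_levels` are illustrative assumptions, not details from the paper.

```python
def next_token_levels(seq_len, num_known, max_level):
    """Binary masking: the known past is clean, everything else is pure noise."""
    return [0 if t < num_known else max_level for t in range(seq_len)]

def full_sequence_levels(seq_len, level):
    """Full-sequence diffusion: every token shares one noise level."""
    return [level] * seq_len

def fractional_levels(seq_len, num_known, max_level):
    """Fractional masking: each future token gets its own level.

    Assumed rule for illustration: noise rises linearly (rounded up)
    with distance into the future.
    """
    future_len = seq_len - num_known
    levels = []
    for t in range(seq_len):
        if t < num_known:
            levels.append(0)
        else:
            steps_ahead = t - num_known + 1
            # integer ceiling of max_level * steps_ahead / future_len
            levels.append((max_level * steps_ahead + future_len - 1) // future_len)
    return levels

print(next_token_levels(6, 2, 10))     # [0, 0, 10, 10, 10, 10]
print(full_sequence_levels(6, 7))      # [7, 7, 7, 7, 7, 7]
print(fractional_levels(6, 2, 10))     # [0, 0, 3, 5, 8, 10]
```

In this framing, "unmasking" a token means setting its level to 0, and a near-future token held at a low level is partly trusted rather than fully known or fully unknown.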

In several experiments, Diffusion Forcing thrived at ignoring misleading data to execute tasks while anticipating future actions.

When implemented in a robotic arm, for example, it helped swap two toy fruits across three circular mats, a minimal example of a family of long-horizon tasks that require memories. The researchers trained the robot by controlling it from a distance (or teleoperating it) in virtual reality. The robot is trained to mimic the user’s movements from its camera. Despite starting from random positions and seeing distractions like a shopping bag blocking the markers, it placed the objects into their target spots.

To generate videos, they trained Diffusion Forcing on “Minecraft” gameplay and colorful digital environments created within Google’s DeepMind Lab Simulator. When given a single frame of footage, the method produced more stable, higher-resolution videos than comparable baselines like a Sora-like full-sequence diffusion model and ChatGPT-like next-token models. These approaches created videos that appeared inconsistent, with the latter sometimes failing to generate working video past just 72 frames.

Diffusion Forcing not only generates fancy videos, but can also serve as a motion planner that steers toward desired outcomes or rewards. Thanks to its flexibility, Diffusion Forcing can uniquely generate plans with varying horizons, perform tree search, and incorporate the intuition that the distant future is more uncertain than the near future. In the task of solving a 2D maze, Diffusion Forcing outperformed six baselines by generating faster plans leading to the goal location, indicating that it could be an effective planner for robots in the future.
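The intuition that the distant future is more uncertain than the near future can be written down as a sampling schedule in which later tokens start denoising later. The following Python sketch builds such a staggered schedule. The function name and the exact stagger of one iteration per token are assumptions for illustration and may differ from what the researchers use.

```python
def pyramid_schedule(horizon, max_level):
    """Rows are denoising iterations, columns are future tokens.

    Token t starts at max_level and begins denoising t iterations after
    token 0, so within every row later tokens are at least as noisy.
    """
    num_iters = max_level + horizon          # last row is all zeros
    schedule = []
    for m in range(num_iters):
        row = [min(max_level, max(0, max_level + t - m)) for t in range(horizon)]
        schedule.append(row)
    return schedule

for row in pyramid_schedule(horizon=4, max_level=3):
    print(row)
```

With `horizon=4` and `max_level=3`, the first row is `[3, 3, 3, 3]` (pure noise everywhere), the middle row `[0, 1, 2, 3]` has a clean first token and a still-noisy last token, and the final row is `[0, 0, 0, 0]`. A planner following this schedule commits to near-term steps while leaving far-term steps open to revision.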

Across each demo, Diffusion Forcing acted as a full sequence model, a next-token prediction model, or both. According to Chen, this versatile approach could potentially serve as a powerful backbone for a “world model,” an AI system that can simulate the dynamics of the world by training on billions of internet videos. This would allow robots to perform novel tasks by imagining what they need to do based on their surroundings. For example, if you asked a robot to open a door without being trained on how to do it, the model could produce a video that’ll show the machine how to do it.

The team is currently looking to scale up their method to larger datasets and the latest transformer models to improve performance. They intend to broaden their work to build a ChatGPT-like robot brain that helps robots perform tasks in new environments without human demonstration.

“With Diffusion Forcing, we are taking a step to bringing video generation and robotics closer together,” says senior author Vincent Sitzmann, MIT assistant professor and member of CSAIL, where he leads the Scene Representation group. “In the end, we hope that we can use all the knowledge stored in videos on the internet to enable robots to help in everyday life. Many more exciting research challenges remain, like how robots can learn to imitate humans by watching them even when their own bodies are so different from our own!”

Chen and Sitzmann wrote the paper alongside recent MIT visiting researcher Diego Martí Monsó, and CSAIL affiliates: Yilun Du, an EECS graduate student; Max Simchowitz, former postdoc and incoming Carnegie Mellon University assistant professor; and Russ Tedrake, the Toyota Professor of EECS, Aeronautics and Astronautics, and Mechanical Engineering at MIT, vice president of robotics research at the Toyota Research Institute, and CSAIL member. Their work was supported, in part, by the U.S. National Science Foundation, the Singapore Defence Science and Technology Agency, Intelligence Advanced Research Projects Activity via the U.S. Department of the Interior, and the Amazon Science Hub. They will present their research at NeurIPS in December.

Your guide to LLMOps

Understanding the varied landscape of LLMOps is essential for harnessing the full potential of large language models in today’s digital world. …

Leveraging Human Attention Can Improve AI-Generated Images

New research from China has proposed a method for improving the quality of images generated by Latent Diffusion Models (LDMs) such as Stable Diffusion. The method focuses on optimizing the salient regions of an image – areas most likely to attract human attention. Traditional methods,…

Scoring AI models: Endor Labs unveils evaluation tool

Endor Labs has begun scoring AI models based on their security, popularity, quality, and activity. Dubbed ‘Endor Scores for AI Models,’ this unique capability aims to simplify the process of identifying the most secure open-source AI models currently available on Hugging Face – a platform for…

Atomos Shogun Ultra for Worship Video – Videoguys

In this article from Atomos, Austin Allen, the lead filmmaker at Times Square Church in New York City, shares how the Atomos Shogun Ultra monitor has transformed the church’s video production workflow. Located in the heart of Manhattan, Times Square Church serves over 50,000 attendees annually, and is known for pushing the boundaries of filmmaking and video ministry. Allen, a long-time user of Atomos products, highlights the Shogun Ultra’s ease of use and versatility, making it a perfect solution for churches and organizations looking to improve their video production processes. Whether used for live broadcasts, studio shoots, or smaller projects, Allen emphasizes how the Shogun Ultra has made a significant difference in their workflow, especially when working with diverse production needs.

One of the standout features Allen discusses is the Camera to Cloud functionality integrated into the Shogun Ultra. This feature enables filmmakers to upload high-quality H.265 files directly to the cloud while shooting, which is particularly beneficial for remote editing and post-production workflows. For churches, this offers a tremendous advantage, as editors or social media teams working off-site can access footage immediately without the need for manual file transfers. Allen explains how this feature streamlines video production, making it faster and more efficient, especially for time-sensitive content like church services, conferences, or social media updates. This cloud-based workflow also adds redundancy, ensuring that teams always have a backup while allowing them to work on footage in real time.

Another key feature Allen praises is the Shogun Ultra’s ability to record in 6K ProRes RAW. This capability provides high-quality recording even when using smaller camera setups, which is ideal for churches or filmmakers who want top-tier production quality without needing large-scale equipment. Allen explains how the Shogun Ultra allows users to unlock the full potential of their cameras, whether they are using a RED, ARRI, or a smaller DSLR like the Canon R5. Additionally, the ability to record in ProRes RAW is crucial for color matching across multiple cameras, a common challenge in productions that involve different types of cameras. With the Shogun Ultra, churches and filmmakers can ensure consistent image quality and color matching, even when using a mix of larger and smaller cameras in the same project.

Allen highlights the Shogun Ultra as an essential tool for his team at Times Square Church. Its user-friendly design and advanced features, such as ProRes RAW recording and Camera to Cloud integration, make it a perfect fit for churches and organizations with high production needs but limited resources. By offering flexible recording options and seamless cloud integration, the Shogun Ultra helps simplify complex production tasks and accelerates post-production timelines. Whether it’s used for live church broadcasts, conferences, or social media content creation, Allen emphasizes how the Shogun Ultra has proven itself as an invaluable asset in their workflow, allowing them to push the boundaries of their video production efforts.

In conclusion, this article showcases how the Atomos Shogun Ultra monitor has revolutionized the video production workflow at Times Square Church. Its versatility across different camera setups, from high-end cinema cameras to smaller DSLRs, and its advanced features like Camera to Cloud and 6K ProRes RAW recording make it an indispensable tool for filmmakers and video teams. By simplifying video production processes and offering high-quality output, the Shogun Ultra is an excellent choice for churches, ministries, and organizations looking to elevate their video content creation.



Beyond Chain-of-Thought: How Thought Preference Optimization is Advancing LLMs

A groundbreaking new technique, developed by a team of researchers from Meta, UC Berkeley, and NYU, promises to enhance how AI systems approach general tasks. Known as “Thought Preference Optimization” (TPO), this method aims to make large language models (LLMs) more thoughtful and deliberate in their…

An exotic materials researcher with the soul of an explorer

Riccardo Comin says the best part of his job as a physics professor and exotic materials researcher is when his students come into his office to tell him they have new, interesting data.

“It’s that moment of discovery, that moment of awe, of revelation of something that’s outside of anything you know,” says Comin, the Class of 1947 Career Development Associate Professor of Physics. “That’s what makes it all worthwhile.”

Intriguing data energizes Comin because it can potentially grant access to an unexplored world. His team has discovered materials with quantum and other exotic properties, which could find a range of applications, such as handling the world’s exploding quantities of data, more precise medical imaging, and vastly increased energy efficiency — to name just a few. For Comin, who has always been somewhat of an explorer, new discoveries satisfy a kind of intellectual wanderlust.

As a small child growing up in the city of Udine in northeast Italy, Comin loved geography and maps, even drawing his own of imaginary cities and countries. He traveled literally, too, touring Europe with his parents; his father was offered free train travel as a project manager on large projects for Italian railroads.

Comin also loved numbers from an early age, and by about eighth grade would go to the public library to delve into math textbooks about calculus and analytical geometry that were far beyond what he was being taught in school. Later, in high school, Comin enjoyed being challenged by a math and physics teacher who in class would ask him questions about extremely advanced concepts.

“My classmates were looking at me like I was an alien, but I had a lot of fun,” Comin says.

Unafraid to venture alone into more rarefied areas of study, Comin nonetheless sought community, and appreciated the rapport he had with his teacher.

“He gave me the kind of interaction I was looking for, because otherwise it would have been just me and my books,” Comin says. “He helped transform an isolated activity into a social one. He made me feel like I had a buddy.”

By the end of his undergraduate studies at the University of Trieste, Comin says he decided on experimental physics, to have “the opportunity to explore and observe physical phenomena.”

He visited a nearby research facility that houses the Elettra Synchrotron to look for a research position where he could work on his undergraduate thesis, and became interested in all of the materials science research being conducted there. Drawn to community as well as the research, he chose a group that was investigating how the atoms and molecules in a liquid can rearrange themselves to become a glass.

“This one group struck me. They seemed to really enjoy what they were doing, and they had fun outside of work and enjoyed the outdoors,” Comin says. “They seemed to be a nice group of people to be part of. I think I cared more about the social environment than the specific research topic.”

By the time Comin was finishing his master’s, also in Trieste, and wanted to get a PhD, his focus had turned to electrons inside a solid rather than the behavior of atoms and molecules. Having traveled “literally almost everywhere in Europe,” Comin says he wanted to experience a different research environment outside of Europe.

He told his academic advisor he wanted to go to North America and was connected with Andrea Damascelli, the Canada Research Chair in Electronic Structure of Quantum Materials at the University of British Columbia, who was working on high-temperature superconductors. Comin says he was fascinated by the behavior of the electrons in the materials Damascelli and his group were studying.

“It’s almost like a quantum choreography, particles that dance together” rather than moving in many different directions, Comin says.

Comin’s subsequent postdoctoral work at the University of Toronto, focusing on optoelectronic materials — which can interact with photons and electrical energy — ignited his passion for connecting a material’s properties to its functionality and bridging the gap between fundamental physics and real-world applications.

Since coming to MIT in 2016, Comin has continued to delight in the behavior of electrons. He and Joe Checkelsky, associate professor of physics, had a breakthrough with a new class of materials in which electrons, very atypically, are nearly stationary.

Such materials could be used to explore zero energy loss, such as from power lines, and new approaches to quantum computing.

“It’s a very peculiar state of matter,” says Comin. “Normally, electrons are just zapping around. If you put an electron in a crystalline environment, what that electron will want to do is hop around, explore its neighbors, and basically be everywhere at the same time.”

The more sedentary electrons occurred in materials where a structure of interlaced triangles and hexagons tended to trap the electrons on the hexagons and, because the electrons all have the same energy, they create what’s called an electronic flat band, referring to the pattern that is created when they are measured. Their existence was predicted theoretically, but they had not been observed.
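A lattice of interlaced triangles and hexagons is commonly called a kagome lattice. Assuming that identification, which the article does not state, a textbook nearest-neighbor hopping model shows where a flat band comes from: one allowed energy stays the same at every electron momentum. The Python sketch below checks this numerically. The hopping strength `t`, the lattice vectors, and all function names are illustrative assumptions, not a model of the specific materials Comin's group studied.

```python
import math
import random

def kagome_hamiltonian(kx, ky, t=1.0):
    """3x3 Bloch Hamiltonian for nearest-neighbor hopping (amplitude -t)."""
    c1 = math.cos(0.5 * kx)                                   # along a1 = (1, 0)
    c2 = math.cos(0.25 * kx + 0.25 * math.sqrt(3.0) * ky)     # along a2 = (1/2, sqrt(3)/2)
    c3 = math.cos(-0.25 * kx + 0.25 * math.sqrt(3.0) * ky)    # along a2 - a1
    return [[0.0, -2 * t * c1, -2 * t * c2],
            [-2 * t * c1, 0.0, -2 * t * c3],
            [-2 * t * c2, -2 * t * c3, 0.0]]

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def char_poly(h, energy):
    """det(H - E*I); zero exactly when E is an allowed energy at this momentum."""
    shifted = [[h[i][j] - (energy if i == j else 0.0) for j in range(3)]
               for i in range(3)]
    return det3(shifted)

rng = random.Random(0)
worst = 0.0
for _ in range(1000):
    kx = rng.uniform(-2 * math.pi, 2 * math.pi)
    ky = rng.uniform(-2 * math.pi, 2 * math.pi)
    worst = max(worst, abs(char_poly(kagome_hamiltonian(kx, ky), 2.0)))

# E = 2t is an eigenvalue at every sampled momentum: a flat band.
print(f"largest |det(H - 2t I)| over sampled momenta: {worst:.2e}")
```

The printed value is zero up to floating-point rounding, meaning the energy 2t is allowed at every sampled momentum. By contrast, an energy such as -4t is allowed only at zero momentum in this model, which is the ordinary, dispersive behavior of electrons that "hop around."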

Comin says he and his colleagues made educated guesses on where to find flat bands, but they were elusive. After three years of research, however, they had a breakthrough.

“We put a sample material in an experimental chamber, we aligned the sample to do the experiment and started the measurement and, literally, five to 10 minutes later, we saw this beautiful flat band on the screen,” Comin says. “It was so clear, like this thing was basically screaming, ‘How could you not find me before?’

“That started off a whole area of research that is growing and growing — and a new direction in our field.”

Comin’s later research into certain two-dimensional materials, which are a single atom thick and have an internal structural feature called chirality (a right- or left-handedness, similar to how a spiral twists in one direction or the other), has yielded another new realm to explore.

By controlling the chirality, “there are interesting prospects of realizing a whole new class of devices” that could store information in a way that’s more robust and much more energy-efficient than current methods, says Comin, who is affiliated with MIT’s Materials Research Laboratory. Such devices would be especially valuable as the volume of available data and the use of technologies like artificial intelligence grow exponentially.

While investigating these previously unknown properties of certain materials, Comin is characteristically adventurous in his pursuit.

“I embrace the randomness that nature throws at you,” he says. “It appears random, but there could be something behind it, so we try variations, switch things around, see what nature serves you. Much of what we discover is due to luck — and the rest boils down to a mix of knowledge and intuition to recognize when we’re seeing something new, something that’s worth exploring.”

Equipping doctors with AI co-pilots

Most doctors go into medicine because they want to help patients. But today’s health care system requires that doctors spend hours each day on other work — searching through electronic health records (EHRs), writing documentation, coding and billing, prior authorization, and utilization management — often surpassing the time they spend caring for patients. The situation leads to physician burnout, administrative inefficiencies, and worse overall care for patients.

Ambience Healthcare is working to change that with an AI-powered platform that automates routine tasks for clinicians before, during, and after patient visits.

“We build co-pilots to give clinicians AI superpowers,” says Ambience CEO Mike Ng MBA ’16, who co-founded the company with Nikhil Buduma ’17. “Our platform is embedded directly into EHRs to free up clinicians to focus on what matters most, which is providing the best possible patient care.”

Ambience’s suite of products handles pre-charting and real-time AI scribing, and assists with navigating the thousands of rules to select the right insurance billing codes. The platform can also send after-visit summaries to patients and their families in different languages to keep everyone informed and on the same page.

Ambience is already being used across roughly 40 large institutions such as UCSF Health, the Memorial Hermann Health System, St. Luke’s Health System, John Muir Health, and more. Clinicians leverage Ambience in dozens of languages and more than 100 specialties and subspecialties, in settings like the emergency department, hospital inpatient settings, and the oncology ward.

The founders say clinicians using Ambience save two to three hours per day on documentation, report lower levels of burnout, and develop higher-quality relationships with their patients.

From problem to product to platform

Ng worked in finance until getting an up-close look at the health care system after he fractured his back in 2012. He was initially misdiagnosed and put on the wrong care plan, but he learned a lot about the U.S. health system in the process, including how the majority of clinicians’ days are spent documenting visits, selecting billing codes, and completing other administrative tasks. The average clinician only spends 27 percent of their time on direct patient care.

In 2014, Ng decided to enter the MIT Sloan School of Management. During his first week, he attended the “t=0” celebration of entrepreneurship hosted by the Martin Trust Center for MIT Entrepreneurship, where he met Buduma. The pair became fast friends, and they ended up taking classes together including 15.378 (Building an Entrepreneurial Venture) and 15.392 (Scaling Entrepreneurial Ventures).

“MIT was an incredible training ground to evaluate what makes a great company and learn the foundations of building a successful company,” Ng says.

Buduma had gone through his own journey to discover problems with the health care system. After immigrating to the U.S. from India as a child and battling persistent health issues, he had watched his parents struggle to navigate the U.S. medical system. While completing his bachelor’s degree at MIT, he was also steeped in the AI research community and wrote an early textbook on modern AI and deep learning.

In 2016, Ng and Buduma founded their first company in San Francisco — Remedy Health — which operated its own AI-powered health care platform. In the process of hiring clinicians, taking care of patients, and implementing technology themselves, they developed an even deeper appreciation for the challenges that health care organizations face.

During that time, they also got an inside look at advances in AI. Google’s Chief Scientist Jeff Dean, a major investor in Remedy and now in Ambience, led a research group inside of Google Brain to invent the transformer architecture. Ng and Buduma say they were among the first to put transformers into production to support their own clinicians at Remedy. Subsequently, several of their friends and housemates went on to start the large language model group within OpenAI. Their friends’ work formed the research foundations that ultimately led to ChatGPT.

“It was very clear that we were at this inflection point where we were going to have this class of general-purpose models that were going to get exponentially better,” Buduma says. “But I think we also noticed a big gap between those general-purpose models versus what actually would be robust enough to work in a clinic. Mike and I decided in 2020 that there should be a team that specifically focused on fine-tuning these models for health care and medicine.”

The founders started Ambience by building an AI-powered scribe that works on phones and laptops to record the details of doctor-patient visits in a HIPAA-compliant system that preserves patient privacy. They quickly saw that the models needed to be fine-tuned for each area of medicine, and they slowly expanded specialty coverage one by one in a multiyear process.

The founders also realized their scribes needed to fit within back-office operations like insurance coding and billing.

“Documentation isn’t just for the clinician, it’s also for the revenue cycle team,” Buduma says. “We had to go back and rewrite all of our algorithms to be coding-aware. There are literally tens of thousands of coding rules that change every year and differ by specialty and contract type.”

From there, the founders built out models for clinicians to make referrals and to send comprehensive summaries of visits to patients.

“In most care settings before Ambience, when a patient and their family left the clinic, whatever the patient and their family wrote down was what they remembered from the visit,” Buduma says. “That’s one of the features that physicians love most, because they are trying to create the best experience for patients and their families. By the time that patient is in the parking lot, they already have a really robust, high-quality summary of exactly what you talked about and all the shared decision-making around your visit in their portal.”

Democratizing health care

By improving physician productivity, the founders believe they’re helping the health care system manage a chronic shortage of clinicians that’s expected to grow in coming years.

“In health care, access is still a huge problem,” Ng says. “Rural Americans have a 40 percent higher risk of preventable hospitalization, and half of that is attributed to a lack of access to specialty care.”

With Ambience already helping health systems manage razor-thin margins by streamlining administrative tasks, the founders have a longer-term vision to help increase access to the best clinical information across the country.

“There’s a really exciting opportunity to make expertise at some of the major academic medical centers more democratized across the U.S.,” Ng says. “Right now, there’s just not enough specialists in the U.S. to support our rural populations. We hope to help scale the knowledge of the leading specialists in the country through an AI infrastructure layer, especially as these models become more clinically intelligent.”

miRoncol Unveils Breakthrough Blood Test to Detect 12+ Early-Stage Cancers

In a significant advancement for cancer detection, miRoncol, a medtech startup, has completed proof-of-concept studies for a groundbreaking blood test capable of detecting multiple types of cancer at early stages. This innovative test utilizes cutting-edge technologies, including microRNA (miRNA) research and machine learning. By identifying cancers…