Final Cut Pro is a popular macOS video editing application for creating high-quality video content. One of the many features that make it so popular is its support for pre-designed presets and templates.

As powerful as Final Cut Pro is, there's no denying that video editing can be a long and, at times, tedious process. Still, with the right preset or template, you can significantly cut your editing time while improving the overall quality of your video projects.

Presets and templates can include everything from color grading and visual effects to titles and transitions, making it much easier and quicker to create a polished, professional video.

We’ve rounded up the best free presets and templates for Final Cut Pro that you can download and use in your own projects. Check them out below and add them to your video library today.

You might also like our collection of free Apple Motion templates.

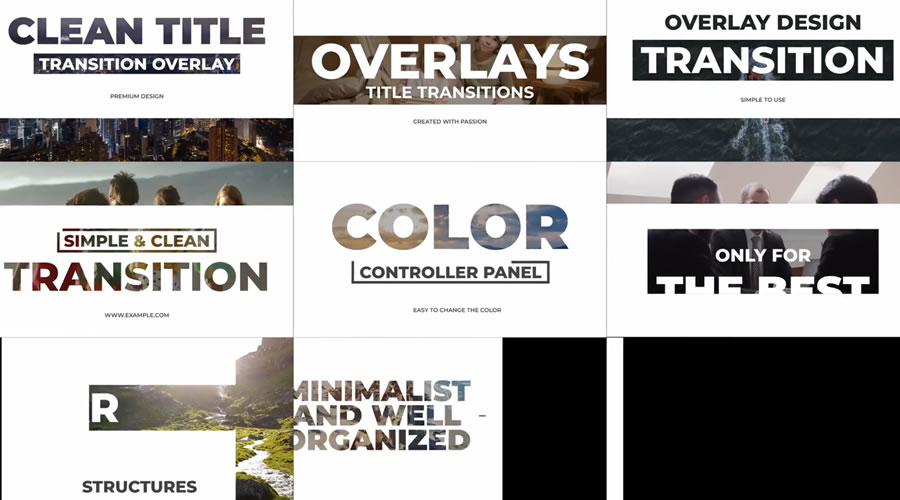

Title Templates for Final Cut Pro

These professionally designed title templates add a polished touch to your videos and help refine the typography of your storytelling.

Transport viewers to a galaxy far away with this intergalactic title sequence. Science fiction fans will appreciate the futuristic fonts and warp-speed animations. Use it to make an immediate impact with your videos.

Give your title a high-tech feel with these glitch-effect animations. Choose from 16 effects ranging from pixelated blurs to characters that break apart. This is a rare animation that feels both retro and futuristic.

Lyric videos are an effective way to share music without the expense of a big-budget production. This template makes it easy to create and customize a stylish feature presentation. Independent artists will want to check this one out.

This template provides four beautifully designed wedding-themed title panels for your Final Cut Pro or Final Cut Pro X videos. They are fully customizable and available in all popular resolutions.

This template saves time and money. It includes 30 different title screens for Final Cut Pro and Apple Motion 5. You can easily customize and use them to produce videos in any resolution.

Thanks to this template, you can tell your viewers that they are watching in high definition. Its titles invite viewers to watch in HD, state that the video is in HD, and even denote 4K resolution.

This template pack includes both titles and lower thirds for Final Cut Pro X. With seven different animated titles to choose from, you'll find the right one for your next video project.

These creative title templates are fully customizable and great for social media campaigns or in-house video use. No plugins are required, and they support resolutions up to 4K.

This template is ideal for any Final Cut Pro X video project. It offers 24 high-quality title cards that are easy to customize and impressive to watch in action.

This free template simplifies adding and customizing text. Quickly add high-quality scrolling text for endings, lower thirds, or block text to your next Final Cut Pro or Adobe Premiere Pro project.

Thanks to these titles, you can easily create lyric-style titles for your videos. They're perfect for music videos or for adding something extra to your logo opener.

Quickly generate titles that resemble your cinematic favorites, such as Star Wars, Harry Potter, or even Assassin’s Creed. This plugin makes it easy to create impactful titles.

Transition Packs for Final Cut Pro

From smooth crossfades to dynamic wipes, these presets will add style to your videos. Improve your editing with these free Final Cut Pro transition presets, and ensure your videos flow seamlessly from scene to scene.

Inspired by the opening credits of a similarly named TV show, Parks & Re-Creation is a fun and quirky transition. Showcase multiple videos at once using this clever montage. It's also easy to customize with multiple drop zones.

Bring a bit of chaos to your video transitions with this shatter effect. The frame breaks apart and quickly falls off the screen. It's an excellent option for action scenes and will keep viewers engaged.

Here’s a collection of fun and modern transitions for Final Cut Pro. It features clean, rounded shapes that can travel in multiple directions. A tutorial is included to help you customize the transition to fit your needs.

These ink transitions add a beautiful touch to any video. The animation is silky smooth and reveals your next scene in style. It’s a professional-grade effect that’s easy to use in your projects.

Get your videos into shape with this set of replicator transitions. Choose from 14 options that feature geometric shapes. They’re pleasing to the eye and appropriate for all types of videos.

Before and after transitions are a handy addition to your video editing toolbox. Use them to show off your products and services. Everything from home renovations to cosmetics can benefit from this set.

With this free resource, you can create your own special transitions. Learn a valuable technique, and then you can add the free transitions to any of your Final Cut Pro video projects.

This transition toolkit gives you all the power to create incredible videos quickly, saving you time and money on your next Final Cut Pro or Final Cut Pro X video.

This resource includes eight well-crafted transitions for Final Cut Pro. Use them to add a touch of panache and pizzazz to your next video project.

This set of 50 fully customizable transitions for Final Cut Pro X will save you time and money. They’re easy to use, ready for all resolutions, and will integrate well with your titles.

Use this transition to easily incorporate a flashback effect, reminiscent of the old Scooby-Doo cartoons. This creative touch adds a unique flair to your Final Cut Pro video projects.

Using a glitch transition in your video editing has never been easier. This pack includes 90 glitch transitions in 4K resolution. The glitches are easy to use and customizable.

Do you need to give your video an analog film feel? This free resource is perfect for you. Recreate the experience of flipping through channels on an old analog television to add a vintage touch to your Final Cut Pro videos.

This free resource includes ten clean, easy-to-use transitions for your Final Cut Pro video projects. The transitions are customizable and available in all major resolutions.

This pack offers transitions that add a colorful and modern look to your Final Cut Pro videos. The transitions are smooth and vibrant, with adjustable durations, and are available in all major resolutions.

Here are 13 different transitions for your Final Cut Pro video projects. Each transition is customizable and suitable for both personal and commercial use.

Animated Elements for Final Cut Pro

Improve your videos with smooth transitions, engaging text animations, and powerful visual effects. These free animated presets will help you create professional-quality videos that leave a great impression.

This preset pack includes 50 color gradients you can use in any Final Cut Pro project. They work well as backgrounds, layouts, film burns, or even light leaks.

This toolkit for Final Cut Pro and Apple Motion will save you time and energy on your next animated project. You can quickly create, customize, and animate characters to create explainer or educational videos.

This kit of 50 animated icons is perfect for any Final Cut Pro project. Each icon is fully customizable and easy to use, ensuring your projects have a high-quality, professional look and feel.

This plugin provides a fully customizable timecode that can be moved beyond the borders of your Final Cut Pro project. It shows everyone involved where the video is on the timeline, whether they’re watching on a second screen or during the editing process.

Save time using these hand-drawn, animated splash graphics in your next project. You can quickly customize their colors, add a glow effect, pixelate them, or even combine the glow and pixelation for your next Final Cut Pro video.

With this plugin, you can quickly create and edit Instagram Story videos. You can change the duration, color, position, fonts, and font sizes. The plugin also includes built-in in and out animations and is available in all popular resolutions.

This plugin allows you to add flavor to your next Final Cut Pro or Final Cut Pro X video. Built to emulate popular social media platforms, it will make your videos come to life with animated text messages, comments, notifications, and more.

Special Effect Templates for Final Cut Pro

Unleash your creativity with these free special effects presets for Final Cut Pro. From exciting particle effects to stunning color grading, these presets will help to transform your footage into something exceptional.

Enhance the action in your videos with a strobe or stutter effect. The package contains four effects that can be dialed to different frame rates. This ensures a smoother result and a better viewing experience.

This effect adds an outline to any video or image with transparency. Customize the look by tweaking the color and thickness of the outline. It’s a fun way to draw attention to objects in your presentation.

This resource offers a powerful way to create pans and zooms in your Final Cut Pro and Adobe Premiere Pro projects. Key features include support for all popular formats and animation syncing.

This resource offers professional-looking sliced transitions, sound effects, and a specialized slice animation for logo reveals.

This plugin adds an overlay to your Final Cut Pro projects and works best for collaborative work on feature-length films. It includes a header, a label, and two different timers for ease of use.

This fantastic free resource is perfect for Star Wars fans. You will have complete control of these transitions. Use the circular or side transition and make your videos one with the force.

This free effect simulates the view through various binoculars and firearm scopes. From close-ups to night vision, this resource will give your Final Cut Pro videos a cinematic touch.

This free plugin redefines fade-in and fade-out transitions. Instead of using bulky blocks, it streamlines and customizes the transition as a simple dropped-in effect.

This free plugin is perfect for collaborative work. It allows you to mark videos with a timestamp and a foot and frame counter. You can customize the frame rates, start and stop frames, and the counter’s color and size.

Logo Reveal Templates for Final Cut Pro

These templates will help show off your logo in a way viewers will remember. Use them in the introduction, ending, or as a transitional piece.

The shape logo effects in this template will dress up your brand. The animations are smart and smooth – and viewers will take notice. The template includes two animation types and three shapes.

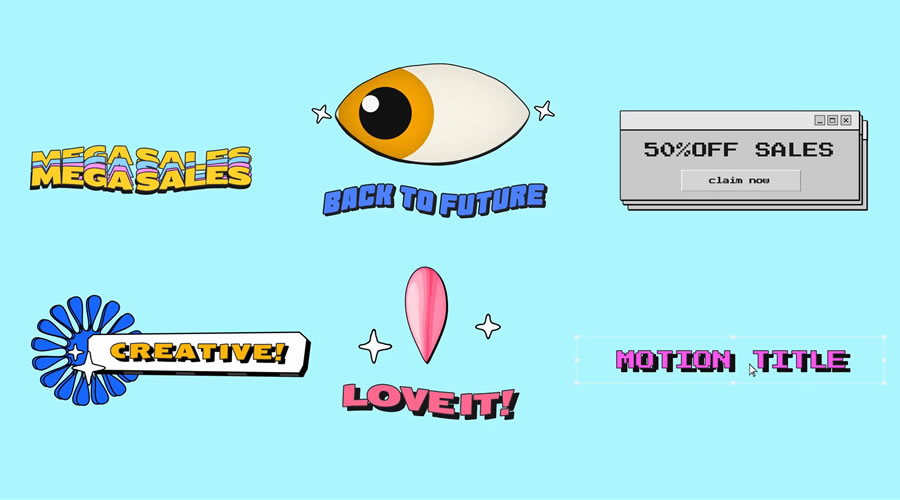

Intro Templates for Final Cut Pro

Create an attention-grabbing intro using one of these easy-to-customize templates. You’ll find unique ways to tell your story and set a tone for the rest of your video project.

Use this template to bring a retro feel to your videos. The effects are glitchy and uncannily accurate – but with a modern touch. Nostalgic viewers may go running to the attic to fetch their old VCR.

Announce your news with a classic touch. Your text and images will fit nicely into this newspaper introduction. The presentation is fun, easy to read, and will entertain viewers of all ages.

Slideshow Templates for Final Cut Pro

Slideshow templates let you share multiple photos or videos within a short presentation. The result is an attractive segment that you can use to define your message.

Level up your fashion and lifestyle videos with this incredible slideshow template. You’ll find slick transitions, bold colors, and outstanding typography. Best of all, these elements can be changed to match your brand.

Here’s a professional slideshow template that makes your images and videos look their best. It comes with fun background shapes and transitions to keep viewers engaged. There’s also space to enhance the experience with custom text.

Use this template to create an action-packed promotional video for your business. It’s designed for multi-purpose use and includes plenty of customization options. There are a lot of possibilities here.

Show off your new product line using this fashion-inspired slideshow template. It looks great and includes aspect ratios for different platforms. You'll find versions for 4K, mobile devices, and social media platforms.

Sound Effects for Final Cut Pro

Improve the audio experience of your videos with these free sound effect presets for Final Cut Pro. From atmospheric ambiance to impactful explosions, these presets will add depth and realism to your videos.

This free resource includes ten powerful audio effects for Final Cut Pro X. Apply the right audio effect to your video project exactly when needed.

Color & Light Effect Templates for Final Cut Pro

Improve your videos with rich hues, smooth gradients, and dynamic lighting effects. From vibrant color grading to light flares, these free presets will transform your footage into something exceptional.

Ditch expensive camera filters and get the color look you need with this free resource. The filter works with Final Cut Pro X, Apple Motion 5, and Adobe Premiere Pro, and makes it easy to achieve a high-quality aesthetic across all your video projects.

Opener Templates for Final Cut Pro

These dynamic opener templates for Final Cut Pro will set the tone of your video, capturing the audience’s attention from the start.

Light leak effects are great for adding dynamic flair to your videos. This one is fast-paced, colorful, and modern, making it the perfect way to grab a viewer's attention from the opening scene.

Tear up the screen with this beautifully textured paper effect opener for Final Cut Pro. It features a realistic torn-edge look with bold movement. It’s a unique way to open your presentation with personality.

Bold typography is the calling card for this opening sequence. Combine text with your images and videos to convey a strong message. The effect may be simple, but the result is a video you can’t take your eyes off.

Let your creativity flow with this set of 10 opener templates. There are a variety of professional effects to choose from. Each includes a unique reveal effect and smooth animations. A great way to make a strong first impression.

This sports action opener is sure to fire up the fans. It features bold typography and unforgettable transitions. It’s a great fit for sports teams, journalists, podcasters, and personal trainers. Use it anywhere people are in motion.

This template offers a modern and colorful way to open all your videos. It is perfect for opening title sequences in feature films.

This rhythmic opener template would be a wonderful addition to your Final Cut Pro X toolbox. It allows you to quickly and easily edit text, color, position, and fonts. It is compatible with 720p and 1080p resolutions.

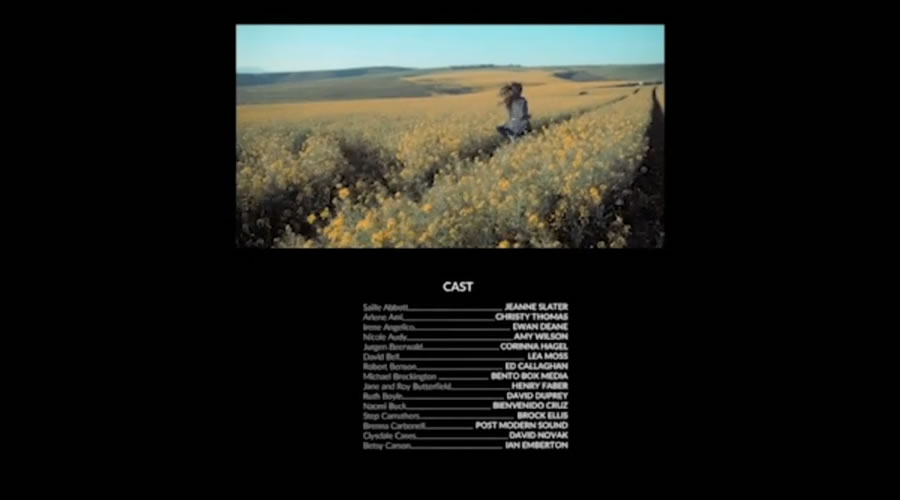

Lower Thirds Templates for Final Cut Pro

These lower-thirds templates for Final Cut Pro blend information and style. They're perfect for adding context or highlighting details.

Promote your social media accounts with this lower-thirds template. It includes the most popular platforms and uses colorful graphics to draw attention. It’s an easy way to get more likes and followers.

Add colorful effects to your lower-thirds content. You’ll find modern typography combined with interesting shapes and movement. The transitions are top-notch and are sure to impress viewers. Use it to make any production look professional.

There are so many possibilities with this lower-thirds template. The options are fast-paced, colorful, and attention-getting. With so many styles to choose from, this suite is one that you’ll use on multiple projects.

Try this template if you want easy-to-use and customizable lower thirds in 1080p for your Final Cut Pro X projects. You can edit the animation and change the duration for each lower third as needed.

This Final Cut Pro X template provides a simple lower third that is quick to use and easy to customize. Adjust the duration, add animation, and use it at resolutions up to 4K.

How To Install Final Cut Pro Presets

Here are the steps to install templates, presets, and plugins in Final Cut Pro:

- Unzip the downloaded preset file. Double-click the ZIP file, and macOS will extract it into a folder.

- Next, select all the presets in the folder and copy them by pressing Command+C.

- Open Finder and hold down the Option key, then choose Go > Library (the Library item only appears in the Go menu while Option is held). Once inside the Library folder, go to Application Support > ProApps > Effects Presets.

- Paste the preset files into the Effects Presets folder by pressing Command+V.

- Finally, restart Final Cut Pro so you can view and use your newly installed presets.
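If you install presets often, the copy-and-paste steps above can be scripted. The Python sketch below is one way to do it, under stated assumptions: it assumes the unzipped download contains loose preset files with the `.effectsPreset` extension (check the actual files in your download; templates or plugins packaged differently will be skipped), and it targets the same Effects Presets folder in your user Library. The `install_presets` helper is hypothetical and not part of Final Cut Pro.

```python
import shutil
from pathlib import Path

# Destination from the manual steps: ~/Library/Application Support/ProApps/Effects Presets
DEFAULT_DEST = Path.home() / "Library" / "Application Support" / "ProApps" / "Effects Presets"

# ASSUMPTION: preset files use this extension; adjust it if your download differs.
PRESET_SUFFIX = ".effectsPreset"


def install_presets(src_dir, dest_dir=DEFAULT_DEST):
    """Copy every preset file in src_dir into dest_dir and return the copied file names.

    Hypothetical helper that mirrors the manual Command+C / Command+V steps.
    """
    src = Path(src_dir)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)  # create the folder if it doesn't exist yet
    copied = []
    for item in sorted(src.iterdir()):
        # Skip subfolders and any file that doesn't look like a preset.
        if item.is_file() and item.suffix == PRESET_SUFFIX:
            shutil.copy2(item, dest / item.name)  # copy2 also preserves timestamps
            copied.append(item.name)
    return copied
```

For example, `install_presets(Path.home() / "Downloads" / "MyPresets")` (the folder name is a placeholder) copies the matching files and returns their names. As with the manual method, restart Final Cut Pro afterward so the new presets appear.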

Final Cut Pro Template FAQs

What is Final Cut Pro?

Final Cut Pro is a video editing application developed by Apple. It’s used for editing and creating professional video content.

Why Use Templates in Final Cut Pro?

Templates make editing faster and easier. They give you a ready-made layout or effect, so you don't have to start from scratch. This is especially helpful if you're short on time or new to video editing.

Are These Templates Really Free?

Yes, the templates are free. You can download and use them without paying anything. Just check their usage rules, as some might have certain conditions.

Can Beginners Use These Templates?

Yes. These templates are great for beginners. They're designed to be easy to use, and working with them helps you learn more about video editing.

How Customizable Are These Templates?

They’re pretty flexible. You can tweak colors, text, and audio to match your project’s style. Each template varies, but generally, there’s a lot you can change to make it your own.

Do I Need Final Cut Pro to Use These Templates?

Yes, you need Final Cut Pro. It's available for Mac users and can be purchased from the Mac App Store. Sometimes, there are free trials available if you want to try it first.

Can I Use These Templates for Commercial Projects?

Many templates are okay for both personal and commercial use. But always read the specific terms for each template, as there might be different rules or restrictions.
