You can use Lightroom look-up tables (LUTs) to improve the look of almost any photo. They offer an easy way to add a wide range of professional effects, making them a must-have addition to every designer’s toolbox.

Today, we’ll introduce you to some fantastic color-grading LUTs for Adobe’s Lightroom. These presets help you tell visual stories by adjusting aspects of your images such as color, saturation, curves, and white balance. Color grading is a way to convey emotion, mood, and even time.

Our collection features a variety of color-grading options. Use them to evoke times of day and seasons, shift color temperature, and create other unique effects. The possibilities are nearly endless!

You might be surprised at what these simple add-ons can accomplish, not to mention the time you’ll save by not having to edit your images manually.

Ready to get started? Keep reading to find the perfect fit for your visual storytelling project.

You may also like our free collection of Lightroom LUTs.

Add warmth to your photos with these Lightroom presets. They’re designed to bring out orange, red, and yellow tones. Use them to enhance landscapes and portraits with a sunny, glowing effect.

Are you looking to add a cool touch to your shots? These LUTs accentuate cool color tones, taking your images to a whole new level. They’re perfect for making your subject pop.

Quickly add beautiful vintage film effects to your images with this collection. You can use these presets to bring out rich film tones and create a sense of magic. You’ll find everything you need to design a classic look.

This LUT collection offers a variety of cinematic styles. You’ll find presets for different color temperatures and moods. It’s an easy way to add a bit of Hollywood to your work.

Enhance your lifestyle photography with this set of muted-tone presets. They’re great for setting a dark or serious mood. Even better, you can apply these eye-catching looks with a single click.

Create just the right mood with these LUTs. Inspired by a good cup of coffee, they bring rich, warm tones to photos. The large number of presets will help you find the perfect effect for your project.

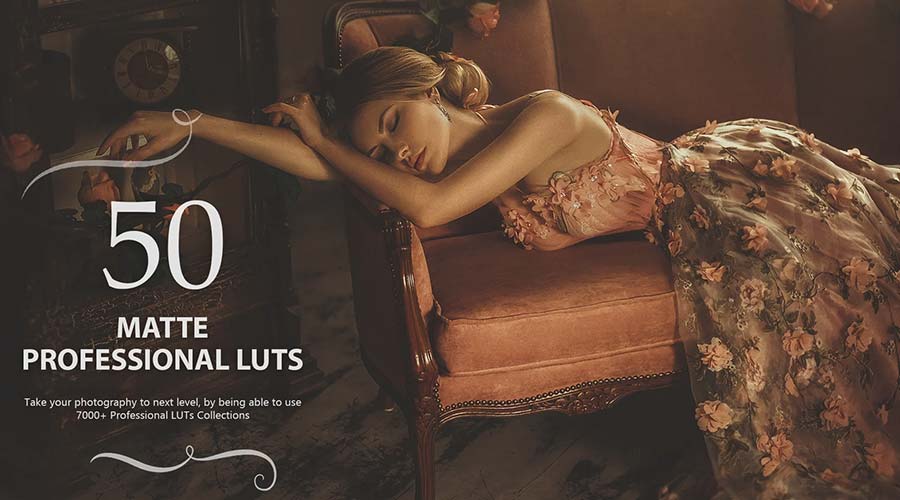

These color-grading LUTs are designed to add cinematic tones to your photographs. Give your images a dramatic matte finish in an instant. You can also adjust these presets to fit your needs.

Soft pastel tones are great for portrait and landscape photography. They add a gentle touch and create a light mood. This LUT collection will help you create a look that’s sure to make people smile.

Enhance your urban landscapes with this set of desaturated Lightroom LUTs. Use them to create a moody and contemporary look with just a click. You’ll find a variety of presets here to achieve your desired result.

Few things stand out like an image with rich color contrast. These presets will help you create high-end contrast effects with minimal effort. Add them to your collection and bring out the best in your photos.

The warm glow of the “golden hour” is a longtime staple of photography. The color-grading LUTs in this pack can help you enhance or even simulate the effect. Best of all, they work well with just about any photograph.

Use this collection of presets to add cool blue tones to your photos. They can bring a moody vibe to your landscapes and portraits. The effect is gentle on the eyes and easy to implement.

Bring your images to life with a bright, sun-kissed effect. The presets in this collection can enhance even the dullest low-light photos. The gorgeous glow of a sunset is within your reach.

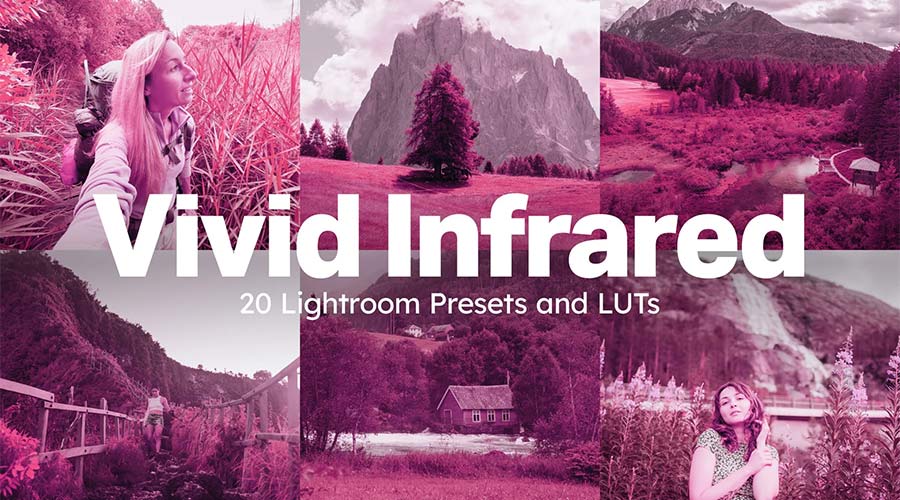

Here’s a fun collection to make your images look otherworldly. Multiple styles are available, each with a unique spin on infrared film effects. Experiment with these presets to discover a whole world of possibilities.

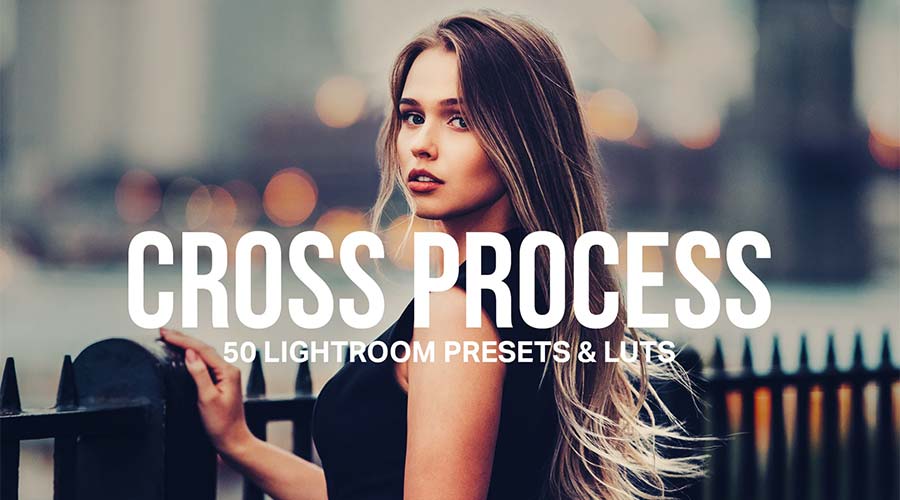

Make your colors stand out with this set of cross-processed LUTs. Choose the color you want to emphasize, and the preset will do the rest. It’s a great way to add a dominant hue to your photos.

These LUTs desaturate your images for an understated tone. They’re an excellent choice for fashion and landscape photos that call for a touch of nostalgia. You’ll have professional effects in no time.

Add a vibrant touch with these color-popping presets. You can turn your images from dull to vivid with just one click. Use them to create attention-grabbing photos for social media, the web, or print.

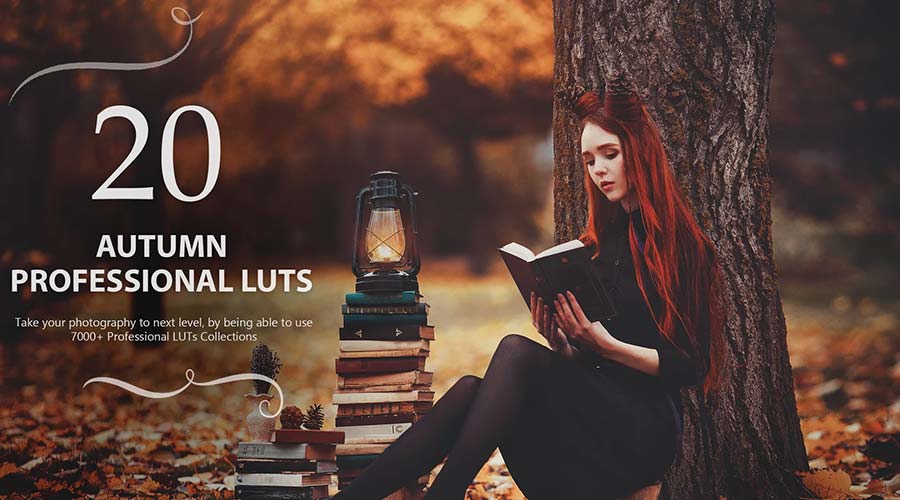

Transform your photos with rich autumn tones that evoke the season’s spirit. Each LUT offers cinematic quality, with an array of options to choose from. It’s a simple way to decorate your images for fall!

Think of lush greens, pastel yellows, and bright whites. Bring out these springtime colors with a collection that’s blooming with potential. It’s perfect for outdoor nature shots and portraits.

Turn up the intensity with this collection of summer-themed LUTs. They’re designed to accentuate the rich, warm tones of your photos. Use them to beautify your summer shots without breaking a sweat.

These presets create a sharp and cool style reminiscent of winter. Each option focuses on a different shade, giving you multiple ways to make a statement. Use them on fashion, lifestyle, and outdoor images.