The new AI model represents a milestone in gameplay ideation.

Next Week in The Sequence:

Our series about RAG continues with a deep dive into GraphRAG, which was recently created by Microsoft. Speaking of Microsoft, we discuss the amazing rStar-Math technique to improve math reasoning in LLMs. The engineering section dives into Composio, which has become one of the most popular stacks for integrating tools with LLMs. Finally, the opinion section discusses whether we are seeing a renaissance in reinforcement learning, or not 😉

📝 Editorial: Microsoft Muse Can Generate Entire Games After Watching You Play

Games have played a monumental role in the evolution of AI. From creating training environments to simulating real-world conditions, games are incredible catalysts for AI learning. A new area known as world action models is rapidly emerging to combine games and AI. Microsoft just dropped exciting research in this area with a model that can create games after watching human players.

Sounds crazy? Let’s discuss.

Microsoft Research has introduced Muse, a cutting-edge generative AI model designed to revolutionize gameplay ideation by generating both game visuals and controller actions. Known as a World and Human Action Model (WHAM), Muse acts as a digital collaborator that understands and extends video game dynamics using real human gameplay data. This initiative reflects a growing trend of integrating AI to enhance—not replace—the creative process within the gaming industry.

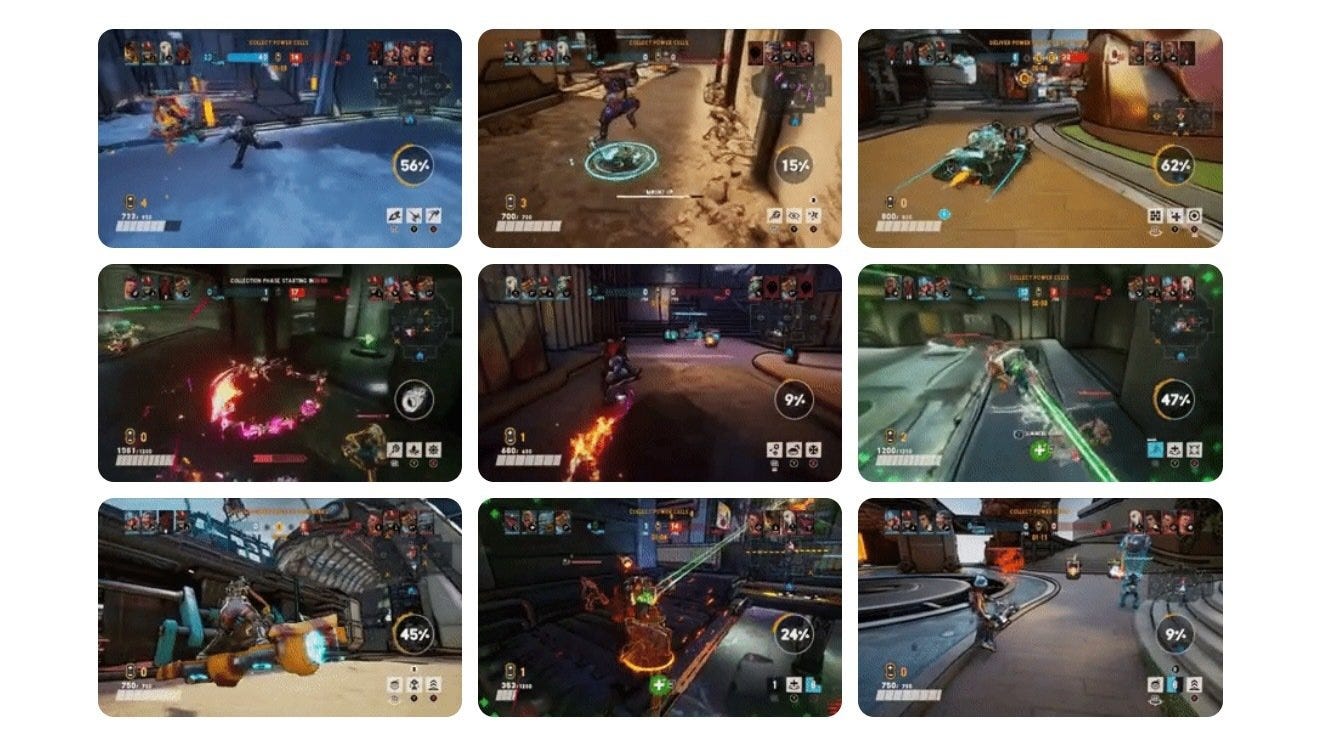

Muse is trained on human gameplay data from the Xbox game Bleeding Edge, learning from over 1 billion images and controller actions—equivalent to more than seven years of continuous gameplay. By analyzing this extensive dataset, Muse can generate complex gameplay sequences that remain consistent and engaging over extended periods. Its architecture enables it to predict how a game might evolve from an initial sequence, capturing the nuanced interplay of visuals, player actions, and game physics.

A standout feature of Muse is its interactive prototype—the WHAM Demonstrator. This tool empowers developers to load visual prompts and generate multiple gameplay continuations, allowing for hands-on exploration of different scenarios. This iterative approach helps developers quickly visualize, tweak, and refine gameplay concepts, unlocking new levels of creative experimentation.

To ensure Muse’s output meets the demands of game development, Microsoft Research evaluates the model across three key characteristics:

- Consistency: Muse maintains realistic character movements, interactions, and environmental dynamics that align with the game’s mechanics.
- Diversity: From a single prompt, Muse can generate a wide range of gameplay variants, encouraging creative exploration of different possibilities.
- Persistency: The model can incorporate and retain user modifications, ensuring that new elements integrate seamlessly into the gameplay environment.

With Muse, Microsoft is paving the way for a future where AI serves as a creative partner—expanding the boundaries of what’s possible in game design while keeping human creativity at the forefront.

🔎 AI Research

SWE-Lancer

In SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering, OpenAI introduces SWE-Lancer, a benchmark designed to evaluate model performance in real-world, freelance software engineering tasks, mapping model capabilities to actual monetary value and assessing complex, full-stack software engineering and management skills. The SWE-Lancer Diamond is a public evaluation split containing $500,800 worth of tasks.

The AI Co-Scientist

Researchers from Google Cloud AI Research, Google Research, Google DeepMind, Houston Methodist, Sequome, Fleming Initiative and Imperial College London, and Stanford University introduce an AI co-scientist, a multi-agent system built on Gemini 2.0, designed to assist scientists in generating novel hypotheses and research proposals. The system uses a generate, debate, and evolve approach to improve hypothesis quality, with validations in drug repurposing, novel target discovery, and explaining mechanisms of bacterial evolution and anti-microbial resistance.

Muse

In the paper World and Human Action Models towards gameplay ideation, researchers from Microsoft Research introduce Muse, a generative model of a type they call a World and Human Action Model (WHAM). Muse is designed to generate consistent and diverse gameplay sequences and to persist user modifications, three capabilities the authors identify as critical for creative ideation in game development.

Autellix

In the paper Autellix: An Efficient Serving Engine for LLM Agents as General Programs, researchers from UC Berkeley, Google DeepMind, and Shanghai Jiao Tong University introduce Autellix, an LLM serving system designed to minimize end-to-end latencies by treating programs as first-class citizens. Autellix intercepts LLM calls, enriches schedulers with program-level context, and uses scheduling algorithms to preempt and prioritize calls based on previously completed calls, improving throughput by 4-15x compared to state-of-the-art systems.
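To make the idea of program-level scheduling a bit more concrete, here is a minimal sketch. It is not Autellix's implementation: the class `ProgramAwareQueue`, its method names, and the "least service attained so far goes first" rule are all assumptions chosen for illustration, and the sketch omits preemption entirely.

```python
import heapq
from collections import defaultdict


class ProgramAwareQueue:
    """Toy scheduler (hypothetical): calls from programs that have
    consumed less service so far are dequeued first."""

    def __init__(self):
        # program_id -> total service time of its completed calls
        self._attained = defaultdict(float)
        self._heap = []
        self._counter = 0  # tie-breaker that preserves submission order

    def submit(self, program_id, call_id):
        # Priority is fixed at submission time from the program's history.
        priority = self._attained[program_id]
        heapq.heappush(self._heap, (priority, self._counter, program_id, call_id))
        self._counter += 1

    def next_call(self):
        # Pop the call whose program has attained the least service.
        _, _, program_id, call_id = heapq.heappop(self._heap)
        return program_id, call_id

    def complete(self, program_id, service_time):
        # Record finished work so later calls from this program rank lower.
        self._attained[program_id] += service_time
```

The point of the sketch is only that the scheduler's decision depends on what the whole program has already done, not on each LLM call in isolation.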

Can Small Models Reason?

In the paper Small Models Struggle to Learn from Strong Reasoners, researchers from the University of Washington, Carnegie Mellon University, and Western Washington University find that small language models (≤3B parameters) do not consistently benefit from long chain-of-thought (CoT) reasoning or distillation from larger models. The paper introduces Mix Distillation, a strategy that balances reasoning complexity by combining long and short CoT examples, which significantly improves small model reasoning performance.
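As a rough illustration of combining long and short CoT examples into one training set, the sketch below samples from two pools at a fixed ratio. The function name `mix_distillation`, the `long_ratio` parameter, and its default value are assumptions made for this example; the summary above does not state the ratio or the sampling procedure the authors use.

```python
import random


def mix_distillation(long_cot, short_cot, long_ratio=0.2, size=None, seed=0):
    """Build a mixed training set from long and short CoT examples.

    long_ratio is a hypothetical knob: the fraction of the output
    drawn from the long-CoT pool.
    """
    rng = random.Random(seed)
    if size is None:
        size = len(long_cot) + len(short_cot)
    # Cap each share by what is actually available in its pool.
    n_long = min(round(size * long_ratio), len(long_cot))
    n_short = min(size - n_long, len(short_cot))
    mixed = rng.sample(long_cot, n_long) + rng.sample(short_cot, n_short)
    rng.shuffle(mixed)  # avoid grouping examples by length
    return mixed
```

The resulting list would then feed an ordinary fine-tuning pipeline for the small model.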

Qwen2.5-VL

In the paper Qwen2.5-VL Technical Report, researchers from Alibaba Group introduce Qwen2.5-VL, the latest flagship model of the Qwen vision-language series, which demonstrates advancements in visual recognition, object localization, document parsing, and long-video comprehension. The model introduces dynamic resolution processing and absolute time encoding, allowing it to process images of varying sizes and videos of extended durations with second-level event localization.

🤖 AI Tech Releases

Grok 3

xAI unveiled Grok 3, its most advanced model with impressive benchmark results.

SmolVLM2

Hugging Face open-sourced SmolVLM2, a video foundation model that can run on small devices.

PaliGemma 2 mix

Google released PaliGemma 2 mix, a vision language model built on its Gemma family.

📡 AI Radar

- Together AI announced a $305 million Series B.
- Former OpenAI CTO Mira Murati disclosed details about her new startup, Thinking Machines.
- Safe Superintelligence, the company started by Ilya Sutskever, is raising more than $1 billion at a valuation of over $30 billion.
- AI hardware startup Humane AI is shutting down and selling to HP.
- Spotify and ElevenLabs partnered to support AI-narrated audiobooks.
- AI coding startup Codeium is raising capital at a monster valuation.
- Meta announced LlamaCon, its first gen AI developer conference.
- AI marketing platform Hightouch announced it has raised $80 million in a new round.
- AI legal platform Luminance raised $75 million in a Series C.
- Sales AI platform Aomni announced a $4 million round.
- Accenture announced a strategic investment in Voltron Data to streamline large-scale data processing.
- Industrial AI company Augury raised $75 million in new funding.