Some perspectives on how foundation models inspired a new era in reinforcement learning.

I know, I know, the title is pretty controversial, but hopefully it caught your attention 😉

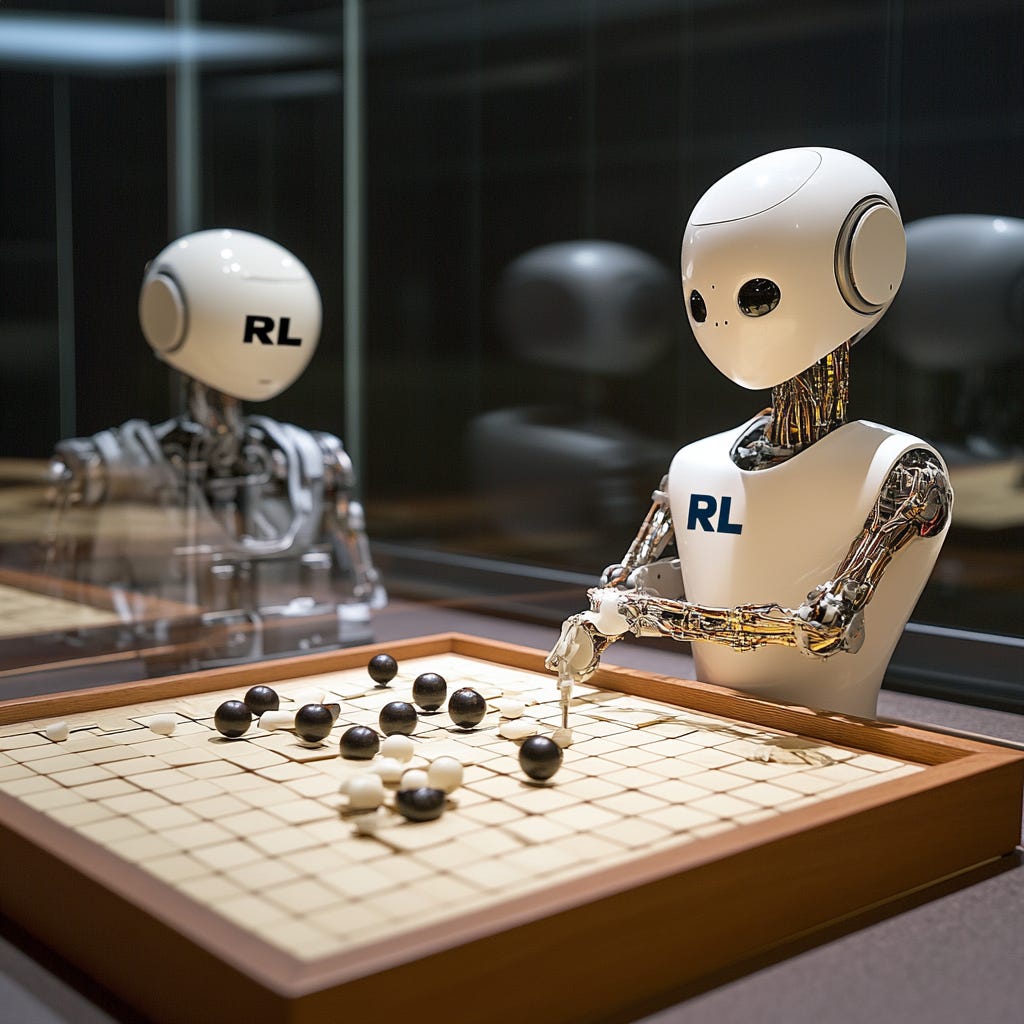

These days we are hearing more about Reinforcement Learning (RL) in the world of generative AI. Foundation models have served as something of a forcing function in the renaissance of RL, which, as an AI method, faced quite a few challenges over the last few years. RL has long been heralded as a general framework for achieving artificial intelligence, promising agents that learn optimal behavior through trial and error. In 2016, DeepMind’s AlphaGo defeated a world champion at the complex board game Go, stunning the world and raising expectations sky-high. AlphaGo’s success suggested that deep neural networks trained with RL, combined with tree search, could crack problems once thought out of reach. Indeed, in the aftermath of this breakthrough, many viewed RL as a potential path to artificial general intelligence (AGI), fueling tremendous hype and investment. Yet reality proved more sobering: after AlphaGo, RL’s impact beyond controlled settings remained limited, and progress toward broader AI applications stalled.

In recent years, however, RL has experienced a revival, not by conquering new board games but by becoming an integral part of foundation model development. Foundation models such as large language models (e.g., GPT-3 and GPT-4) are pretrained on massive datasets via self-supervised learning. While these models acquire vast knowledge and linguistic capability, they initially lack alignment with human preferences, often struggle with complex reasoning, and can be unreliable. RL has reemerged as a powerful tool for fine-tuning these foundation models after pretraining, aligning them with human intentions and even improving their problem-solving skills. In this essay, we explore how the RL field went from the heights of AlphaGo’s triumph, through a period of tempered expectations, to a renaissance as a critical component in the age of foundation models. We examine the high hopes and subsequent challenges after AlphaGo, the incorporation of RL into fine-tuning large models (often via reinforcement learning from human feedback, or RLHF), DeepSeek R1 as a landmark in this evolution, and the broader implications of this trend for AI development and deployment.