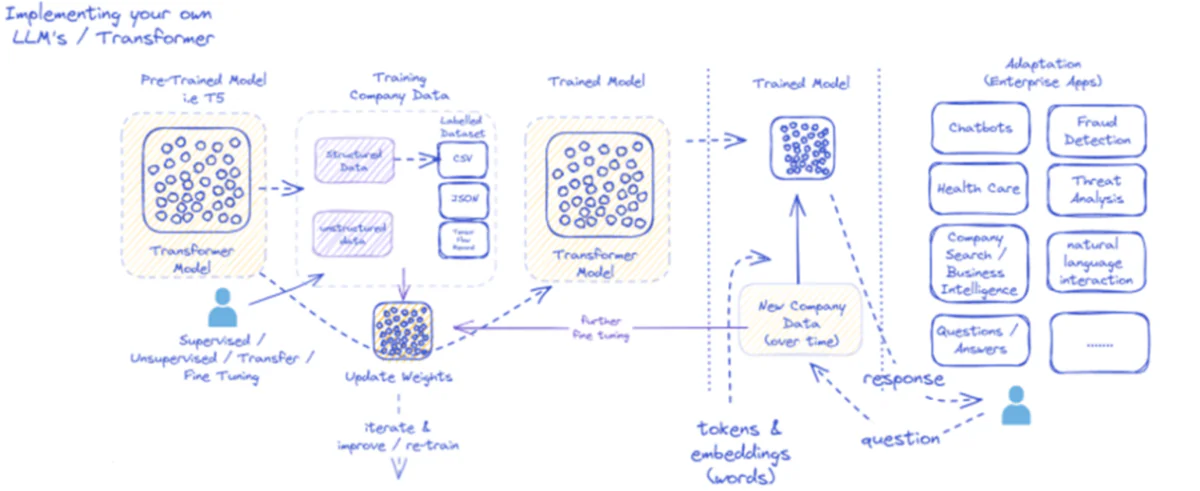

Fine-tuning large language models (LLMs) like Llama 3 involves adapting a pre-trained model to specific tasks using a domain-specific dataset. This process leverages the model’s pre-existing knowledge, making it efficient and cost-effective compared to training from scratch. In this guide, we’ll walk through the steps to fine-tune Llama 3 using QLoRA (Quantized LoRA), a parameter-efficient method that minimizes memory usage and computational costs.

Overview of Fine-Tuning

Fine-tuning involves several key steps:

- Selecting a Pre-trained Model: Choose a base model that aligns with your desired architecture.

- Gathering a Relevant Dataset: Collect and preprocess a dataset specific to your task.

- Fine-Tuning: Adapt the model using the dataset to improve its performance on specific tasks.

- Evaluation: Assess the fine-tuned model using both qualitative and quantitative metrics.

Concepts and Techniques

Full Fine-Tuning

Full fine-tuning updates all the parameters of the model, making it specific to the new task. This method requires significant computational resources and is often impractical for very large models.

Parameter-Efficient Fine-Tuning (PEFT)

PEFT updates only a subset of the model’s parameters, reducing memory requirements and computational cost. Because most of the pre-trained weights stay frozen, it also reduces the risk of catastrophic forgetting and helps preserve the model’s general knowledge.

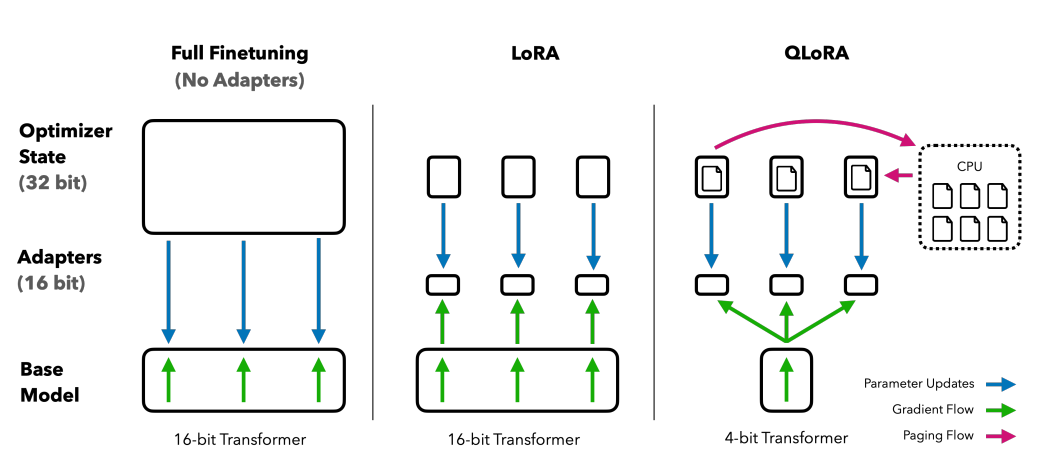

Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA)

LoRA freezes the pre-trained weights and fine-tunes only a small number of low-rank matrices. QLoRA additionally quantizes the frozen base model to 4-bit precision, which reduces the memory footprint further.

Fine-Tuning Methods

- Full Fine-Tuning: This involves training all the parameters of the model on the task-specific dataset. While this method can be very effective, it is also computationally expensive and requires significant memory.

- Parameter-Efficient Fine-Tuning (PEFT): PEFT updates only a subset of the model’s parameters, making it more memory-efficient. Techniques like Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA) fall into this category.

What is LoRA?

Figure: Comparing fine-tuning methods. QLoRA enhances LoRA with 4-bit quantization and paged optimizers for managing memory spikes.

LoRA is a fine-tuning method in which the weights of the pre-trained model are frozen. Instead of updating a large weight matrix directly, two much smaller matrices are trained whose product approximates the update to that matrix. These matrices constitute the LoRA adapter. The fine-tuned adapter is then loaded alongside the pre-trained model (or merged into it) and used for inference.
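To make the idea concrete, here is a minimal NumPy sketch of a single LoRA-adapted linear layer, following the formulation in the LoRA paper: the frozen weight `W` is untouched, and the trainable update is the product `B @ A`, scaled by `alpha / r`. The layer sizes, rank `r`, and `alpha` are arbitrary illustrative values, and training itself is not shown; the "trained" `B` is simply random.

```python
import numpy as np

rng = np.random.default_rng(0)

d_in, d_out, r, alpha = 64, 32, 4, 8  # illustrative sizes, not taken from any real model

W = rng.normal(size=(d_out, d_in))          # frozen pre-trained weight
A = rng.normal(scale=0.01, size=(r, d_in))  # trainable, small random init
B = np.zeros((d_out, r))                    # trainable, zero init so B @ A starts at 0
scaling = alpha / r

def lora_forward(x):
    """Frozen path plus the scaled low-rank update: W x + (alpha / r) * B A x."""
    return W @ x + scaling * (B @ (A @ x))

x = rng.normal(size=d_in)

# At initialization the adapter contributes nothing, so outputs match the base layer.
assert np.allclose(lora_forward(x), W @ x)

# Pretend training has changed B; the adapter can then be merged into one matrix
# for inference without changing the layer's output.
B = rng.normal(size=(d_out, r))
W_merged = W + scaling * (B @ A)
assert np.allclose(lora_forward(x), W_merged @ x)
```

Initializing `B` to zero means the adapted model starts out identical to the base model, and merging, as in the last lines, is what lets a LoRA model run inference without an extra matrix multiplication per layer.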

Key Advantages of LoRA:

- Memory Efficiency: LoRA reduces the memory footprint by fine-tuning only small matrices instead of the entire model.

- Reusability: The original model remains unchanged, so several LoRA adapters can be trained for it and swapped in as needed. One base model can then serve multiple tasks with far less memory than several fully fine-tuned copies.
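A quick count shows where the savings come from. Assuming a hypothetical 4096 × 4096 projection matrix and rank `r = 8` (both illustrative values), the adapter trains `B` (d_out × r) and `A` (r × d_in) instead of the full matrix:

```python
def lora_param_count(d_out, d_in, r):
    """Trainable parameters in one LoRA adapter: B is d_out x r, A is r x d_in."""
    return d_out * r + r * d_in

d = 4096  # hypothetical square projection size
full = d * d
lora = lora_param_count(d, d, r=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Because each adapter is this small, keeping one per task costs little compared with storing a full copy of the model for every task.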

What is Quantized LoRA (QLoRA)?

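QLoRA takes LoRA a step further by quantizing the frozen weights of the pre-trained base model to 4-bit precision (using the NF4 data type), while the LoRA adapters themselves are trained in 16-bit precision. It also introduces double quantization, which quantizes the quantization constants themselves, and paged optimizers, which absorb memory spikes during training. Together these reduce memory usage further while maintaining a comparable level of effectiveness.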

Key Advantages of QLoRA:

- Even Greater Memory Efficiency: By storing the base model’s weights in 4-bit instead of 16-bit precision, QLoRA cuts the memory needed to hold the model to roughly a quarter.

- Maintains Performance: Despite the reduced precision, QLoRA has been reported to reach performance close to that of 16-bit fine-tuning.
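The arithmetic behind the memory savings is simple. This sketch estimates the memory needed for the weights alone of a model with roughly 8 billion parameters (about the size of Llama 3 8B); it deliberately ignores per-block quantization constants, activations, the LoRA adapters, optimizer states, and framework overhead, so real usage is higher.

```python
def weight_memory_gib(n_params, bits_per_param):
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    return n_params * bits_per_param / 8 / 2**30

n_params = 8e9  # roughly an 8B-parameter model
for label, bits in [("fp16/bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>9}: {weight_memory_gib(n_params, bits):5.1f} GiB")
```

At 16 bits this comes to about 14.9 GiB, and at 4 bits about 3.7 GiB, which is why a 4-bit base model can fit on a single consumer or free-tier GPU.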

Task-Specific Adaptation

During fine-tuning, the model’s parameters are adjusted based on the new dataset, helping it better understand and generate content relevant to the specific task. This process retains the general language knowledge gained during pre-training while tailoring the model to the nuances of the target domain.

Fine-Tuning in Practice

Full Fine-Tuning vs. PEFT

- Full Fine-Tuning: Involves training the entire model, which can be computationally expensive and requires significant memory.

- PEFT (LoRA and QLoRA): Fine-tunes only a subset of parameters, reducing memory requirements and the risk of catastrophic forgetting, making it a more efficient alternative.

Implementation Steps

- Set Up the Environment: Install the necessary libraries and set up the computing environment.

- Load and Preprocess Dataset: Load the dataset and preprocess it into a format suitable for the model.

- Load Pre-trained Model: Load the base model with quantization configurations if using QLoRA.

- Tokenization: Tokenize the dataset to prepare it for training.

- Training: Fine-tune the model using the prepared dataset.

- Evaluation: Evaluate the model’s performance on specific tasks using qualitative and quantitative metrics.

Step-by-Step Guide to Fine-Tuning an LLM

Setting Up the Environment

We’ll use a Jupyter notebook for this tutorial. Platforms that offer free GPU access, such as Kaggle or Google Colab, are well suited to running these experiments.

1. Install Required Libraries

First, ensure you have the necessary libraries installed:

```
!pip install -qqq -U bitsandbytes transformers peft accelerate datasets scipy einops evaluate trl rouge_score
```

2. Import Libraries and Set Up Environment

```python
import os
import gc

import torch
import numpy as np
import pandas as pd
from tqdm import tqdm
from datasets import load_dataset
from transformers import (
    AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, TrainingArguments,
    pipeline, HfArgumentParser
)
from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training
from trl import ORPOConfig, ORPOTrainer, setup_chat_format, SFTTrainer
from huggingface_hub import interpreter_login

# Disable Weights and Biases logging
os.environ['WANDB_DISABLED'] = "true"

# Prompt for a Hugging Face access token (needed for gated models such as Llama 3)
interpreter_login()
```

3. Load the Dataset

We’ll use the DialogSum dataset for this tutorial:

```python
dataset_name = "neil-code/dialogsum-test"
dataset = load_dataset(dataset_name)
```

Before training, the dataset is preprocessed according to the model’s requirements, which includes applying an appropriate prompt template and ensuring the data format is suitable for fine-tuning (Hugging Face) (DataCamp).

Inspect the dataset structure:

```python
print(dataset['test'][0])
```

4. Create BitsAndBytes Configuration

To load the model in 4-bit format:

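```python
compute_dtype = getattr(torch, "float16")  # dtype used for computation on de-quantized weights

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,                     # store the base model's weights in 4-bit
    bnb_4bit_quant_type='nf4',             # NormalFloat4 data type introduced with QLoRA
    bnb_4bit_compute_dtype=compute_dtype,
    bnb_4bit_use_double_quant=False,       # True would also quantize the quantization constants
)
```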
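The configuration above asks bitsandbytes for NF4, which maps each weight to one of 16 levels spaced according to a normal distribution. The sketch below substitutes a simpler symmetric absmax scheme (integer levels from -7 to 7) purely to illustrate block-wise 4-bit quantization: each block of 64 weights (an assumed block size) keeps one floating-point scale plus small integer codes. It is not the bitsandbytes implementation, and for readability the codes are stored one per `int8` rather than packed two per byte.

```python
import numpy as np

def quantize_blockwise(w, block_size=64):
    """Simplified symmetric absmax quantization of a 1-D array to 4-bit codes in [-7, 7]."""
    blocks = w.reshape(-1, block_size)
    scales = np.abs(blocks).max(axis=1, keepdims=True) / 7.0  # one scale per block
    scales[scales == 0] = 1.0                                  # avoid dividing by zero for all-zero blocks
    codes = np.clip(np.round(blocks / scales), -7, 7).astype(np.int8)
    return codes, scales

def dequantize_blockwise(codes, scales):
    """Recover approximate weights from the integer codes and per-block scales."""
    return (codes * scales).reshape(-1)

rng = np.random.default_rng(0)
w = rng.normal(size=1024).astype(np.float32)  # toy "weights"; length must be a multiple of 64
codes, scales = quantize_blockwise(w)
w_hat = dequantize_blockwise(codes, scales)
print("max abs error:", np.abs(w - w_hat).max())
```

Per-block scales keep a single outlier from degrading the precision of the whole tensor; double quantization then compresses those scales as well.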

5. Load the Pre-trained Model

Using Microsoft’s Phi-2 model for this tutorial:

```python
model_name = 'microsoft/phi-2'
device_map = {"": 0}  # place the whole model on GPU 0

original_model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map=device_map,
    quantization_config=bnb_config,
    trust_remote_code=True,
    token=True,  # use the saved login token; replaces the deprecated use_auth_token
)
```

6. Tokenization

Configure the tokenizer:

```python
tokenizer = AutoTokenizer.from_pretrained(
    model_name,
    trust_remote_code=True,
    padding_side="left",
    add_eos_token=True,
    add_bos_token=True,
    use_fast=False
)
tokenizer.pad_token = tokenizer.eos_token
```
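Why `padding_side="left"`? A decoder-only model generates from the last position of each row, so padding has to go on the left to keep every prompt’s final real token at the end; the attention mask then tells the model to ignore the pad positions, which is also why reusing the EOS token as the pad token is workable. The sketch below uses made-up token IDs and a hypothetical pad ID of 2 to show the resulting `input_ids` and `attention_mask`.

```python
def left_pad(sequences, pad_id):
    """Left-pad token-ID lists to equal length and build matching attention masks."""
    width = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        n_pad = width - len(seq)
        input_ids.append([pad_id] * n_pad + seq)
        attention_mask.append([0] * n_pad + [1] * len(seq))
    return input_ids, attention_mask

PAD_ID = 2  # hypothetical; in this guide the pad token is the tokenizer's EOS token
ids, mask = left_pad([[11, 12, 13], [21, 22]], PAD_ID)
print(ids)   # [[11, 12, 13], [2, 21, 22]]
print(mask)  # [[1, 1, 1], [0, 1, 1]]
```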

Fine-Tuning Llama 3 or Other Models

Fine-tuning Llama 3 or other state-of-the-art open-weight LLMs requires a few model-specific adjustments to the steps above: the correct model identifier, a matching tokenizer configuration, and enough memory. The following sections cover Llama 3 and Mistral, and explain why GPT-3 cannot be fine-tuned this way.

5.1 Using Llama 3

Model Selection:

- Ensure you have the correct model identifier from the Hugging Face Hub. For example, the Llama 3 8B base model is identified as `meta-llama/Meta-Llama-3-8B` on Hugging Face.

- Llama 3 is a gated model: request access on its model page and log in to your Hugging Face account before downloading it (Hugging Face).

Tokenization:

- Use the appropriate tokenizer for Llama 3, ensuring it is compatible with the model and supports required features like padding and special tokens.

Memory and Computation:

- Fine-tuning large models like Llama 3 requires significant GPU memory and compute. Make sure your environment can meet these requirements; techniques like QLoRA reduce the memory footprint considerably (Hugging Face Forums).

Example:

```python
model_name = 'meta-llama/Meta-Llama-3-8B'
device_map = {"": 0}

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.float16,
    bnb_4bit_use_double_quant=True,  # also quantize the quantization constants to save memory
)

original_model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map=device_map,
    quantization_config=bnb_config,
    trust_remote_code=True,
    token=True,  # gated model: requires a logged-in account with approved access
)
```

Tokenization:

Configure the tokenizer for the specific model and use case, avoiding redundant settings. For example, `use_fast=True` (the Rust-based tokenizer) is recommended for better performance (Hugging Face) (Weights & Biases).

```python
tokenizer = AutoTokenizer.from_pretrained(
    model_name,
    trust_remote_code=True,
    padding_side="left",
    add_eos_token=True,
    add_bos_token=True,
    use_fast=True
)
tokenizer.pad_token = tokenizer.eos_token
```

5.2 Using Other Popular Models (e.g., GPT-3, Mistral)

Model Selection:

- For open-weight models like Mistral, use the exact repository identifier from the Hugging Face Hub. GPT-3, by contrast, has no publicly released weights (see the GPT-3 note below).

Tokenization:

- Similar to Llama 3, make sure the tokenizer is correctly set up and compatible with the model.

Memory and Computation:

- Each model may have different memory requirements. Adjust your environment setup accordingly.

Example for GPT-3:

GPT-3’s weights have not been publicly released, so there is no GPT-3 checkpoint on the Hugging Face Hub to load with `AutoModelForCausalLM`, and it cannot be quantized or fine-tuned with QLoRA. OpenAI’s models can only be fine-tuned through OpenAI’s own hosted fine-tuning API.

Example for Mistral:

```python
model_name = 'mistralai/Mistral-7B-v0.1'  # full Hub repository ID, not just the model name
device_map = {"": 0}

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.float16,
    bnb_4bit_use_double_quant=True,
)

original_model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map=device_map,
    quantization_config=bnb_config,
    trust_remote_code=True,
    token=True,
)
```

Tokenization Considerations: Each model may have unique tokenization requirements. Ensure the tokenizer matches the model and is configured correctly.

Llama 3 Tokenizer Example:

```python
tokenizer = AutoTokenizer.from_pretrained(
    model_name,
    trust_remote_code=True,
    padding_side="left",
    add_eos_token=True,
    add_bos_token=True,
    use_fast=True
)
tokenizer.pad_token = tokenizer.eos_token
```

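Mistral Tokenizer Example:

```python
tokenizer = AutoTokenizer.from_pretrained(
    model_name,
    use_fast=True
)
tokenizer.pad_token = tokenizer.eos_token  # Mistral's tokenizer defines no pad token by default
```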

7. Test the Model with Zero-Shot Inferencing

Evaluate the base model with a sample input:

```python
from transformers import set_seed

set_seed(42)

index = 10
prompt = dataset['test'][index]['dialogue']
formatted_prompt = f"Instruct: Summarize the following conversation.\n{prompt}\nOutput:\n"

def gen(model, prompt, max_new_tokens):
    """Generate a completion for `prompt` and return the decoded text(s)."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    # max_new_tokens bounds only the generated part; max_length would also count
    # the prompt tokens and can leave no room for an answer on long dialogues
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.batch_decode(outputs, skip_special_tokens=True)

res = gen(original_model, formatted_prompt, 100)
output = res[0].split('Output:\n')[1]  # keep only the text after the prompt

print(f'INPUT PROMPT:\n{formatted_prompt}')
print(f'MODEL GENERATION - ZERO SHOT:\n{output}')
```

8. Pre-process the Dataset

Convert dialog-summary pairs into prompts:

```python
def create_prompt_formats(sample):
    """Build an instruction-style training prompt from one dialogue/summary pair."""
    blurb = "Below is an instruction that describes a task. Write a response that appropriately completes the request."
    instruction = "### Instruct: Summarize the below conversation."
    input_context = sample['dialogue']
    response = f"### Output:\n{sample['summary']}"
    end = "### End"

    parts = [blurb, instruction, input_context, response, end]
    formatted_prompt = "\n\n".join(parts)  # blank line between sections
    sample["text"] = formatted_prompt
    return sample

dataset = dataset.map(create_prompt_formats)
```

Tokenize the formatted dataset:

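```python
def preprocess_batch(batch, tokenizer, max_length):
    """Tokenize the formatted prompts, truncating anything longer than max_length tokens."""
    return tokenizer(batch["text"], max_length=max_length, truncation=True)

max_length = 1024
original_columns = dataset["train"].column_names  # raw text columns, dropped after tokenizing

train_dataset = dataset["train"].map(
    lambda batch: preprocess_batch(batch, tokenizer, max_length),
    batched=True, remove_columns=original_columns,
)
eval_dataset = dataset["validation"].map(
    lambda batch: preprocess_batch(batch, tokenizer, max_length),
    batched=True, remove_columns=original_columns,
)
```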
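For causal language modeling, the training labels are usually just the input IDs, with padding positions replaced by -100 (the default `ignore_index` of PyTorch’s cross-entropy loss); Transformers’ `DataCollatorForLanguageModeling` with `mlm=False` builds labels this way, and causal-LM models shift them internally so each position predicts the next token. The pure-Python sketch below mimics both steps on a hypothetical, already left-padded example. Note a side effect of the pad-equals-EOS setup: if the tokenizer appends EOS tokens, they are masked too, so the model may not learn when to stop.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss

def make_clm_labels(input_ids, pad_id):
    """Labels for causal LM training: a copy of input_ids with padding masked out."""
    return [[IGNORE_INDEX if tok == pad_id else tok for tok in row] for row in input_ids]

def shift_for_next_token(input_ids, labels):
    """Pair each input position with the label of the following position."""
    return [list(zip(ids[:-1], labs[1:])) for ids, labs in zip(input_ids, labels)]

PAD_ID = 2                 # hypothetical pad (= EOS) token ID
batch = [[2, 21, 22, 23]]  # one left-padded example with made-up token IDs
labels = make_clm_labels(batch, PAD_ID)
print(labels)                               # [[-100, 21, 22, 23]]
print(shift_for_next_token(batch, labels))  # [[(2, 21), (21, 22), (22, 23)]]
```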

9. Prepare the Model for QLoRA

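Prepare the quantized model for training. Among other things, `prepare_model_for_kbit_training` casts layer norms to float32 and, by default, enables gradient checkpointing:

```python
from peft import prepare_model_for_kbit_training

original_model = prepare_model_for_kbit_training(original_model)
```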

Hyperparameters and Their Impact

Hyperparameters play a crucial role in optimizing the performance of your model. Here are some key hyperparameters to consider:

- Learning Rate: Controls the speed at which the model updates its parameters. A high learning rate might lead to faster convergence but can overshoot the optimal solution. A low learning rate ensures steady convergence but might require more epochs.

- Batch Size: The number of samples processed before the model updates its parameters. Larger batch sizes can improve stability but require more memory. Smaller batch sizes might lead to more noise in the training process.

- Gradient Accumulation Steps: This parameter helps in simulating larger batch sizes by accumulating gradients over multiple steps before performing a parameter update.

- Number of Epochs: The number of times the entire dataset is passed through the model. More epochs can improve performance but might lead to overfitting if not managed properly.

- Weight Decay: Regularization technique to prevent overfitting by penalizing large weights.

- Learning Rate Scheduler: Adjusts the learning rate during training to improve performance and convergence.
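The example configuration in this guide uses `lr_scheduler_type="linear"` with `warmup_steps=10`. The sketch below reproduces the shape of that schedule, mirroring Transformers’ `get_linear_schedule_with_warmup`: a linear ramp from 0 to the peak rate, then a linear decay to 0 at the last step. The peak rate and total step count are illustrative.

```python
def linear_lr(step, peak_lr, warmup_steps, total_steps):
    """Linear warmup from 0 to peak_lr, then linear decay to 0 at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = total_steps - step
    return peak_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))

peak_lr, warmup, total = 1e-4, 10, 100  # illustrative values
for step in (0, 5, 10, 55, 100):
    print(step, linear_lr(step, peak_lr, warmup, total))
```

Warmup keeps the first updates small while the optimizer’s statistics are still unreliable; the decay lets training settle near the end.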

Customize the training configuration by adjusting hyperparameters like learning rate, batch size, and gradient accumulation steps based on the specific model and task requirements. For example, Llama 3 models may require different learning rates compared to smaller models (Weights & Biases) (GitHub)
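Gradient accumulation works because, for a loss averaged over examples, the mean of equally sized micro-batch gradients equals the full-batch gradient. This NumPy sketch checks that on a toy least-squares model (random, hypothetical data), using a micro-batch of 2 and 4 accumulation steps, the values of `per_device_train_batch_size` and `gradient_accumulation_steps` in this guide’s example configuration, for an effective batch size of 8. In LLM training the equivalence is only approximate when micro-batches contain different numbers of non-padding tokens.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))  # 8 examples, 3 features (toy data)
y = rng.normal(size=8)
w = rng.normal(size=3)       # current parameters of a linear model

def grad_mse(Xb, yb, w):
    """Gradient of 0.5 * mean((Xb @ w - yb) ** 2) with respect to w."""
    return Xb.T @ (Xb @ w - yb) / len(yb)

micro_batch, accum_steps = 2, 4  # effective batch = 2 * 4 = 8
accumulated = np.zeros_like(w)
for i in range(accum_steps):
    sl = slice(i * micro_batch, (i + 1) * micro_batch)
    accumulated += grad_mse(X[sl], y[sl], w) / accum_steps  # scale each micro-batch's gradient

full_batch = grad_mse(X, y, w)
print(np.allclose(accumulated, full_batch))  # True
```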

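Example Training Configuration

ORPO (`ORPOConfig`/`ORPOTrainer`) is a preference-optimization method that expects paired `chosen`/`rejected` responses, which DialogSum does not provide. For supervised summarization data, a plain causal-language-modeling setup fits instead: attach LoRA adapters to the prepared 4-bit model and train them with the Hugging Face `Trainer`. The LoRA rank, alpha, and target modules below are illustrative values for Phi-2; other architectures use different module names.

```python
from peft import LoraConfig, get_peft_model
from transformers import TrainingArguments

lora_config = LoraConfig(
    r=32,                     # rank of the adapter matrices
    lora_alpha=32,            # the update is scaled by lora_alpha / r
    target_modules=["q_proj", "k_proj", "v_proj", "dense"],  # Phi-2 attention projections
    lora_dropout=0.05,
    bias="none",
    task_type="CAUSAL_LM",
)
peft_model = get_peft_model(original_model, lora_config)
peft_model.print_trainable_parameters()

training_args = TrainingArguments(
    output_dir="./results/",
    learning_rate=2e-4,             # LoRA usually tolerates higher rates than full fine-tuning
    lr_scheduler_type="linear",
    warmup_steps=10,
    per_device_train_batch_size=2,
    per_device_eval_batch_size=2,
    gradient_accumulation_steps=4,
    optim="paged_adamw_8bit",
    num_train_epochs=1,
    eval_strategy="steps",          # called evaluation_strategy in older transformers releases
    eval_steps=0.2,                 # a float below 1 is read as a fraction of total training steps
    logging_steps=1,
    report_to="none",               # W&B logging is disabled earlier in this guide
)
```

10. Train the Model

Set up the trainer and start training:

```python
from transformers import DataCollatorForLanguageModeling, Trainer

peft_model.config.use_cache = False  # the KV cache is not needed during training

trainer = Trainer(
    model=peft_model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    # mlm=False: labels are the input IDs (causal LM); the model shifts them internally
    data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
)

trainer.train()
trainer.save_model("fine-tuned-llama-3")
```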

Evaluating the Fine-Tuned Model

After training, evaluate the model’s performance using both qualitative and quantitative methods.

1. Human Evaluation

Compare the generated summaries with human-written ones to assess the quality.

2. Quantitative Evaluation

Use metrics like ROUGE to assess performance:

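```python
from rouge_score import rouge_scorer

# reference_summary: the human-written summary, e.g. dataset['test'][index]['summary']
# generated_summary: the fine-tuned model's summary of the same dialogue (a string)
scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)
scores = scorer.score(reference_summary, generated_summary)  # argument order: target, prediction
print(scores)
```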
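To see what ROUGE-1 measures, here is a simplified re-implementation: unigram overlap between the reference and the generated summary, reported as precision, recall, and F1. It only lowercases and splits on whitespace, whereas `rouge_score` also strips punctuation during tokenization and can stem words (`use_stemmer=True`), so its numbers can differ. ROUGE-2 applies the same idea to bigrams, and ROUGE-L uses the longest common subsequence.

```python
from collections import Counter

def rouge1(reference, candidate):
    """Unigram-overlap precision, recall, and F1 (simplified: lowercase + whitespace split)."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())  # matching unigrams, clipped to the smaller count
    precision = overlap / max(1, sum(cand.values()))
    recall = overlap / max(1, sum(ref.values()))
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return precision, recall, f1

p, r, f = rouge1("the cat sat on the mat", "the cat lay on the mat")
print(round(p, 3), round(r, 3), round(f, 3))  # 0.833 0.833 0.833
```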

Common Challenges and Solutions

1. Memory Limitations

Using QLoRA helps mitigate memory issues by quantizing model weights to 4-bit. Ensure you have enough GPU memory to handle your batch size and model size.

2. Overfitting

Monitor validation metrics to prevent overfitting. Use techniques like early stopping and weight decay.
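Early stopping halts training once the validation loss stops improving for a set number of evaluations (the "patience"). The sketch below applies that rule to a list of hypothetical validation losses; Transformers provides `EarlyStoppingCallback` (with `early_stopping_patience` and `early_stopping_threshold`) for use with `Trainer`, which follows the same principle but also requires `load_best_model_at_end` and `metric_for_best_model` to be set.

```python
def early_stopping_step(eval_losses, patience=2, min_delta=0.0):
    """Return the index of the evaluation at which training would stop, or None.

    Stops once the loss has failed to beat the best value by more than
    min_delta for `patience` consecutive evaluations.
    """
    best = float("inf")
    bad_evals = 0
    for i, loss in enumerate(eval_losses):
        if loss < best - min_delta:
            best, bad_evals = loss, 0
        else:
            bad_evals += 1
            if bad_evals >= patience:
                return i
    return None

losses = [1.90, 1.62, 1.55, 1.57, 1.58, 1.50]  # hypothetical validation losses
print(early_stopping_step(losses, patience=2))  # 4
```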

3. Slow Training

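Increase the per-device batch size as far as GPU memory allows. Gradient accumulation lets you reach a larger effective batch size without extra memory, although it does not make individual steps faster, and a well-tuned learning rate can reduce the number of steps needed to converge.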

4. Data Quality

Ensure your dataset is clean and well-preprocessed. Poor data quality can significantly impact model performance.

Conclusion

Fine-tuning LLMs using QLoRA is an efficient way to adapt large pre-trained models to specific tasks with reduced computational costs. By following this guide, you can fine-tune Phi-2, Llama 3, or other open-weight models to improve their performance on your specific tasks.