Graphs are data structures that represent complex relationships across a wide range of domains, including social networks, knowledge bases, biological systems, and many more. In these graphs, entities are represented as nodes, and their relationships are depicted as edges.

The ability to effectively represent and reason about these intricate relational structures is crucial for enabling advancements in fields like network science, cheminformatics, and recommender systems.

Graph Neural Networks (GNNs) have emerged as a powerful deep learning framework for graph machine learning tasks. By incorporating the graph topology into the neural network architecture through neighborhood aggregation or graph convolutions, GNNs can learn low-dimensional vector representations that encode both the node features and their structural roles. This allows GNNs to achieve state-of-the-art performance on tasks such as node classification, link prediction, and graph classification across diverse application areas.

While GNNs have driven substantial progress, some key challenges remain. Obtaining high-quality labeled data for training supervised GNN models can be expensive and time-consuming. Additionally, GNNs can struggle with heterogeneous graph structures and situations where the graph distribution at test time differs significantly from the training data (out-of-distribution generalization).

In parallel, Large Language Models (LLMs) such as GPT-4 and LLaMA have taken the world by storm with their natural language understanding and generation capabilities. Trained on massive text corpora and scaled to billions of parameters, LLMs exhibit remarkable few-shot learning, generalization across tasks, and commonsense reasoning skills that were once considered extremely challenging for AI systems.

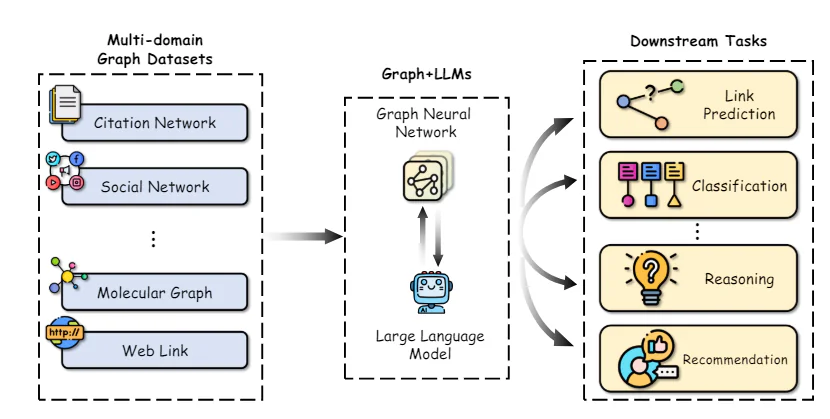

The tremendous success of LLMs has spurred efforts to leverage their power for graph machine learning tasks. On one hand, the knowledge and reasoning capabilities of LLMs present opportunities to enhance traditional GNN models. On the other hand, the structured representations and factual knowledge inherent in graphs could help address some key limitations of LLMs, such as hallucinations and a lack of interpretability.

In this article, we will delve into the latest research at the intersection of graph machine learning and large language models. We will explore how LLMs can be used to enhance various aspects of graph ML, review approaches to incorporate graph knowledge into LLMs, and discuss emerging applications and future directions for this exciting field.

Graph Neural Networks and Self-Supervised Learning

To provide the necessary context, we will first briefly review the core concepts and methods in graph neural networks and self-supervised graph representation learning.

Graph Neural Network Architectures

Graph Neural Network Architecture – source

The key distinction between traditional deep neural networks and GNNs lies in their ability to operate directly on graph-structured data. GNNs follow a neighborhood aggregation (message-passing) scheme: at each layer, every node aggregates the feature vectors of its neighbors and combines the result with its own representation through an update function. Stacking k such layers lets information reach each node from nodes up to k hops away.
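To make the aggregation scheme concrete, here is a minimal NumPy sketch of a single layer in the spirit of GraphSAGE's mean aggregator: average the neighbors' features, concatenate the result with the node's own features, then apply a linear map and a ReLU. The adjacency-list format, the function name `mean_aggregate_layer`, and the all-ones weights are illustrative assumptions; real libraries vectorize this with sparse matrix operations instead of a Python loop.

```python
import numpy as np

def mean_aggregate_layer(features, neighbors, weight):
    """One message-passing layer with a GraphSAGE-style mean aggregator.

    features:  (num_nodes, in_dim) node feature matrix
    neighbors: dict mapping each node id to a list of its neighbor ids
    weight:    (2 * in_dim, out_dim) update matrix (learned in a real model)
    """
    num_nodes, in_dim = features.shape
    out = np.zeros((num_nodes, weight.shape[1]))
    for v in range(num_nodes):
        nbrs = neighbors.get(v, [])
        # Aggregate: average the neighbors' feature vectors (zeros for isolated nodes).
        agg = features[nbrs].mean(axis=0) if nbrs else np.zeros(in_dim)
        # Update: concatenate the node's own features with the aggregate,
        # apply a linear map, then a ReLU nonlinearity.
        out[v] = np.maximum(np.concatenate([features[v], agg]) @ weight, 0.0)
    return out

features = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
neighbors = {0: [1, 2], 1: [0], 2: [0]}
weight = np.ones((4, 2))  # placeholder weights; a real model learns these
h = mean_aggregate_layer(features, neighbors, weight)
```

Stacking two such layers would let node 1 receive information from node 2 through their shared neighbor, node 0.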

Numerous GNN architectures have been proposed that differ in how they instantiate the message, aggregation, and update functions, including Graph Convolutional Networks (GCNs), GraphSAGE, Graph Attention Networks (GATs), and Graph Isomorphism Networks (GINs).
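For reference, the scheme can be written in a common general form, with GCN and GIN as two standard instances. The notation is ours: $h_v^{(k)}$ is node $v$'s representation after layer $k$, $\mathcal{N}(v)$ its neighbors, $A$ the adjacency matrix, and $H^{(k)}$ the stacked node representations.

```latex
% General message-passing layer
h_v^{(k)} = \mathrm{UPDATE}^{(k)}\left( h_v^{(k-1)},\;
  \mathrm{AGGREGATE}^{(k)}\left( \left\{ \mathrm{MESSAGE}^{(k)}\big(h_v^{(k-1)}, h_u^{(k-1)}\big) : u \in \mathcal{N}(v) \right\} \right) \right)

% GCN: symmetric-normalized sum over neighbors, with self-loops added
H^{(k)} = \sigma\left( \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(k-1)} W^{(k)} \right),
\qquad \tilde{A} = A + I, \qquad \tilde{D}_{ii} = \textstyle\sum_j \tilde{A}_{ij}

% GIN: sum aggregation, a learnable self-weight (1 + \epsilon), then an MLP
h_v^{(k)} = \mathrm{MLP}^{(k)}\left( \big(1 + \epsilon^{(k)}\big)\, h_v^{(k-1)} + \sum_{u \in \mathcal{N}(v)} h_u^{(k-1)} \right)
```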

More recently, graph transformers have gained popularity by adapting the self-attention mechanism of Transformers from natural language processing to graph-structured data. Examples include Graphormer and GraphFormers. Because self-attention can relate nodes directly rather than only through repeated neighbor-to-neighbor hops, these models can capture long-range dependencies across the graph better than purely neighborhood-based GNNs.
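The contrast with neighborhood aggregation is easiest to see in code: in a full-attention graph-transformer layer every node attends to every other node, so graph structure has to be injected some other way. Below is a minimal single-head NumPy sketch. The additive `bias` argument is modeled loosely on Graphormer's idea of biasing attention scores with shortest-path distances, but the simple `-spd` bias used here is our own illustrative choice, not Graphormer's learned encoding.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def graph_self_attention(h, w_q, w_k, w_v, bias=None):
    """Single-head self-attention in which every node attends to every node.

    h:    (n, d) node representations
    bias: optional (n, n) additive score bias that encodes graph structure
    """
    q, k, v = h @ w_q, h @ w_k, h @ w_v
    scores = q @ k.T / np.sqrt(k.shape[1])  # scaled dot-product scores
    if bias is not None:
        scores = scores + bias
    attn = softmax(scores, axis=-1)  # (n, n); each row sums to 1
    return attn @ v, attn

rng = np.random.default_rng(0)
n, d = 4, 8
h = rng.normal(size=(n, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
# Shortest-path distances on the path graph 0-1-2-3.
spd = np.abs(np.arange(n)[:, None] - np.arange(n)[None, :])
out, attn = graph_self_attention(h, w_q, w_k, w_v, bias=-1.0 * spd)
```

On the path graph 0-1-2-3, node 0 assigns nonzero attention to node 3 after a single layer, whereas one neighborhood-aggregation layer would only mix node 0 with node 1.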

Self-Supervised Learning on Graphs

While GNNs are powerful representational models, their performance is often bottlenecked by the lack of large labeled datasets required for supervised training. Self-supervised learning has emerged as a promising paradigm to pre-train GNNs on unlabeled graph data by leveraging pretext tasks that only require the intrinsic graph structure and node features.

Some common pretext tasks used for self-supervised GNN pre-training include:

- Node Property Prediction: Randomly masking or corrupting a portion of the node attributes/features and tasking the GNN to reconstruct them.

- Edge/Link Prediction: Learning to predict whether an edge exists between a pair of nodes, often based on random edge masking.

- Contrastive Learning: Maximizing the similarity between different augmented views of the same graph (or node) while pushing apart views of different graphs.

- Mutual Information Maximization: Maximizing the mutual information between local node representations and a target representation like the global graph embedding.
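The first two pretext tasks both begin with a corruption step that produces inputs and targets. A minimal NumPy sketch, assuming features are stored in an `(n, d)` array and the graph is an undirected edge list; zeroing masked rows and uniform negative sampling are simplifying choices, and the function names are hypothetical.

```python
import numpy as np

def mask_node_features(x, mask_rate, rng):
    """Feature masking: hide a random subset of node rows.

    Returns the corrupted features, the masked node ids, and the original
    rows, which serve as reconstruction targets for the GNN.
    """
    n = x.shape[0]
    num_masked = max(1, int(mask_rate * n))
    masked = rng.choice(n, size=num_masked, replace=False)
    x_corrupted = x.copy()
    x_corrupted[masked] = 0.0  # zeroing is one choice; a learnable mask token is another
    return x_corrupted, masked, x[masked]

def mask_edges(edges, mask_rate, num_nodes, rng):
    """Edge masking: hold out random edges as positive targets and sample the
    same number of non-adjacent node pairs as negatives.

    Assumes an undirected, sparse graph (negative sampling loops until it
    finds enough non-edges).
    """
    edges = np.asarray(edges)
    perm = rng.permutation(len(edges))
    num_masked = max(1, int(mask_rate * len(edges)))
    held_out, kept = edges[perm[:num_masked]], edges[perm[num_masked:]]
    existing = {(int(u), int(v)) for u, v in edges} | {(int(v), int(u)) for u, v in edges}
    negatives = []
    while len(negatives) < num_masked:
        u, v = map(int, rng.integers(0, num_nodes, size=2))
        if u != v and (u, v) not in existing:
            negatives.append((u, v))
    return kept, held_out, np.array(negatives)

rng = np.random.default_rng(0)
x = np.arange(12, dtype=float).reshape(6, 2)
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)]
x_in, masked_ids, targets = mask_node_features(x, 0.5, rng)
kept, pos, neg = mask_edges(edges, 0.34, num_nodes=6, rng=rng)
```

The GNN then runs on `x_in` and the `kept` edges, and is trained to reconstruct `targets` and to score `pos` pairs above `neg` pairs.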

Pretext tasks like these allow the GNN to extract meaningful structural and semantic patterns from unlabeled graph data during pre-training. The pre-trained GNN can then be fine-tuned on relatively small labeled subsets to perform well on downstream tasks such as node classification, link prediction, and graph classification.
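Fine-tuning can update the entire pre-trained GNN; its simplest variant, often called linear probing, freezes the encoder and trains only a classifier on its output embeddings. The sketch below shows that variant as plain NumPy softmax regression. The clustered `emb` array is synthetic and stands in for embeddings from a hypothetical pre-trained encoder; the function names and hyperparameters are assumptions.

```python
import numpy as np

def fit_linear_probe(embeddings, labels, num_classes, lr=0.5, epochs=200):
    """Fit a softmax-regression head on frozen embeddings with gradient descent."""
    n, d = embeddings.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    y = np.eye(num_classes)[labels]  # one-hot targets
    for _ in range(epochs):
        logits = embeddings @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - y) / n  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * embeddings.T @ grad
        b -= lr * grad.sum(axis=0)
    return w, b

def predict(embeddings, w, b):
    return np.argmax(embeddings @ w + b, axis=1)

# Synthetic stand-in for embeddings produced by a pre-trained GNN encoder.
rng = np.random.default_rng(0)
emb = np.vstack([rng.normal(-2.0, 0.5, size=(50, 4)),
                 rng.normal(2.0, 0.5, size=(50, 4))])
labels = np.array([0] * 50 + [1] * 50)
few = np.r_[0:5, 50:55]  # only 10 labeled nodes
w, b = fit_linear_probe(emb[few], labels[few], num_classes=2)
accuracy = (predict(emb, w, b) == labels).mean()
```

The point of the example is the data flow: the encoder's weights never change, and only ten labels are used, which works when pre-training has already separated the classes in embedding space.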

By leveraging self-supervision, GNNs pre-trained on large unlabeled datasets often generalize better, are more robust to distribution shifts, and are more label-efficient than models trained from scratch. However, traditional GNN-based self-supervised methods still have key limitations, and in the next section we explore how LLMs can help address them.

Enhancing Graph ML with Large Language Models

Integration of Graphs and LLM – source

The remarkable capabilities of LLMs in understanding natural language, reasoning, and few-shot learning present opportunities to enhance multiple aspects of graph machine learning pipelines. We explore some key research directions in this space:

A key challenge in applying GNNs is obtaining high-quality feature representations for nodes and edges, especially when they carry rich textual attributes such as descriptions, titles, or abstracts. Traditionally, these attributes have been encoded with simple bag-of-words representations or pre-trained word embeddings, which often fail to capture nuanced semantics.

Recent work has shown the benefit of using large language models as text encoders to construct richer node/edge features before passing them to the GNN. For example, Chen et al. use LLMs such as GPT-3 to encode textual node attributes and report significant gains over traditional word embeddings on node classification tasks.
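In practice, the traditional and LLM-based pipelines differ mainly in which encoder turns each node's text into a vector, so the sketch below keeps the encoder pluggable. It is a minimal example assuming a whitespace-tokenized bag-of-words baseline; `llm_embed` in the final comment is a hypothetical stand-in for whatever LLM embedding call you use, not a real API.

```python
from typing import Callable, List

import numpy as np

def bag_of_words_encoder(vocab: List[str]) -> Callable[[str], np.ndarray]:
    """Traditional baseline: count occurrences of each vocabulary word."""
    index = {word: i for i, word in enumerate(vocab)}

    def encode(text: str) -> np.ndarray:
        vec = np.zeros(len(vocab))
        for token in text.lower().split():
            if token in index:
                vec[index[token]] += 1.0
        return vec

    return encode

def build_node_features(texts: List[str],
                        encode: Callable[[str], np.ndarray]) -> np.ndarray:
    """Encode each node's text attribute and stack the vectors into the
    (num_nodes, dim) feature matrix that a GNN layer consumes."""
    return np.stack([encode(t) for t in texts])

titles = ["graph neural networks for molecules",
          "language models for text",
          "graph language models"]
bow = bag_of_words_encoder(["graph", "language", "models", "molecules"])
x_bow = build_node_features(titles, bow)
# x_llm = build_node_features(titles, llm_embed)  # llm_embed: hypothetical LLM embedding function
```

Bag-of-words ignores every word outside its vocabulary and all word order, which is the gap an LLM encoder is meant to close; the rest of the GNN pipeline stays unchanged.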

Beyond serving as better text encoders, LLMs can generate additional information from the original text attributes to augment node features. TAPE prompts an LLM to produce candidate labels and explanations for each node and uses these as additional features. KEA uses an LLM to extract terms from the text attributes and to obtain detailed descriptions of those terms, which augment the original features.
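Both augmentation strategies can be sketched as prompt templates wrapped around an LLM call. Everything here is illustrative: the prompt wording, the function names, and the `fake_llm` stub (which lets the sketch run offline) are assumptions rather than the prompts or code used by TAPE or KEA, and concatenating the encoded texts is just one simple way to combine them.

```python
from typing import Callable, Dict, List

import numpy as np

def explanation_prompt(text: str, label_names: List[str]) -> str:
    """TAPE-style request: predict a label and explain it (wording assumed)."""
    return (
        f"Text: {text}\n"
        f"Which category fits best: {', '.join(label_names)}? "
        "Give your prediction and explain your reasoning."
    )

def term_prompt(text: str) -> str:
    """KEA-style request: extract key terms and describe them (wording assumed)."""
    return f"Text: {text}\nList the technical terms in this text and describe each one."

def augment_node_texts(texts: List[str], llm: Callable[[str], str],
                       label_names: List[str]) -> List[Dict[str, str]]:
    """Return each node's original text alongside two LLM-generated augmentations."""
    return [
        {
            "original": t,
            "explanation": llm(explanation_prompt(t, label_names)),
            "term_descriptions": llm(term_prompt(t)),
        }
        for t in texts
    ]

def combine_features(augmented: List[Dict[str, str]],
                     encode: Callable[[str], np.ndarray]) -> np.ndarray:
    """Encode every text field per node and concatenate into one feature row."""
    fields = ("original", "explanation", "term_descriptions")
    return np.stack([np.concatenate([encode(a[f]) for f in fields]) for a in augmented])

def fake_llm(prompt: str) -> str:
    # Offline stand-in for a real LLM call: echoes the prompt's first line.
    return "stub response for: " + prompt.splitlines()[0]

texts = ["GNNs for molecular property prediction", "Prompting LLMs for QA"]
augmented = augment_node_texts(texts, fake_llm, ["chemistry", "nlp"])
# Toy encoder (word count) so the example runs; swap in a real text encoder.
x = combine_features(augmented, lambda s: np.array([len(s.split())], dtype=float))
```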

By improving the quality and expressiveness of input features, LLMs can impart their superior natural language understanding capabilities to GNNs, boosting performance on downstream tasks.