OpenAI has released GPT-4o, its most advanced language model to date. The “o” stands for “omni,” a reference to the model’s ability to work across several kinds of input and output within a single system.

At the heart of GPT-4o lies its native multimodal nature: it accepts text, audio, images, and video as input and can generate text, audio, and images in response. OpenAI describes it as its first model to combine these modalities in a single network, and it promises to reshape how we interact with AI assistants.

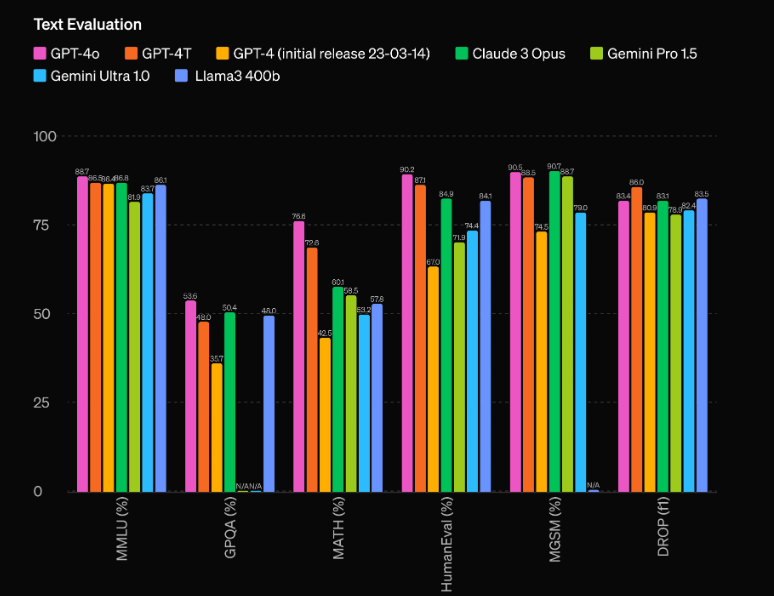

But GPT-4o is more than just a multimodal system. OpenAI reports a substantial performance improvement over its predecessor, GPT-4, and results ahead of competing models such as Gemini 1.5 Pro, Claude 3, and Llama 3 70B. Let’s look at what sets this model apart.

Unparalleled Performance and Efficiency

One of the most impressive aspects of GPT-4o is its benchmark performance. According to OpenAI’s evaluations, the model holds a 60-point Elo lead over the previous top performer, GPT-4 Turbo. Elo ratings are derived from head-to-head comparisons, so a lead of this size means GPT-4o’s answers were preferred noticeably more often than GPT-4 Turbo’s.
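To put a 60-point Elo gap in perspective, the standard Elo formula converts a rating difference into an expected head-to-head win rate. The sketch below assumes the conventional 400-point logistic scale; the function name is illustrative, and the article does not say which rating variant produced the figure.

```python
def elo_expected_score(rating_diff: float) -> float:
    """Expected score of the higher-rated side for a given Elo rating difference.

    Assumes the conventional logistic Elo curve with a 400-point scale.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_diff / 400.0))


if __name__ == "__main__":
    # A 60-point lead, as quoted for GPT-4o over GPT-4 Turbo.
    print(f"Expected win rate: {elo_expected_score(60):.1%}")
```

Under that assumption, a 60-point lead corresponds to the higher-rated model being preferred in roughly 58 to 59 percent of pairwise comparisons: a clear edge, but far from winning every matchup.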

But raw performance isn’t the only area where GPT-4o shines. Through the API, the model runs at twice the speed of GPT-4 Turbo while costing half as much. This combination of stronger results and lower cost makes GPT-4o an attractive option for developers and businesses looking to integrate cutting-edge AI capabilities into their applications.
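A quick back-of-the-envelope calculation shows what "twice the speed at half the cost" means for a concrete workload. The prices and throughput figures below are placeholder assumptions chosen only to reflect the 2:1 ratios, not quoted rates, and `ModelProfile` and `estimate` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    usd_per_million_tokens: float  # hypothetical blended price
    tokens_per_second: float       # hypothetical generation speed


def estimate(profile: ModelProfile, total_tokens: int) -> tuple[float, float]:
    """Return (cost in USD, generation time in seconds) for a workload."""
    cost = total_tokens / 1_000_000 * profile.usd_per_million_tokens
    seconds = total_tokens / profile.tokens_per_second
    return cost, seconds


# Placeholder numbers: only the 2x ratios between them come from the article.
BASELINE = ModelProfile("gpt-4-turbo", usd_per_million_tokens=10.0, tokens_per_second=50.0)
FASTER = ModelProfile("gpt-4o", usd_per_million_tokens=5.0, tokens_per_second=100.0)

if __name__ == "__main__":
    for profile in (BASELINE, FASTER):
        cost, seconds = estimate(profile, total_tokens=2_000_000)
        print(f"{profile.name}: ${cost:.2f}, {seconds / 3600:.1f} h")
```

Substituting current prices and measured throughput for the placeholders gives a more realistic comparison for a specific application.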

Multimodal Capabilities: Blending Text, Audio, and Vision

Perhaps the most significant aspect of GPT-4o is its native multimodal design: a single model handles text, audio, and vision, rather than handing each modality to a separate system.

With GPT-4o, users can engage in natural, real-time conversations using speech, with the model recognizing and responding to audio inputs with little perceptible delay. But the capabilities don’t stop there. GPT-4o can also interpret and generate visual content, opening up applications ranging from image analysis and generation to video understanding.

One of the most impressive demonstrations of GPT-4o’s multimodal capabilities is its ability to analyze a scene or image in real-time, accurately describing and interpreting the visual elements it perceives. This feature has profound implications for applications such as assistive technologies for the visually impaired, as well as in fields like security, surveillance, and automation.
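For developers, image analysis of this kind is typically requested by sending the image alongside a text question in one chat message. The sketch below builds such a message using the content-parts format of the Chat Completions API; `build_image_question` is a hypothetical helper name, and the image is embedded inline as a base64 data URL.

```python
import base64


def build_image_question(
    question: str, image_bytes: bytes, mime_type: str = "image/png"
) -> list[dict]:
    """Build a chat `messages` list pairing a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {
                    "type": "image_url",
                    # The image travels inside the request as a data URL.
                    "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                },
            ],
        }
    ]
```

The returned list can be passed as the `messages` argument of a chat request that names a GPT-4o model. A publicly reachable image URL can be used in place of the data URL.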

But GPT-4o’s multimodal capabilities extend beyond just understanding and generating content across different modalities. The model can also seamlessly blend these modalities, creating truly immersive and engaging experiences. For example, during OpenAI’s live demo, GPT-4o was able to generate a song based on input conditions, blending its understanding of language, music theory, and audio generation into a cohesive and impressive output.

Using GPT-4o with Python

    import asyncio

    # Note: this uses the legacy interface and requires openai<1.0;
    # openai.ChatCompletion.acreate was removed in version 1.0.
    import openai

    # Replace with your actual API key
    OPENAI_API_KEY = "your_openai_api_key_here"


    # Function to extract the response content
    def get_response_content(response_dict, exclude_tokens=None):
        """Return the first choice's message text with any exclude_tokens removed."""
        if exclude_tokens is None:
            exclude_tokens = []
        if response_dict and response_dict.get("choices") and len(response_dict["choices"]) > 0:
            # Guard against a missing (None) content field before stripping
            content = (response_dict["choices"][0]["message"]["content"] or "").strip()
            if content:
                for token in exclude_tokens:
                    content = content.replace(token, '')
                return content
        raise ValueError(f"Unable to resolve response: {response_dict}")


    # Asynchronous function to send a request to the OpenAI chat API
    async def send_openai_chat_request(prompt, model_name, temperature=0.0):
        """Send a single user message and return the model's reply text."""
        openai.api_key = OPENAI_API_KEY
        message = {"role": "user", "content": prompt}
        response = await openai.ChatCompletion.acreate(
            model=model_name,
            messages=[message],
            temperature=temperature,
        )
        return get_response_content(response)


    # Example usage
    async def main():
        prompt = "Hello!"
        model_name = "gpt-4o-2024-05-13"
        response = await send_openai_chat_request(prompt, model_name)
        print(response)


    if __name__ == "__main__":
        asyncio.run(main())

A few notes on this code:

- It imports the openai module directly rather than wrapping it in a custom class.

- get_response_content extracts the text of the first choice from the response and raises a ValueError if there is none.

- openai.ChatCompletion.acreate is the asynchronous chat method in versions of the OpenAI Python library before 1.0; from version 1.0 onward it is replaced by the AsyncOpenAI client.

- main demonstrates how to call send_openai_chat_request.

Please note that you need to replace "your_openai_api_key_here" with your actual OpenAI API key for the code to work correctly.
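The snippet above targets the pre-1.0 interface of the OpenAI Python library. For readers on version 1.0 or later, a roughly equivalent sketch using the AsyncOpenAI client might look like the following. The helper names `extract_text` and `ask` are illustrative, and reading the key from an `OPENAI_API_KEY` environment variable is an assumption of this example rather than something the original code does.

```python
import os


def extract_text(response) -> str:
    """Return the stripped text of the first choice; raise if it is missing or empty."""
    choices = getattr(response, "choices", None)
    if choices:
        content = (choices[0].message.content or "").strip()
        if content:
            return content
    raise ValueError(f"Unable to resolve response: {response!r}")


async def ask(
    prompt: str,
    model_name: str = "gpt-4o-2024-05-13",
    temperature: float = 0.0,
) -> str:
    """Send one user message through the 1.x async client and return the reply text."""
    # Imported lazily so extract_text can be used without the package installed.
    from openai import AsyncOpenAI

    client = AsyncOpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = await client.chat.completions.create(
        model=model_name,
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,
    )
    return extract_text(response)
```

With the package installed and the environment variable set, the function can be run with `asyncio.run(ask("Hello!"))`. In the 1.x library, responses are objects with attributes rather than dictionaries, which is why `extract_text` uses attribute access.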

Emotional Intelligence and Natural Interaction

Another groundbreaking aspect of GPT-4o is its ability to interpret and generate emotional responses, a capability that has long eluded AI systems. During the live demo, OpenAI engineers showcased how GPT-4o could accurately detect and respond to the emotional state of the user, adjusting its tone and responses accordingly.

In one particularly striking example, an engineer pretended to hyperventilate, and GPT-4o immediately recognized the signs of distress in their voice and breathing patterns. The model then calmly guided the engineer through a series of breathing exercises, modulating its tone to a soothing and reassuring manner until the simulated distress had subsided.

This ability to interpret and respond to emotional cues is a significant step towards truly natural and human-like interactions with AI systems. By understanding the emotional context of a conversation, GPT-4o can tailor its responses in a way that feels more natural and empathetic, ultimately leading to a more engaging and satisfying user experience.

Accessibility

OpenAI has decided to make GPT-4o available to all ChatGPT users, including those on the free tier. This sets a new standard in a market where competitors typically reserve their most capable models for paying subscribers.

While OpenAI will still offer a paid “ChatGPT Plus” tier with benefits such as higher usage limits and priority access, the core capabilities of GPT-4o will be available to everyone at no cost.

Real-World Applications and Future Developments

The implications of GPT-4o’s capabilities are vast and far-reaching, with potential applications spanning numerous industries and domains. In the realm of customer service and support, for instance, GPT-4o could revolutionize how businesses interact with their customers, providing natural, real-time assistance across multiple modalities, including voice, text, and visual aids.

In the field of education, GPT-4o could be leveraged to create immersive and personalized learning experiences, with the model adapting its teaching style and content delivery to suit each individual student’s needs and preferences. Imagine a virtual tutor that can not only explain complex concepts through natural language but also generate visual aids and interactive simulations on the fly.

The entertainment industry is another area where GPT-4o’s multimodal capabilities could shine. From generating dynamic and engaging narratives for video games and movies to composing original music and soundtracks, the possibilities are endless.

Looking ahead, OpenAI has ambitious plans to continue expanding the capabilities of its models, with a focus on enhancing reasoning abilities and further integrating personalized data. One tantalizing prospect is the integration of GPT-4o with large language models trained on specific domains, such as medical or legal knowledge bases. This could pave the way for highly specialized AI assistants capable of providing expert-level advice and support in their respective fields.

Another exciting avenue for future development is the integration of GPT-4o with other AI models and systems, enabling seamless collaboration and knowledge sharing across different domains and modalities. Imagine a scenario where GPT-4o could leverage the capabilities of cutting-edge computer vision models to analyze and interpret complex visual data, or collaborate with robotic systems to provide real-time guidance and support in physical tasks.

Ethical Considerations and Responsible AI

As with any powerful technology, the development and deployment of GPT-4o and similar AI models raise important ethical considerations. OpenAI has been vocal about its commitment to responsible AI development, implementing various safeguards and measures to mitigate potential risks and misuse.

One key concern is the potential for AI models like GPT-4o to perpetuate or amplify existing biases and harmful stereotypes present in the training data. To address this, OpenAI says it applies debiasing techniques and filters to limit the propagation of such biases in the model’s outputs.

Another critical issue is the potential misuse of GPT-4o’s capabilities for malicious purposes, such as generating deepfakes, spreading misinformation, or engaging in other forms of digital manipulation. OpenAI says it uses content filtering and moderation systems to detect and prevent the use of its models for harmful or illegal activities.

Furthermore, the company has emphasized the importance of transparency and accountability in AI development, regularly publishing research papers and technical details about its models and methodologies. This commitment to openness and scrutiny from the broader scientific community is crucial in fostering trust and ensuring the responsible development and deployment of AI technologies like GPT-4o.

Conclusion

OpenAI’s GPT-4o represents a true paradigm shift in the field of artificial intelligence, ushering in a new era of multimodal, emotionally intelligent, and natural human-machine interaction. With its unparalleled performance, seamless integration of text, audio, and vision, and disruptive pricing model, GPT-4o promises to democratize access to cutting-edge AI capabilities and transform how we interact with technology on a fundamental level.

While the implications and potential applications of this groundbreaking model are vast and exciting, it is crucial that its development and deployment are guided by a firm commitment to ethical principles and responsible AI practices.