A very impressive new model from Google DeepMind can follow language instructions across a wide range of 3D environments.

Next Week in The Sequence:

- Edge 379: We start the week with a summary of our long series about LLM reasoning. Next, we kick off an awesome series about autonomous agents.
- Edge 380: To complement our recent series, we discuss SELF-Discover, a new LLM reasoning method pioneered by DeepMind.

You can subscribe to The Sequence below:

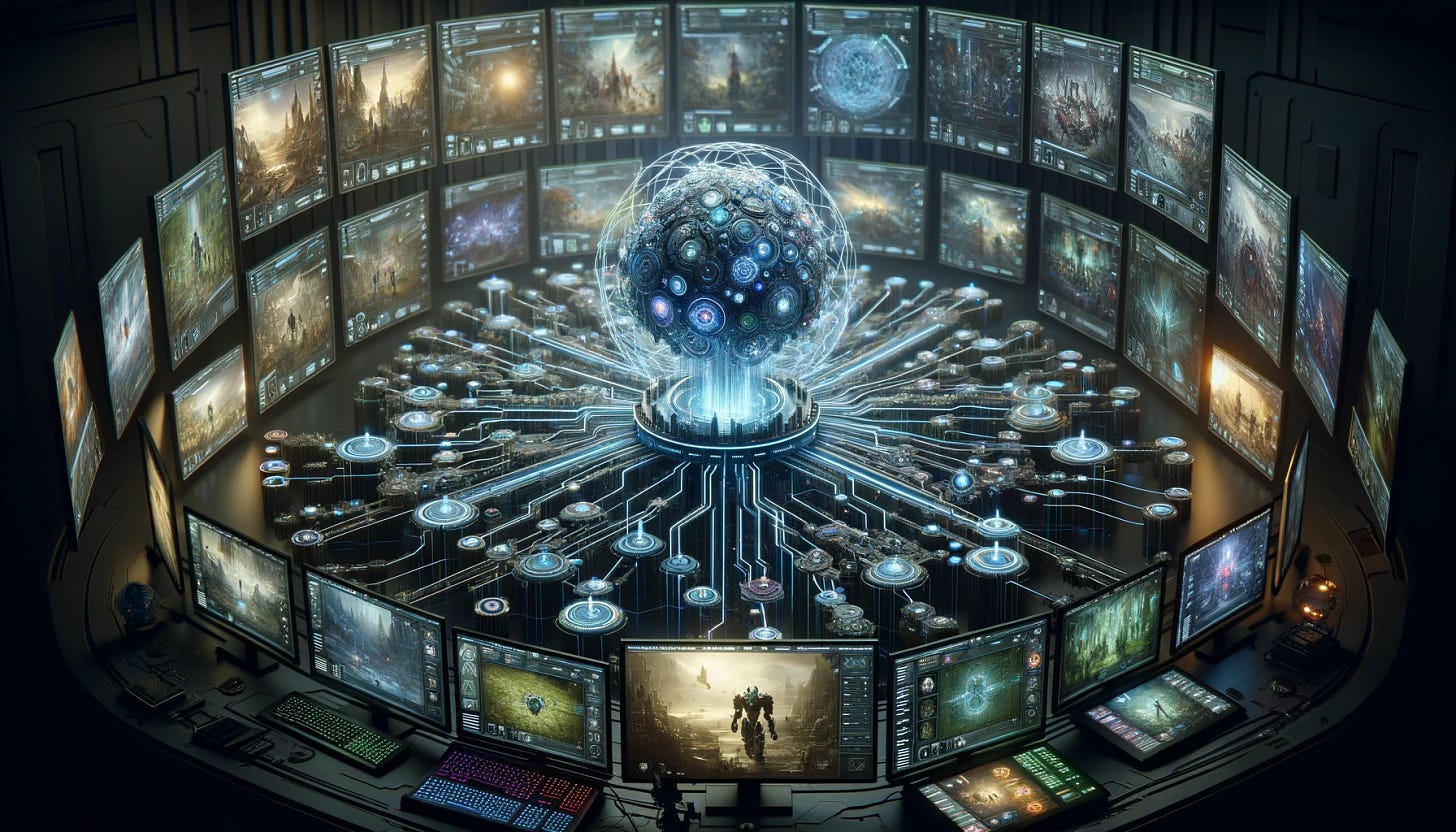

📝 Editorial: SIMA, One AI for Navigating Any 3D Environment

Video games have long served as some of the best environments for training AI agents. Since their early days, AI labs like OpenAI and DeepMind have built agents that mastered video games such as Atari games, Dota 2, StarCraft, and many others. The principles behind many of these agents have since been applied to embodied AI, self-driving cars, and other domains that require taking actions in different environments. However, most AI breakthroughs in 3D game environments have been constrained to a single game or a small number of games. Building more general agents is really hard, but imagine if we could build agents that understand many gaming worlds at once and follow instructions like a human player.

Last week, Google DeepMind unveiled its work on the Scalable, Instructable, Multiworld Agent (SIMA). The goal of the project is to develop instructable agents that can interact with any 3D environment just like a human, by following simple language instructions. This might not seem like a big deal until we consider that game-playing agents have typically relied on expensive reinforcement learning pipelines tailored to individual games. Language is the most powerful, yet simplest, abstraction for communicating instructions about the world or, in this case, a 3D virtual world. The magic of SIMA is its ability to translate those abstract instructions into the mouse and keyboard actions used to navigate and act in an environment.

DeepMind trained SIMA on a large dataset of gameplay paired with the corresponding mouse and keyboard interactions. Guided by language instructions, SIMA was able to carry out tasks in 3D environments it hadn’t seen before.

Just as LLMs can apply language across many domains, SIMA can apply actions across many environments. Models like SIMA could have profound implications for embodied AI, simulations, and many other settings in which agents need to carry out physical tasks.

Another impressive achievement by DeepMind.

🔎 ML Research

SIMA

Google DeepMind published a paper introducing the Scalable, Instructable, Multiworld Agent (SIMA), a generalist agent for 3D virtual environments. SIMA is able to understand many 3D worlds and carry out tasks within them —> Read more.

Chain-of-Table

Google Research published a paper detailing Chain-of-Table, an LLM reasoning method optimized for table understanding tasks. The method has the LLM iteratively transform a table into smaller, more manageable segments, which it then uses to carry out different tasks —> Read more.
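To make the idea of chaining table operations concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Google's implementation: the helpers `select_rows`, `select_columns`, and `run_chain` are hypothetical, the rider data is made up, and a fixed hand-written plan stands in for the LLM that, in Chain-of-Table, chooses each next operation. Each intermediate table is recorded, since those shrinking tables play the role of reasoning steps.

```python
from typing import Any, Callable, Dict, List, Tuple

# A table is a dict with a header ("columns") and a list of rows.
Table = Dict[str, List[Any]]
# Each plan step pairs an operation with its argument.
Step = Tuple[Callable[[Table, Any], Table], Any]


def select_rows(table: Table, row_indices: List[int]) -> Table:
    """Keep only the rows at the given positions (hypothetical helper)."""
    return {"columns": list(table["columns"]),
            "rows": [table["rows"][i] for i in row_indices]}


def select_columns(table: Table, names: List[str]) -> Table:
    """Keep only the named columns, in the given order (hypothetical helper)."""
    positions = [table["columns"].index(name) for name in names]
    return {"columns": list(names),
            "rows": [[row[p] for p in positions] for row in table["rows"]]}


def run_chain(table: Table, plan: List[Step]) -> Tuple[Table, List[Table]]:
    """Apply each step in order, recording every intermediate table.

    In Chain-of-Table the LLM picks the next operation at each step;
    here a fixed plan stands in for that planner (an assumption).
    """
    history = [table]
    for operation, argument in plan:
        table = operation(table, argument)
        history.append(table)
    return table, history


# Hypothetical data and question: "Which country is the top-ranked rider from?"
riders: Table = {
    "columns": ["Rider", "Country", "Rank"],
    "rows": [["Rider A", "ESP", 2], ["Rider B", "ITA", 1], ["Rider C", "FRA", 3]],
}
plan: List[Step] = [(select_rows, [1]), (select_columns, ["Country"])]
final, history = run_chain(riders, plan)
print(final)  # {'columns': ['Country'], 'rows': [['ITA']]}
```

The final one-cell table is small enough for an LLM to read the answer directly, which is the intuition behind shrinking the table step by step.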

DeepSeek-VL

DeepSeek-AI published a paper detailing DeepSeek-VL, an open-source vision-language model optimized for real-world applications. Specifically, DeepSeek-VL excels in areas such as screenshot understanding, OCR, charts, and other specific data structures common in vision-language apps —> Read more.

LLMs and Graph Data

Google Research published a paper detailing a method to teach LLMs to reason through graph data. The paper also includes GraphQA, a benchmark designed to evaluate LLMs on graph reasoning tasks —> Read more.

DocFormer v2

Amazon Science published a paper detailing DocFormerv2, a transformer-based model optimized for understanding documents. DocFormerv2 can make sense of visual and textual information in a way that mimics human reasoning —> Read more.

🤖 Cool AI Tech Releases

Command R

Cohere released Command R, a model optimized for RAG and tool usage —> Read more.

Claude 3 Haiku

Anthropic released Claude 3 Haiku, the fastest and most affordable model in its Claude 3 family —> Read more.

🛠 Real World ML

Meta’s Gen AI Infrastructure

Meta shares some details about the compute infrastructure behind its gen AI workloads —> Read more.

Inside Einstein

Salesforce discusses how the Einstein platform manages data and AI workloads —> Read more.

ML Infra at Netflix

Netflix shares some details about its Metaflow platform and complementary integrations used in its ML workloads —> Read more.

LLM-Reviews at Yelp

Yelp details the LLM-based architecture it uses to detect inappropriate language in reviews —> Read more.

Semantic Search at Walmart

Walmart Global Tech shares some details about its semantic search architecture —> Read more.

📡AI Radar

- Databricks invests in open-source AI darling Mistral.
- Cognition unveiled a very impressive demo of a code generation foundation model.
- Applied Intuition raised $250 million for its AI-powered products for the automotive industry.
- SoftBank is exploring a new investment in Mistral.
- Zendesk acquired Ultimate to boost its AI-based customer service suite.
- Pienso raised $10 million in new funding for its low-code AI training platform.
- Nanonets raised $29 million for applying AI to business workflows.
- Axion Ray announced a $17.5 million Series A for its AI command center for manufacturing.
- Empathy raised $47 million to use AI in the “compassionate economy”.