Over the past few years, diffusion models have achieved massive success and recognition for image and video generation tasks. Video diffusion models, in particular, have been gaining significant attention due to their ability to produce videos with high coherence as well as fidelity. These models generate high-quality videos by employing an iterative denoising process in their architecture that gradually transforms high-dimensional Gaussian noise into real data.

Stable Diffusion is one of the most representative models for image generation tasks, relying on a Variational AutoEncoder (VAE) to map between the real image and down-sampled latent features. This allows the model to reduce generation costs, while the cross-attention mechanism in its architecture facilitates text-conditioned image generation. More recently, the Stable Diffusion framework has become the foundation for several plug-and-play adapters that enable more innovative and effective image or video generation. However, the iterative generative process employed by most video diffusion models makes generation time-consuming and comparatively costly, limiting its applications.

In this article, we will talk about AnimateLCM, a personalized diffusion model with adapters aimed at generating high-fidelity videos with minimal steps and computational costs. The AnimateLCM framework is inspired by the Consistency Model, which accelerates sampling with minimal steps by distilling pre-trained image diffusion models. Furthermore, the successful extension of the Consistency Model, the Latent Consistency Model (LCM), facilitates conditional image generation. Instead of conducting consistency learning directly on the raw video dataset, the AnimateLCM framework proposes a decoupled consistency learning strategy. This strategy decouples the distillation of motion generation priors and image generation priors, allowing the model to enhance the visual quality of the generated content and improve training efficiency simultaneously. Additionally, the AnimateLCM model proposes training adapters from scratch or adapting existing adapters to its distilled video consistency model. This makes it possible to combine plug-and-play adapters from the Stable Diffusion family to achieve different functions without harming sampling speed.

This article aims to cover the AnimateLCM framework in depth. We explore the mechanism, the methodology, and the architecture of the framework, along with its comparison with state-of-the-art image and video generation frameworks. So, let’s get started.

Diffusion models have become the go-to framework for image and video generation owing to their strong generative capabilities. Most diffusion models rely on an iterative denoising process that gradually transforms high-dimensional Gaussian noise into real data. Although this method delivers satisfactory results, the iterative process and the number of sampling steps slow generation and add to the computational requirements, making diffusion models much slower than other generative frameworks such as Generative Adversarial Networks (GANs). In the past few years, Consistency Models (CMs) have been proposed as an alternative to iterative sampling that speeds up generation without increasing the computational requirements.

The highlight of consistency models is that they learn consistency mappings that maintain the self-consistency of the trajectories defined by pre-trained diffusion models. This allows them to generate high-quality images in very few steps and removes the need for computation-intensive iterations. Furthermore, the Latent Consistency Model (LCM), built on top of the Stable Diffusion framework, can be integrated into the web user interface with existing adapters to provide additional functionality such as real-time image-to-image translation. In comparison, although existing video diffusion models deliver acceptable results, sampling acceleration for video still has considerable room for progress, and it matters greatly because video generation is so computationally expensive.
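The self-consistency property mentioned above can be written compactly. The notation here is the one commonly used for consistency models and is an assumption, not something this article defines: $f_\theta$ is the learned consistency function, $x_t$ is a point at time $t$ on a probability flow ODE (PF-ODE) trajectory, and $\epsilon$ is a small positive starting time.

```latex
f_\theta(x_t, t) = f_\theta(x_{t'}, t') \quad \text{for all } t, t' \in [\epsilon, T] \text{ on the same trajectory},
\qquad
f_\theta(x_\epsilon, \epsilon) = x_\epsilon
```

In words, every point on a trajectory maps to the same clean sample, which is why a single evaluation of $f_\theta$ can stand in for many denoising steps.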

That leads us to AnimateLCM, a high-fidelity video generation framework that needs only a minimal number of steps. Following the Latent Consistency Model, the AnimateLCM framework treats the reverse diffusion process as solving a classifier-free guidance (CFG) augmented probability flow ODE, and trains the model to predict the solution of that ODE directly in the latent space. However, conducting consistency learning directly on raw video data requires substantial training and computational resources and often leads to poor quality. The AnimateLCM framework therefore proposes a decoupled consistency learning strategy that separates the consistency distillation of motion generation priors from that of image generation priors.

The AnimateLCM framework first conducts consistency distillation to adapt the base image diffusion model into an image consistency model, and then applies 3D inflation to both the image consistency model and the image diffusion model so that they accommodate 3D features. Finally, it obtains the video consistency model by conducting consistency distillation on video data. To alleviate the feature corruption that can arise in this process, the framework also proposes an initialization strategy. Since the AnimateLCM framework is built on top of the Stable Diffusion framework, it can replace the spatial weights of its trained video consistency model with publicly available personalized image diffusion weights to achieve innovative generation results.

Additionally, to train specific adapters from scratch or to make publicly available adapters fit better, the AnimateLCM framework proposes an effective acceleration strategy for adapters that does not require training specific teacher models.

The contributions of the AnimateLCM framework can be summarized as follows. The framework aims to achieve fast, high-fidelity video generation. To do so, it proposes a decoupled distillation strategy that separates the motion and image generation priors, resulting in better generation quality and improved training efficiency.

AnimateLCM: Methodology and Architecture

At its core, the AnimateLCM framework draws heavy inspiration from diffusion models and sampling acceleration strategies. Diffusion models, also known as score-based generative models, have demonstrated remarkable image generation capabilities. Under the guidance of the score direction, the iterative sampling strategy implemented by diffusion models gradually denoises noise-corrupted data. The effectiveness of image diffusion models is one of the major reasons why most video diffusion models are built on them, by training added temporal layers. Sampling acceleration strategies, on the other hand, tackle the slow generation speed of diffusion models. Distillation-based acceleration methods tune the original diffusion weights with a refined architecture or scheduler to increase generation speed.

Moving along, the AnimateLCM framework is built on top of the Stable Diffusion model, which allows it to reuse the relevant notions. The model treats the discrete forward diffusion process as a continuous-time Variance Preserving SDE. Furthermore, the Stable Diffusion model is an extension of the Denoising Diffusion Probabilistic Model (DDPM), in which the training data point is perturbed gradually by a discrete Markov chain with a perturbation kernel, so that the noisy data at each timestep follows a Gaussian distribution centered on a scaled copy of the original data point.
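For reference, the perturbation kernel and its continuous-time counterpart are usually written as follows. The symbols are the standard DDPM ones and are an assumption here, since the article does not define them: $\beta_s$ is the noise schedule, $\bar{\alpha}_t$ is the cumulative signal retention, and $w$ is a standard Wiener process.

```latex
q(x_t \mid x_0) = \mathcal{N}\!\left(x_t;\ \sqrt{\bar{\alpha}_t}\, x_0,\ (1 - \bar{\alpha}_t)\, I\right),
\qquad
\bar{\alpha}_t = \prod_{s=1}^{t} (1 - \beta_s)

\mathrm{d}x = -\tfrac{1}{2}\,\beta(t)\, x\, \mathrm{d}t + \sqrt{\beta(t)}\, \mathrm{d}w
```

The first line is the discrete Markov-chain view. The second is the Variance Preserving SDE that the discrete chain approaches as the number of steps grows.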

To achieve high-fidelity video generation with a minimal number of steps, the AnimateLCM framework tames Stable Diffusion-based video models to follow the self-consistency property. The overall training structure of the framework consists of a decoupled consistency learning strategy for effective consistency learning, and teacher-free adaptation for adapters.

Transition from Diffusion Models to Consistency Models

The AnimateLCM framework introduces its own adaptation of the Stable Diffusion model (DM) to the Consistency Model (CM), following the design of the Latent Consistency Model (LCM). It is worth noting that Stable Diffusion models typically predict the noise added to the samples; that is, they are essentially ε-prediction models. Consistency models, in contrast, aim to predict the solution of the PF-ODE trajectory directly. Furthermore, Stable Diffusion models need a classifier-free guidance strategy to generate high-quality images. The AnimateLCM framework therefore employs a classifier-free guidance augmented ODE solver to sample adjacent pairs on the same trajectory, resulting in better efficiency and quality. Existing work has also shown that generation quality and training efficiency are heavily influenced by the number of discrete points in the trajectory. A smaller number of discrete points accelerates training, whereas a larger number results in less bias during training.
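The idea of a classifier-free guidance augmented solver producing an adjacent pair can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the framework's training code: the function names are hypothetical, the guidance formula uses the common `uncond + w * (cond - uncond)` convention (other conventions exist), and a deterministic DDIM update stands in for the ODE solver.

```python
import numpy as np


def cfg_epsilon(eps_cond, eps_uncond, guidance_scale):
    """Combine conditional and unconditional noise predictions (CFG).

    Convention assumed here: start from the unconditional prediction and
    move toward the conditional one by `guidance_scale`.
    """
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)


def ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update from a noisier to a less noisy timestep."""
    # Estimate the clean sample implied by the noise prediction.
    x0_pred = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)
    # Re-noise that estimate to the earlier timestep's noise level.
    return np.sqrt(alpha_bar_prev) * x0_pred + np.sqrt(1.0 - alpha_bar_prev) * eps


def adjacent_pair(x_t, eps_cond, eps_uncond, guidance_scale,
                  alpha_bar_t, alpha_bar_prev):
    """Return two neighbouring points on the same guided trajectory."""
    eps = cfg_epsilon(eps_cond, eps_uncond, guidance_scale)
    x_prev = ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev)
    return x_t, x_prev


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x0 = rng.standard_normal((4, 8))      # toy "latent"
    noise = rng.standard_normal((4, 8))
    alpha_bar_t, alpha_bar_prev = 0.5, 0.8
    x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * noise
    # Pretend both predictions recover the true noise exactly.
    pair = adjacent_pair(x_t, noise, noise, 7.5, alpha_bar_t, alpha_bar_prev)
    print(pair[0].shape, pair[1].shape)
```

During consistency distillation, a pair like this is what the online and target models are asked to map to the same output. Skipping more timesteps between the two points corresponds to using fewer discrete points on the trajectory.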

Decoupled Consistency Learning

For consistency distillation, it has been observed that the training data heavily influences the final generation quality of consistency models. However, the major issue with currently available public video datasets is that they often contain watermarked or low-quality data, and may come with overly brief or ambiguous captions. Furthermore, training the model directly on high-resolution videos is computationally expensive and time-consuming, making it infeasible for most researchers.

Given the availability of filtered high-quality image datasets, the AnimateLCM framework proposes to decouple the distillation of motion priors and image generation priors. More specifically, the framework first distills the Stable Diffusion model into an image consistency model using filtered, high-quality, higher-resolution image-text datasets. It does so by training lightweight LoRA weights on the layers of the Stable Diffusion model while keeping the original weights frozen. Once tuned, the LoRA weights work as a versatile acceleration module that has demonstrated its compatibility with other personalized models in the Stable Diffusion community. For inference, the framework merges the LoRA weights into the original weights, so inference speed is not affected.

After the AnimateLCM framework obtains the consistency model at the image generation level, it freezes both the Stable Diffusion weights and the LoRA weights on top of them. The model then inflates the 2D convolution kernels to pseudo-3D kernels to train the consistency model for video generation. It also adds temporal layers with zero initialization and a block-level residual connection. This setup ensures that the output of the model is not affected at the start of training. Under the guidance of open-source video diffusion models, the AnimateLCM framework then trains the temporal layers extended from the Stable Diffusion model.
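Two of the mechanisms described above are easy to check numerically: merging LoRA weights into a frozen layer leaves the output unchanged while removing the extra computation, and a zero-initialized temporal layer with a residual connection starts out as an identity mapping. The sketch below uses toy linear layers in NumPy. All names, shapes and the `scale` factor are illustrative assumptions, not the framework's actual modules.

```python
import numpy as np


def lora_forward(x, W, A, B, scale):
    """Frozen linear layer plus a separate low-rank LoRA branch.

    x: (batch, in), W: (out, in), A: (rank, in), B: (out, rank).
    """
    return x @ W.T + scale * (x @ A.T) @ B.T


def merge_lora(W, A, B, scale):
    """Fold the low-rank update into the base weight for inference."""
    return W + scale * (B @ A)


def temporal_residual(h, W_temporal):
    """Toy temporal layer: mix features across frames, then add a residual.

    h: (frames, features), W_temporal: (frames, frames).
    With W_temporal initialized to zeros, the layer returns h unchanged.
    """
    return h + W_temporal @ h


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((5, 6))
    W = rng.standard_normal((4, 6))
    A = rng.standard_normal((2, 6))
    B = rng.standard_normal((4, 2))

    separate = lora_forward(x, W, A, B, scale=0.5)
    merged = x @ merge_lora(W, A, B, scale=0.5).T
    print("merged matches separate branch:", np.allclose(separate, merged))

    frames = rng.standard_normal((8, 3))
    out = temporal_residual(frames, np.zeros((8, 8)))
    print("zero-init temporal layer is identity:", np.array_equal(out, frames))
```

After merging, the layer is a single matrix multiplication again, which is why the LoRA adds no inference cost. The identity behaviour of the zero-initialized layer is what keeps the image-level model's output intact when video training begins.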

It’s important to recognize that while spatial LoRA weights are designed to expedite the sampling process without taking temporal modeling into account, and temporal modules are developed through standard diffusion techniques, their direct integration tends to corrupt the representation at the onset of training. This presents significant challenges in effectively and efficiently merging them with minimal conflict. Through empirical research, the AnimateLCM framework has identified a successful initialization approach that not only utilizes the consistency priors from spatial LoRA weights but also mitigates the adverse effects of their direct combination.

At the onset of consistency training, the pre-trained spatial LoRA weights are inserted only into the online consistency model, and the target consistency model is left without them. This ensures that the target model, which provides the learning target for the online model, does not generate faulty predictions that could harm the online model's learning. Throughout training, the LoRA weights are progressively incorporated into the target consistency model via an exponential moving average (EMA) process, reaching the desired weight balance after several iterations.
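The effect of this initialization can be illustrated with a toy EMA loop. In the sketch below the online weights are held fixed so that only the EMA behaviour is visible; in real training they would also be updated by gradient steps. The decay value, the function names and the single weight matrix are assumptions made for illustration.

```python
import numpy as np


def ema_update(target, online, decay):
    """Move the target weights a small step toward the online weights."""
    return decay * target + (1.0 - decay) * online


def simulate_lora_absorption(base, lora_delta, decay, steps):
    """Track how much of the LoRA update the target model has absorbed.

    The online model starts with the spatial LoRA merged in, while the
    target model starts from the base weights only.
    """
    online = base + lora_delta   # LoRA inserted into the online model
    target = base.copy()         # target model starts without LoRA
    fractions = []
    for _ in range(steps):
        target = ema_update(target, online, decay)
        absorbed = np.linalg.norm(target - base) / np.linalg.norm(lora_delta)
        fractions.append(float(absorbed))
    return fractions


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    base = rng.standard_normal((4, 4))
    delta = 0.1 * rng.standard_normal((4, 4))
    history = simulate_lora_absorption(base, delta, decay=0.95, steps=100)
    for step in (1, 10, 50, 100):
        print(f"step {step:3d}: absorbed fraction = {history[step - 1]:.4f}")
```

With the online weights fixed, the absorbed fraction after n updates is 1 - decay^n, so the target model starts from clean predictions and takes on the LoRA weights smoothly rather than all at once.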

Teacher Free Adaptation

Stable Diffusion models and plug-and-play adapters often go hand in hand. However, it has been observed that although these adapters work to some extent with the distilled video consistency model, they tend to lose control over details, largely because most of them were trained with image diffusion models. To counter this issue, the AnimateLCM framework opts for teacher-free adaptation, a simple yet effective strategy that either adapts existing adapters for better compatibility or trains adapters from the ground up. This approach allows the framework to achieve controllable video generation and image-to-video generation with a minimal number of steps, without requiring teacher models.

AnimateLCM: Experiments and Results

The AnimateLCM framework uses Stable Diffusion v1-5 as the base model and the DDIM ODE solver for training. It uses Stable Diffusion v1-5 with open-source motion weights as the teacher video diffusion model, and the experiments are conducted on the WebVid2M dataset without any additional or augmented data. For controllable video generation, the framework uses the TikTok dataset with brief BLIP-generated captions as text prompts.

Qualitative Results

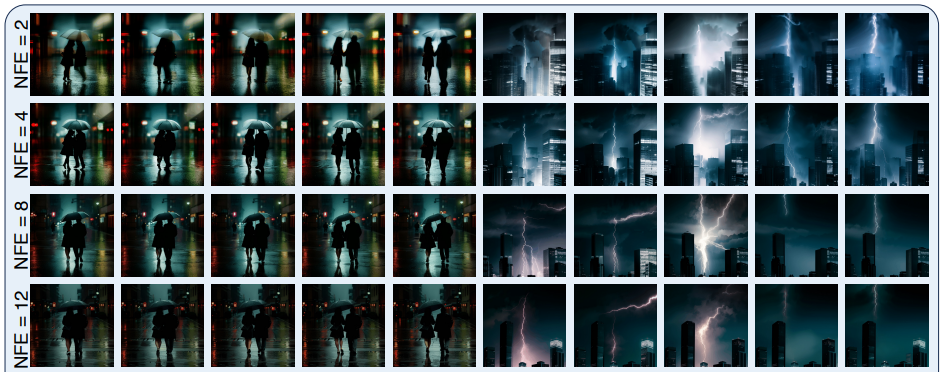

The figure above shows results of the four-step generation method implemented by the AnimateLCM framework for text-to-video generation, image-to-video generation, and controllable video generation.

As can be observed, the results are satisfactory in all three settings, and they demonstrate the ability of the AnimateLCM framework to follow the consistency property even with varying inference steps, maintaining similar motion and style.

Quantitative Results

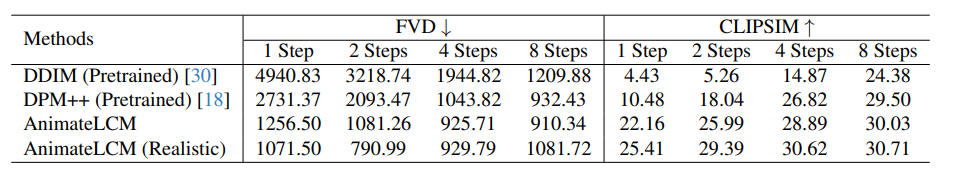

The figure above presents the quantitative results and compares the AnimateLCM framework with the state-of-the-art DDIM and DPM++ methods.

As can be observed, the AnimateLCM framework outperforms the existing methods by a significant margin, especially in the low-step regime of 1 to 4 steps. Moreover, the AnimateLCM metrics in this comparison are evaluated without classifier-free guidance (CFG), which saves nearly 50% of the inference time and peak inference memory. To further validate its performance, the spatial weights within the AnimateLCM framework are replaced with those of a publicly available personalized realistic model that strikes a good balance between fidelity and diversity, which boosts performance further.

Final Thoughts

In this article, we have talked about AnimateLCM, a personalized diffusion model with adapters that aims to generate high-fidelity videos with minimal steps and computational costs. The AnimateLCM framework is inspired by the Consistency Model, which accelerates sampling with minimal steps by distilling pre-trained image diffusion models, and by its successful extension, the Latent Consistency Model (LCM), which facilitates conditional image generation. Instead of conducting consistency learning directly on the raw video dataset, the AnimateLCM framework proposes a decoupled consistency learning strategy that separates the distillation of motion generation priors and image generation priors, allowing the model to enhance the visual quality of the generated content and improve training efficiency simultaneously.