A text-to-video model freely available to everyone.

Next Week in The Sequence:

- Edge 405: Our series about autonomous agents starts diving into the topic of memory. We discuss a groundbreaking paper published by Google and Stanford University demonstrating that memory-augmented LLMs are computationally universal. We also provide an overview of the Camel framework for building autonomous agents.

- The Sequence Chat: An interesting interview with one of the engineers behind the Azure OpenAI Service.

- Edge 406: Dive into Anthropic’s new LLM interpretability method.

📝 Editorial: Amazing Dream Machine

Video is widely regarded as one of the next frontiers for generative AI. Months ago, OpenAI dazzled the world with its minute-long videos, while tools from startups such as Runway and PikaLabs are being widely adopted across different industries. The challenges with video generation are many, ranging from physics understanding to the lack of high-quality training datasets.

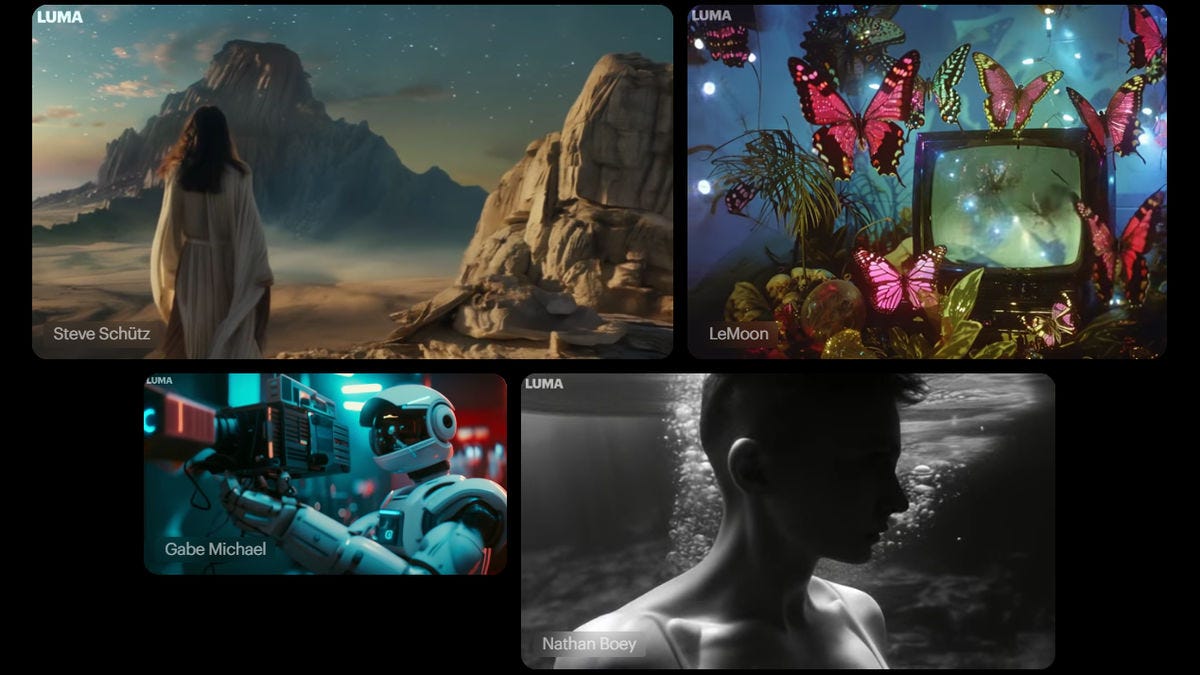

This week, we had a surprising new entrant in the generative video space. A few days ago, Luma AI unveiled Dream Machine, a text-to-video generation model that produces high-quality, physically accurate short videos. Luma claims that Dream Machine is based on a transformer architecture trained directly on videos and attributes the high quality of the outputs to this approach. Beyond the quality, the most impressive aspect of Dream Machine is that it is freely available. This contrasts with other text-to-video models such as OpenAI’s Sora and Kuaishou’s Kling, which remain available only to a select group of partners. The distribution model is particularly impressive when we consider that video generation carries very high computational costs. This reminded me of the Stable Diffusion release at a time when most text-to-image models were still closed source.

The generative video space is in its very early stages, and my prediction is that most of the players will be acquired by the largest generative AI providers. Mistral, Anthropic, and Cohere have all raised tremendous sums of venture capital but remain constrained to language models. For these companies to compete with OpenAI, acquiring the early innovative startups working on generative video makes a lot of sense.

For now, you should definitely try Dream Machine. It makes you dream of the possibilities.

📽 Recommended webinar

Do you still rely on ‘vibe checks’ or ChatGPT for your GenAI evaluations? See the future of instant, accurate, low-cost evals in our upcoming webinar, including:

- How to conduct GenAI evaluations

- The problem with human and LLM-as-a-judge approaches

- The future of ultra-low cost and latency evaluations

🔎 ML Research

LiveBench

Researchers from Abacus.AI, NVIDIA, UMD, USC and NYU published LiveBench, a new multidimensional benchmark that addresses LLM benchmark memorization. The LiveBench benchmark changes regularly, adding new questions that are scored with objective evaluations —> Read more.

Husky

Researchers from Meta AI, Allen AI and University of Washington published a paper introducing Husky, an LLM agent for multi-step reasoning. Husky is able to reason over a given action space to perform tasks that span numerical, tabular and knowledge-based datasets —> Read more.

Mixture of Agents

Researchers from Together.ai, Duke and Stanford University published a paper introducing mixture-of-agents (MoA), an architecture that combines different LLMs for a given task. Some of the initial results showed MoA outperforming GPT-4o across different benchmarks —> Read more.
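To make the idea of combining several LLMs on one task more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the paper's implementation: the "propose, then aggregate" flow is one plausible reading of the architecture, and `mixture_of_agents`, `terse`, `verbose` and `pick_longest` are hypothetical names, with plain functions standing in for real model calls.

```python
from typing import Callable, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns text.
Model = Callable[[str], str]

def mixture_of_agents(prompt: str, proposers: List[Model],
                      aggregator: Model, layers: int = 1) -> str:
    """Run `layers` rounds of proposals, then aggregate the final round."""
    context = prompt
    for _ in range(layers):
        # Every proposer answers independently, given the same context.
        proposals = [model(context) for model in proposers]
        # The next round (or the aggregator) sees the prompt plus all proposals.
        numbered = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(proposals))
        context = f"{prompt}\n\nCandidate answers:\n{numbered}"
    return aggregator(context)

# Toy stand-ins so the sketch runs without any API access (assumptions).
def terse(prompt: str) -> str:
    return "Paris"

def verbose(prompt: str) -> str:
    return "The capital of France is Paris."

def pick_longest(context: str) -> str:
    # A real aggregator would be another LLM; this one keeps the longest candidate.
    candidates = [line.split("] ", 1)[1]
                  for line in context.splitlines() if line.startswith("[")]
    return max(candidates, key=len)

answer = mixture_of_agents("What is the capital of France?",
                           [terse, verbose], pick_longest)
print(answer)
```

Swapping the toy functions for real model clients would leave the control flow unchanged, which is the point of the sketch: the combination logic is independent of which models are plugged in.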

Test of Time

Researchers from Google DeepMind published a paper introducing Test of Time, a benchmark for evaluating LLMs on temporal reasoning capabilities in scenarios that require temporal logic. The benchmark uses synthetic datasets to avoid prior memorization that could produce misleading results —> Read more.
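A rough sketch of why synthetic data helps here: if questions are generated on the fly, the exact text is unlikely to appear in any training corpus, yet the correct answer is known by construction. The Python below is only an illustration of that principle. The question template and the names `build_question` and `random_question` are assumptions, not the benchmark's actual format.

```python
import random
from datetime import date, timedelta
from typing import Tuple

def build_question(start: date, gap_days: int) -> Tuple[str, str]:
    """Return a date-arithmetic question and its ground-truth answer."""
    end = start + timedelta(days=gap_days)
    question = (
        f"Event A happened on {start.isoformat()}. "
        f"Event B happened {gap_days} days later. "
        "On what date did Event B happen?"
    )
    return question, end.isoformat()

def random_question(rng: random.Random) -> Tuple[str, str]:
    """Sample a fresh question; a seeded rng makes the sample reproducible."""
    start = date(2000, 1, 1) + timedelta(days=rng.randrange(0, 9000))
    gap_days = rng.randrange(1, 400)
    return build_question(start, gap_days)

question, answer = random_question(random.Random(0))
print(question)
print(answer)
```

A model's reply can then be compared against `answer` directly, with no human grading involved.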

TextGrad

Researchers from Stanford University published a paper unveiling TextGrad, an automatic differentiation technique for multi-LLM systems. TextGrad works similarly to backpropagation in neural networks, distributing textual feedback from LLMs to improve individual components of the system —> Read more.

Transformers and Neural Algorithmic Reasoners

Researchers from Google DeepMind published a paper proposing a technique that combines transformers with GNN-based neural algorithmic reasoners. The resulting models could be more effective at reasoning tasks —> Read more.

🤖 Cool AI Tech Releases

Dream Machine

Luma Labs released Dream Machine, a text-to-video model with impressively high quality —> Read more.

Stable Diffusion 3 Medium

Stability AI open sourced Stable Diffusion 3 Medium, a 2 billion parameter text-to-image model —> Read more.

Unity Catalog

Databricks open sourced its Unity Catalog for datasets and AI models —> Read more.

🛠 Real World AI

Red Teaming at Anthropic

Anthropic shares practical advice learned from red teaming its Claude models —> Read more.

Serverless Notebooks at Meta

Meta engineering discusses how they use Bento and Pyodide to enable serverless interaction of Jupyter notebooks —> Read more.

📡 AI Radar

- Apple unveiled its highly anticipated Apple Intelligence strategy.

- Google scientist Francois Chollet announced a new $1M prize for models that beat his famous ARC benchmark.

- GPTZero announced $10 million in new funding for its AI content detection platform.

- Databricks unveiled new features to its Mosaic AI platform for building compound AI systems.

- AI healthcare company Tempus AI had a stellar public market debut.

- Camb.ai introduced Mars5, a voice cloning model with support for 140 languages.

- Flow Computing raised $4.3 million for its parallel CPU platform.

- Amazon announced a $230 million commitment to generative AI startups.

- Particle, an AI news reader platform, announced $10 million in ne

- AI finance platform AccountIQ raised $65 million in new funding.

- AI warehouse platform Retail Ready raised a $3.3 million seed round.

- AI-powered general ledger platform Light announced a $13 million round.

- Financial research platform Lina raised $6.6 million to enable AI for financial analysts.

- South Korean AI chip developers Rebellions and Sapeon merged.