As the EU’s AI Act prepares to come into force tomorrow, industry experts are weighing in on its potential impact, highlighting its role in building trust and encouraging responsible AI adoption. Curtis Wilson, Staff Data Engineer at Synopsys’ Software Integrity Group, believes the new regulation could…

Apple opts for Google chips in AI infrastructure, sidestepping Nvidia

In a report published on Monday, it was disclosed that Apple sidestepped industry leader Nvidia in favour of chips designed by Google. Instead of employing Nvidia’s GPUs for its artificial intelligence software infrastructure, Apple will use Google chips as the cornerstone of AI-related features and tools…

UAE blocks US congressional meetings with G42 amid AI transfer concerns

There have been reports that the United Arab Emirates (UAE) has “suddenly cancelled” the ongoing series of meetings between a group of US congressional staffers and Emirati AI firm G42, after some US lawmakers raised concerns that this practice may lead to the transfer of advanced…

Canva Expands AI Capabilities with Acquisition of Leonardo.ai

Canva has announced its acquisition of Leonardo.ai, an Australian generative AI startup. This strategic purchase positions Canva to compete more aggressively in the rapidly evolving market for AI-enhanced design platforms. The acquisition of Leonardo.ai represents a major step forward for Canva in its quest to build…

Testing AI Tools? Don’t Forget to Think About the Total Cost.

In 2023, AI quickly moved from a novel and futuristic idea to a core component of enterprise strategies everywhere. While ChatGPT is one of the most popular shadow IT software applications, IT leaders are already working to formally adopt AI tools. While the average use of…

Global data breach costs hit all-time high – CyberTalk

EXECUTIVE SUMMARY:

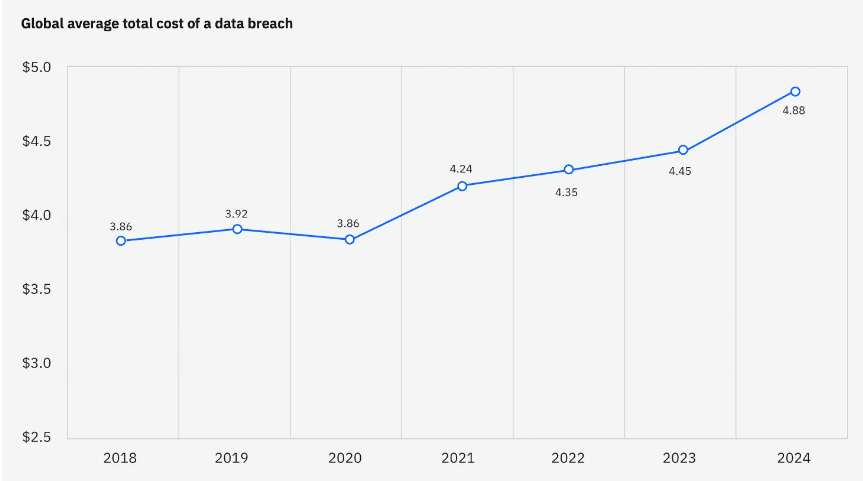

Global data breach costs have hit an all-time high, according to IBM’s annual Cost of a Data Breach report. The tech giant collaborated with the Ponemon Institute to study more than 600 organizational breaches between March 2023 and February 2024.

The breaches affected 17 industries, across 16 countries and regions, and involved leaks of 2,000-113,000 records per breach. Here’s what researchers found…

Essential information

The global average cost of a data breach is $4.88 million, up nearly 10% from last year’s $4.45 million. Key drivers of the year-over-year cost spike included post-breach third-party expenses and lost business.

More than half of the organizations interviewed said that they are passing breach costs on to customers through higher prices for goods and services.

More key findings

- For the 14th consecutive year, the U.S. has the highest average data breach cost worldwide, at nearly $9.4 million.

- In the last year, Canada and Japan both experienced drops in average breach costs.

- Most breaches could be traced back to one of two sources – stolen credentials or a phishing email.

- Seventy percent of organizations noted that breaches led to “significant” or “very significant” levels of disruption.

Deep-dive insights: AI

The report also observed that an increasing number of organizations are adopting artificial intelligence and automation to prevent breaches. Nearly two-thirds of organizations were found to have deployed AI and automation technologies across security operations centers.

Organizations that used AI in prevention workflows saw average breach costs $2.2 million lower than organizations that did not.

Right now, only 20% of organizations report using generative AI security tools. However, those that have implemented them note a net positive effect. Generative AI security tools can reduce the average cost of a breach by more than $167,000, according to the report.

Deep-dive insights: Cloud

Breaches spanning multiple cloud environments were found to cost more than $5 million on average. Of all breach types, they also took the longest to identify and contain, reflecting how difficult it is to locate data and protect it.

In cloud-based breaches, commonly stolen data types included personally identifiable information (PII) and intellectual property (IP).

As generative AI initiatives draw this data into new programs and processes, cyber security professionals are encouraged to reassess corresponding security and access controls.

The role of staffing issues

A number of organizations that contended with cyber attacks were found to have under-staffed cyber security teams. Staffing shortages are up 26% compared to last year.

Organizations with cyber security staff shortages averaged an additional $1.76 million in breach costs as compared to organizations with minimal or no staffing issues.

Staffing issues may be contributing to the increased use of AI and automation, which, again, have been shown to reduce breach costs.


Mistral Large 2: Enhanced Code Generation and Multilingual Capabilities

Mistral AI introduced Mistral Large 2 on July 24, 2024. This latest model is a significant advancement in Artificial Intelligence (AI), providing extensive support for both programming and natural languages. Designed to handle complex tasks with greater accuracy and efficiency, Mistral Large 2 supports over 80…

Chaim Linhart, PhD, Co-founder & CTO of Ibex Medical Analytics – Interview Series

Chaim Linhart, PhD, is the CTO and Co-Founder of Ibex Medical Analytics. He has more than 25 years of experience in algorithm development, AI and machine learning from academia as well as serving in an elite unit in the Israeli military and at several tech companies…

AI at the International Mathematical Olympiad: How AlphaProof and AlphaGeometry 2 Achieved Silver-Medal Standard

Mathematical reasoning is a vital aspect of human cognitive abilities, driving progress in scientific discoveries and technological developments. As we strive to develop artificial general intelligence that matches human cognition, equipping AI with advanced mathematical reasoning capabilities is essential. While current AI systems can handle basic…

AI race and competition law: Balancing choice & innovation

Uncover the ongoing “AI Race” and its impact on competition law, focused on recent advancements by Apple and Microsoft, alongside UK and EU law…