
Telemetry data, the new oil: the importance of securing IoT – CyberTalk

Miri Ofir is the Research and Development Director at Check Point Software. Antoinette Hodes is a Global Solutions Architect and an Evangelist with the Check Point Office of the CTO.

Introduction

In today’s interconnected world, the Internet of Things (IoT) has become ubiquitous, enabling the efficient exchange of data between devices and systems. IoT devices generate large volumes of valuable data, including telemetry data. What is telemetry data? Telemetry data is the information collected and transmitted by devices, including sensors, actuators and other connected (IoT or OT) devices. It covers a wide range of data, such as resource information (disk space, CPU and memory usage) and data about open ports and active connections. Environmental metrics like temperature, pressure, humidity, location and speed are also collected by sensors and sent as telemetry. Finally, system and security events, anomalies and alerts are telemetry too. Telemetry data provides real-time insights into the status, behavior, and performance of devices and the systems they are connected to.
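As a concrete illustration, the following Python sketch assembles one telemetry record using only the standard library. It captures a few of the resource metrics listed above (total and free disk space, CPU count) plus one environmental reading. The record layout, the field names, and the `read_temperature_c` helper are hypothetical; a real device would read its own sensors and follow whatever schema its platform expects.

```python
import json
import os
import shutil
import socket
import time


def read_temperature_c() -> float:
    """Hypothetical sensor helper: returns a fixed value in place of a real reading."""
    return 21.5


def collect_telemetry(path: str = "/") -> dict:
    """Build one telemetry record from resource metrics and a sensor reading."""
    disk = shutil.disk_usage(path)  # named tuple with total, used, free (in bytes)
    return {
        "device_id": socket.gethostname(),
        "timestamp": time.time(),  # seconds since the Unix epoch
        "resources": {
            "disk_total_bytes": disk.total,
            "disk_free_bytes": disk.free,
            "cpu_count": os.cpu_count(),  # may be None if it cannot be determined
        },
        "environment": {
            "temperature_c": read_temperature_c(),
        },
    }


if __name__ == "__main__":
    # JSON is a common (but not mandatory) wire format for telemetry
    print(json.dumps(collect_telemetry(), indent=2))
```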

In the age of Industry 4.0, collecting telemetry data has become increasingly important. This data is vital for the efficient functioning of modern industries and offers several benefits. Firstly, collecting telemetry data allows businesses to gain valuable insights into their operations and processes. By analyzing this data and monitoring in depth, companies can identify areas for improvement, optimize resource allocation, and enhance overall productivity. Secondly, telemetry data enables predictive maintenance, where potential issues or faults in machinery can be detected in advance. This proactive approach helps prevent costly breakdowns, reduces downtime and increases equipment lifespan. Additionally, telemetry data plays a crucial role in ensuring product quality and safety. By continuously analyzing sensor data, manufacturers can monitor and control the production process, ensuring adherence to quality standards and minimizing defects. Finally, telemetry data facilitates real-time decision-making. With up-to-date and accurate information, managers can make informed choices, react swiftly to changing conditions, and improve operational efficiency.

Securing telemetry data | The key to protecting sensitive data

Depending on the context and the specific information it contains, telemetry data can be considered sensitive. In some cases, telemetry data may not be inherently sensitive, especially if it only contains general operational information without any personally identifiable information (PII) or sensitive details. For example, telemetry data that simply indicates the temperature or power consumption of a device may not be classified as sensitive. However, certain types of telemetry data can indeed be sensitive. For instance, if telemetry data includes PII, such as user identities, email addresses, or other personal information, it would be considered sensitive data. Additionally, telemetry data that reveals intimate details about an individual’s behavior, preferences or health could also be deemed sensitive.
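Because sensitivity depends on what a record contains, one common mitigation is to strip PII from telemetry before it leaves the device. The Python sketch below redacts fields by name and recurses into nested dictionaries; the `PII_FIELDS` set is a hypothetical example, and a real system would derive it from its own data classification rather than a hard-coded list. Name-based redaction is a simple technique chosen for illustration and will miss PII stored under unexpected keys or inside lists.

```python
import copy

# Hypothetical field names treated as PII in this example only.
PII_FIELDS = frozenset({"user_id", "email", "full_name", "gps_location"})

REDACTED = "[REDACTED]"


def redact(record: dict, pii_fields: frozenset = PII_FIELDS) -> dict:
    """Return a copy of `record` with PII fields replaced in it and in nested dicts.

    Values inside lists are copied as-is and are NOT inspected.
    """
    cleaned = {}
    for key, value in record.items():
        if key in pii_fields:
            cleaned[key] = REDACTED
        elif isinstance(value, dict):
            cleaned[key] = redact(value, pii_fields)  # recurse into nested records
        else:
            cleaned[key] = copy.deepcopy(value)  # leave the original record untouched
    return cleaned


if __name__ == "__main__":
    reading = {
        "device_id": "thermo-01",
        "temperature_c": 22.1,
        "owner": {"email": "user@example.com", "full_name": "A. User"},
    }
    print(redact(reading))
    # {'device_id': 'thermo-01', 'temperature_c': 22.1, 'owner': {'email': '[REDACTED]', 'full_name': '[REDACTED]'}}
```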

Importance of securing telemetry data | The safe future of IoT

1. Data privacy and confidentiality: IoT metrics and telemetry data often contain sensitive information about individuals, organizations, or critical infrastructure systems. Unauthorized access or manipulation of this data can lead to privacy breaches, industrial espionage, or even physical harm. Securing IoT metrics and telemetry data ensures the confidentiality and integrity of the information.

2. Protection against cyber threats: IoT devices are attractive targets for cyber criminals because of their potential vulnerabilities. Compromised devices can serve as gateways into networks or as launch points for large-scale attacks. Securing telemetry data with robust encryption, authentication, and access control measures helps mitigate these risks.

3. Maintaining trust and reputation: Organizations deploying IoT devices must prioritize the security of metrics and telemetry data to maintain the trust of their customers and stakeholders. Instances of data breaches can lead to severe reputational damage and financial losses. Protecting the integrity and confidentiality of telemetry data helps build trust and credibility in IoT deployments.
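The authentication measure mentioned in point 2 can be sketched with an HMAC, which lets a receiver check that a telemetry message came from a holder of the shared key and was not altered in transit. This Python example uses the standard `hmac` and `hashlib` modules; the hard-coded `DEVICE_KEY` and the `{"body", "hmac"}` message layout are assumptions for illustration only. An HMAC protects integrity and authenticity, not confidentiality: the payload still needs encryption (for example, TLS on the connection) to stay private.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a real deployment would provision a key per device
# and keep it in secure storage, never hard-coded in source.
DEVICE_KEY = b"example-device-key"


def sign(payload: dict, key: bytes = DEVICE_KEY) -> dict:
    """Wrap a telemetry payload with an HMAC-SHA256 tag over its canonical JSON."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "hmac": tag}


def verify(message: dict, key: bytes = DEVICE_KEY) -> dict:
    """Return the decoded payload if the tag matches, otherwise raise ValueError."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking timing information about the tag
    if not hmac.compare_digest(expected, message["hmac"]):
        raise ValueError("telemetry message failed authentication")
    return json.loads(message["body"])


if __name__ == "__main__":
    message = sign({"device_id": "thermo-01", "temperature_c": 22.1})
    print(verify(message))  # {'device_id': 'thermo-01', 'temperature_c': 22.1}
    tampered = {"body": message["body"].replace("22.1", "99.9"), "hmac": message["hmac"]}
    try:
        verify(tampered)
    except ValueError as err:
        print(err)  # telemetry message failed authentication
```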

Industry 4.0 use cases

In terms of Industry 4.0, several key applications have emerged to streamline operations and maximize efficiency. These use cases include:

- Predictive maintenance: By detecting faults before they cause failures, organizations can extend the lifespan of assets, minimize downtime, and optimize resource allocation.

- Proactive remediation: Taking swift action to address potential issues helps minimize damage and ensures uninterrupted operations.

- Anomaly and threat detection: By identifying anomalies and threats early on, companies can reduce the impact and mitigate the risks associated with security breaches.

- Quality control: Automating the inspection process reduces the need for human intervention, resulting in improved accuracy and efficiency.

- Enhanced cyber security: Analyzing network traffic and promptly identifying and responding to threats helps ensure a secure environment.

- Improved resource optimization: Utilizing vehicle tracking, optimizing route planning, and reducing fuel consumption can enhance delivery efficiency in the transportation sector.

- Supply chain management: Efficient inventory management, real-time tracking of goods, and fast response times enable streamlined operations and customer satisfaction.

- Production planning: Optimizing production processes ensures efficient resource utilization and timely delivery of products. This results in improved customer satisfaction and brand loyalty.
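The anomaly and threat detection use case can be illustrated with one of the simplest statistical checks, a rolling z-score over a stream of sensor readings. This Python sketch is a generic technique chosen for illustration, not a method attributed to any product; the default window of 10 readings and the threshold of 3 standard deviations are assumptions, and a real deployment would likely need to tune them and account for drift and noisy sensors.

```python
from statistics import mean, stdev


def find_anomalies(readings: list[float], window: int = 10, threshold: float = 3.0) -> list[int]:
    """Return indices of readings that deviate from the preceding `window`
    readings by more than `threshold` standard deviations (rolling z-score)."""
    if window < 2:
        raise ValueError("window must contain at least two readings")
    anomalies = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]  # past values only; the point under test is excluded
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as anomalous
            if readings[i] != mu:
                anomalies.append(i)
        elif abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    temps = [20.0, 20.5, 19.8, 20.1, 20.3, 19.9, 20.2, 20.0, 20.4, 19.7, 35.0, 20.1]
    print(find_anomalies(temps))  # [10]
```

Because the spike at index 10 then becomes part of the baseline, it widens the standard deviation for the next few readings, which is one reason real detectors often use more robust statistics.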

The roadmap to compliance | Telemetry data and industry regulations

There are specific regulations that pertain to telemetry data in certain industries or regions. Here are a few examples:

1. Healthcare: In the healthcare industry, the Health Insurance Portability and Accountability Act (HIPAA) in the United States and the European Union’s General Data Protection Regulation (GDPR) impose specific requirements for the collection, storage, and transmission of telemetry data related to patient health information. These regulations aim to protect the privacy and security of sensitive healthcare data.

2. Automotive: Telemetry data collected from vehicles, such as GPS location, speed and vehicle diagnostics, may be subject to regulation. For example, the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) govern the collection and use of personal data, including personal data collected from vehicles.

3. Aviation: The aviation industry has regulations governing the collection and transmission of telemetry data from aircraft. For instance, the International Civil Aviation Organization (ICAO) sets standards for flight data monitoring and analysis, including the collection and handling of telemetry data for safety and operational purposes.

4. Telecommunications: Telecommunications companies may be subject to regulations related to telemetry data, particularly in terms of data protection and privacy. These regulations can vary by country or region, such as the General Data Protection Regulation (GDPR) in the European Union or the Telecommunications Act in the United States.

As IoT continues to expand, securing sensitive data, metrics and telemetry data becomes increasingly critical. Protecting IoT data ensures privacy, mitigates cyber risks and maintains trust in IoT deployments. Predefined metrics offer consistency and efficiency. By embracing these concepts, organizations can enhance the security and reliability of their IoT systems, enabling them to fully leverage the benefits of telemetry data while minimizing potential risks.

In conclusion, collecting telemetry data is essential in the age of Industry 4.0, as it enables businesses to optimize processes, enhance productivity, ensure product quality, and make data-driven decisions. Securing that data is even more imperative.

Crash Bandicoot 4, Spyro Reignited Trilogy Dev Toys For Bob Is Splitting From Activision

Toys for Bob, the studio behind games like Crash Bandicoot N. Sane Trilogy, Spyro Reignited Trilogy, and Crash Bandicoot 4: It’s About Time, has announced it is splitting from its owner Activision Blizzard to go independent. It is also exploring a partnership with Microsoft.

Toys for Bob announced this in a statement released today, which explains why now is “the time to take the studio and our future games to the next level.”


Here’s Toys for Bob’s statement, in full:

“We’re thrilled to announce that Toys for Bob is spinning off as an independent game development studio.

Over the years, we’ve inspired love, joy, and laughter for the inner child in all gamers. We pioneered new IP and hardware technologies in Skylanders. We raised the bar for best-in-class remasters in Spyro Reignited Trilogy. We’ve taken Crash Bandicoot to innovative, critically acclaimed new heights.

With the same enthusiasm and passion, we believe that now is the time to take the studio and our future games to the next level. This opportunity allows us to return to our roots of being a small and nimble studio.

To make this news even more exciting, we’re exploring a possible partnership between our new studio and Microsoft. And while we’re in the early days of developing our next new game and a ways away from making any announcements, our team is excited to develop new stories, new characters, and new gameplay experiences.

Our friends at Activision and Microsoft have been extremely supportive of our new direction and we’re confident that we will continue to work closely together as part of our future.

So, keep your horns and your eyes out for more news. Thank you to our community of players for always supporting us through our journey. We can’t wait to share updates on our new adventure as an indie studio. Talk to you soon.”

Toys for Bob did not disclose a price (if there is one) associated with its split from Activision Blizzard, which arrives just a few months after Microsoft purchased the company for a colossal $69 billion.

Toys for Bob joins another studio leaving the hands of its owner today: With a $500 million deal, Embracer Group has sold Saber Interactive to a group of private investors, as reported by Bloomberg. Kotaku is also reporting today that Gearbox, the makers of the Borderlands series, is looking to break away from Embracer Group as well.

What kind of game do you hope Toys for Bob makes first? Let us know in the comments below!

Look Out World! Here Comes Atomos 2.0! – Videoguys

Discover the latest developments at Atomos in this insightful blog post by Andy Stout for RedSharkNews. Explore the return of Jeromy Young as CEO, the appointment of Peter Barber as COO, enticing product updates, and speculations on future developments.

In a dynamic shift at Atomos, as observed by Andy Stout for RedSharkNews, Jeromy Young reclaims the helm as CEO, injecting new energy into the company’s trajectory. Meanwhile, Peter Barber, renowned co-founder of Blackmagic Design, steps into the role of COO, bringing a wealth of experience and enthusiasm to the team.

Amidst these leadership changes, Atomos unveils a series of product updates aimed at captivating both existing and prospective users. From the reintroduction of a three-year warranty on core products to affordable upgrade paths for Ninja V/V+ users, the company demonstrates its commitment to customer satisfaction and innovation.

Looking ahead, speculation mounts about potential enhancements, particularly regarding Blackmagic RAW recording compatibility. With Jeromy Young’s pledge to embrace new technologies and expand Atomos’ ecosystem, exciting possibilities lie on the horizon.

Furthermore, Atomos continues to expand its ProRes RAW ecosystem, with support for an impressive array of cameras. Plans for enhanced H.265 options and NDI support further underscore the company’s dedication to staying at the forefront of technological advancements.

At the corporate level, Atomos embarks on a restructuring and recapitalization journey, with plans to return to stock market quotation in the near future. Leveraging Peter Barber’s expertise in strategic acquisitions, Atomos aims to explore new markets and opportunities for growth.

In conclusion, the stage is set for Atomos to embark on a new era of innovation and expansion. With Jeromy Young and Peter Barber at the helm, backed by a suite of compelling product updates, the company is poised to redefine the boundaries of creativity and excellence in the industry. Stay tuned for the next chapter in Atomos’ journey.

Read the full article by Andy Stout for RedSharkNews here.

Elevate Your Video Editing Game with Adobe Premiere Pro 24.2: A Compre – Videoguys

Discover the latest enhancements in Adobe Premiere Pro 24.2, including AI-powered editing tools, seamless social media integration, and optimized hardware support. Learn how these updates can streamline your workflow and elevate your video editing experience.

Are you ready to take your video editing skills to the next level? Look no further than Adobe Premiere Pro 24.2, the latest release packed with groundbreaking features designed to enhance your editing workflow. In this comprehensive overview by Madhuri Murlikrishnan, we’ll dive into the myriad enhancements that make Premiere Pro a powerhouse for content creators.

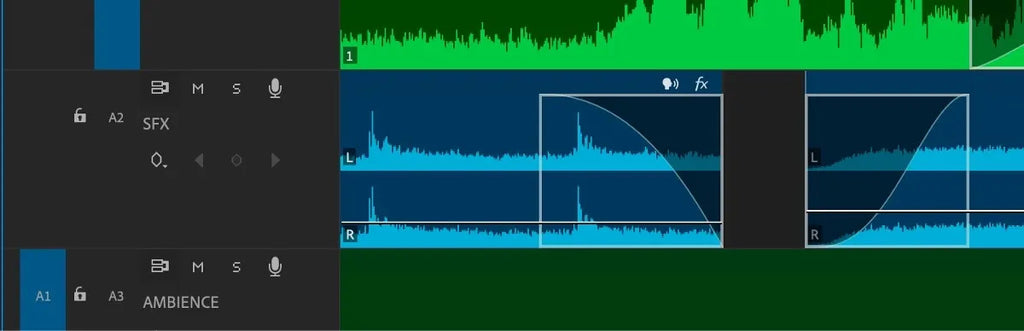

AI-Powered Editing: Streamlining Your Workflow with Precision

With the advent of AI-powered editing tools like Interactive Fade Handles, Audio Category Tagging, and the fully unleashed Enhance Speech function, Premiere Pro revolutionizes the editing process. These features not only expedite editing tasks but also empower users to achieve unparalleled audio quality, all while maintaining complete control over their creative vision.

Optimized Hardware Support: Unleashing the Power of AI

Harnessing the full potential of AI features requires optimized hardware support. Premiere Pro’s collaboration with leading hardware manufacturers like NVIDIA ensures that AI processing is finely tuned for speed and accuracy. Experience a significant boost in performance, especially with features like Enhance Speech, accelerated by NVIDIA RTX, delivering crystal-clear voice recordings with just a single click.

Seamless Social Media Integration: Elevate Your Presence on TikTok

In today’s digital landscape, social media integration is paramount. Premiere Pro’s native support for exporting to TikTok simplifies the process of sharing content directly from the editing timeline. Built-in project templates provide insight into TikTok’s formatting requirements, ensuring your videos stand out on this popular platform.

Enhanced Subtitle Creation: Captivate Your Audience with Dynamic Subtitles

Captivating your audience has never been easier with SubMachine, an innovative add-on that seamlessly integrates with Premiere Pro. By leveraging speech-to-text capabilities, SubMachine enables users to create dynamic and customizable subtitles with just a click, enhancing engagement and accessibility.

The Power of Collaboration: Integrating Third-Party Solutions

Premiere Pro’s ecosystem extends beyond its core features, with integrations and enhancements aimed at streamlining collaborative workflows. From remote editing solutions like LucidLink to real-time collaboration tools like BirdDog Cloud Transmitter, Adobe’s partnerships amplify the capabilities of Premiere Pro, empowering teams to work more efficiently than ever before.

Conclusion: Unlock Your Creative Potential with Adobe Premiere Pro 24.2

As we navigate the evolving landscape of video editing, Adobe Premiere Pro 24.2 stands as a beacon of innovation and efficiency. With AI-powered editing tools, seamless social media integration, and optimized hardware support, Premiere Pro empowers creators to unleash their creativity like never before. Elevate your video editing game with Premiere Pro 24.2 and embark on a journey of limitless possibilities.

Read the full blog post by Madhuri Murlikrishnan for Adobe here.

Ranking Final Fantasy VII Rebirth’s Minigames

Card games within larger video games can be extremely hit or miss. Inserting an optional deckbuilding TCG is one thing, but tying it to a side-quest storyline is risky. Thankfully, Queen’s Blood is downright fantastic. Within the first couple of matches, the collectible card game sank its hooks in us. During our gameplay sessions, we spent hours poring over our decks, crafting our strategies, and challenging every player we could find in Junon, Corel, Nibelheim, and every other Final Fantasy VII Rebirth region.

And if having superb, lane-based mechanics isn’t enough, Queen’s Blood is more than just a simple card game you can choose to engage with; one of the most intriguing side-storylines unfolds as you work your way up the ranks to become the world’s number-one Queen’s Blood player. Not only that, but we loved drawing blood against every single player we could find to add rare cards to our collection, which kept pulling us back into the deck-builder to tinker. Queen’s Blood may be intimidating at first, but if you take the time to learn, you’ll find one of the best in-game TCGs out there, and a minigame that deserves to be mentioned alongside the best minigames in Final Fantasy history.

For more, check out our tips and tricks and best Queen’s Blood deck build here.

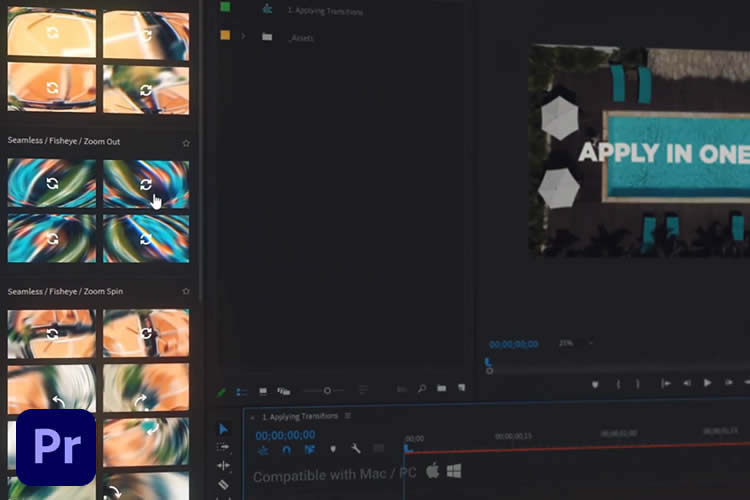

The 20+ Best Premiere Pro Transition Packs for Video Editors

In video editing, transitions do more than move viewers from one scene to the next; they play a crucial role in shaping the story and keeping your audience engaged.

A well-chosen transition can smoothly guide viewers through the narrative, subtly reinforcing the story’s pace, mood, and theme. This is why selecting the right transition pack is vital. It can dramatically enhance the storytelling and visual flow of your project, making your content not only more professional but also more compelling.

In this collection, we share the best Premiere Pro transitions currently available, including some high-quality free packs. These packs offer a wide range of effects, from the subtle to the eye-catching, designed to suit various editing needs and styles.

We’ll explore dynamic, seamless, creative, and essential transition packs, providing insights into how each can enhance your projects. Get ready to discover the video tools that will help you craft smooth, engaging video content in Premiere Pro that captivates your audience from start to finish.

The Types of Video Transitions

When editing a video, transitions are like the punctuation marks of your visual story. They guide the flow from one scene to the next and can dramatically affect the mood and pace of your narrative.

Here’s a breakdown of the different types of transitions you might consider for your projects:

- Cuts: The most basic transition, a cut, is an instant change from one scene to another. It’s like a period at the end of a sentence, offering a clear break before moving on to the next idea. Cuts are perfect for fast-paced videos or to keep the audience’s attention moving forward without distraction.

- Fades: Fades slowly blend scenes together. A “fade in” might bring a scene from black to visible, signaling the beginning of a story, while a “fade out” does the opposite, often signaling the end. Fades can create a sense of time passing or a change in mood.

- Wipes: Wipes move a new scene across the old one, which can be done in various directions. This type of transition can indicate a change in location or a passage of time. Wipes add a dynamic feel to the video, keeping the viewer engaged.

- Special Effects: These are more complex transitions that include morphs, flips, spins, and more. Special effects transitions are best used sparingly, as they can be distracting. They’re great for highlighting significant moments or changes in your story.
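Fades and wipes are, at bottom, simple per-pixel operations, which helps explain why they feel so different. The Python sketch below is a conceptual model only, not how Premiere Pro or any of the packs below implement transitions: it treats a frame as a small grid of grayscale values from 0 (black) to 1 (white) and the transition's progress as `t`, running from 0 to 1. A cut needs no code, since it simply swaps frame A for frame B at a single instant.

```python
Frame = list[list[float]]  # rows of grayscale pixel values in [0, 1]


def dissolve(a: Frame, b: Frame, t: float) -> Frame:
    """Cross-fade: every pixel is the weighted mix (1 - t) * A + t * B."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def fade_in(b: Frame, t: float) -> Frame:
    """Fade in from black: a dissolve whose outgoing frame is all zeros."""
    black = [[0.0] * len(row) for row in b]
    return dissolve(black, b, t)


def wipe_left_to_right(a: Frame, b: Frame, t: float) -> Frame:
    """Horizontal wipe: columns left of the moving edge show B, the rest still show A."""
    width = len(a[0])
    edge = round(t * width)  # number of columns already replaced by B
    return [row_b[:edge] + row_a[edge:] for row_a, row_b in zip(a, b)]


if __name__ == "__main__":
    a = [[0.0, 0.0, 0.0, 0.0]]  # outgoing shot: one black row of four pixels
    b = [[1.0, 1.0, 1.0, 1.0]]  # incoming shot: one white row
    print(dissolve(a, b, 0.25))  # [[0.25, 0.25, 0.25, 0.25]]
    print(wipe_left_to_right(a, b, 0.5))  # [[1.0, 1.0, 0.0, 0.0]]
```

A dissolve changes every pixel a little at a time, which reads as gradual and reflective, while a wipe changes some pixels completely and leaves others untouched, which reads as directional movement.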

Choosing the right type of transition depends on the story you’re telling and the emotion or reaction you want to evoke from your audience.

Essential Transition Packs for Premiere Pro

Every video editor needs a set of go-to transitions that work across various projects. Essential transition packs include versatile, widely applicable effects that can elevate anything from corporate presentations to personal vlogs. These packs are a staple in your editing toolkit, ensuring you always have the right transition at hand.

1,200+ Premiere Pro Transitions

The Essential Transitions Pack for Premiere Pro offers over 1,200 transitions across 26 categories, designed to suit every possible editing need. This pack includes sound effects, and the transitions are resizable for any project. With one-click application, it’s perfect for quickly adding professional touches to any video project.

300+ Transitions Pack for Premiere Pro

This Premiere Pro pack is a comprehensive collection of 500+ transitions, split into ten categories: motion, zoom, glitch, light, roll, spin, stretch, VR, split, and mix. Compatible with Premiere Pro 2018 and above, this resizable template supports resolutions up to 8K, ensuring compatibility with projects of any scale. Complete with sound effects and boasting very fast render times, this pack comes with everything a video editor could ever need.

Media Transitions Pack for Premiere Pro

The Media Transitions Pack for Premiere Pro features 24 versatile transitions, including six directional options: Right, Left, Up, Down, LeftRight, and UpDown. The pack is fully customizable, so you can edit colors and adjust the transparency of elements to match your video project’s style perfectly.

Seamless Transition Packs for Premiere Pro

Seamless transition packs are the backbone of smooth storytelling. They’re perfect for narratives, documentaries, and presentations where maintaining a flow without noticeable interruptions is critical. These transitions help weave different scenes together into a single, cohesive story.

Seamless Ink Transitions for Premiere Pro

This transition pack includes 20 unique ink drawing-style animations, perfect for adding a creative touch to your videos. This modular pack allows for quick modifications and adjustments to fit your project’s needs. They’re ideal for video editors looking to infuse their videos with an artistic flair.

Seamless Transition Bundle for Premiere Pro

This Premiere Pro bundle comes with over 700 modern, seamless transitions, making it an essential download for any video editor. With an easy drag-and-drop setup, it caters to any FPS and includes sound FX for a complete audio-visual experience. This pack is perfect for editors looking for multiple options and efficiency in their workflow.

Seamless Film Transitions for Premiere Pro

This pack of 30 high-quality transitions has been designed to mimic the look of vintage 35mm, 16mm, and 8mm filmstrips, all in 4K resolution. With its fast rendering capability and synchronized sound effects, this high-quality transition collection will help to streamline your editing process, making it super easy to add a classic cinematic flair to your videos.

500+ Seamless Transitions for Premiere Pro

Download over 500 seamless transitions for Premiere Pro, all available in stunning 4K resolution. It comes with unique sound effects for each category and offers advanced control over target, motion, color, and effects. This pack is a game-changer for editors looking to elevate their video projects. Designed to work with Premiere Pro 2021 and above.

Seamless & Colorful Transitions for Premiere Pro

Dynamic Transition Packs for Premiere Pro

Dynamic transition packs are ideal for videos that demand energy and movement. They inject pace and vigor, perfect for action sequences, sports highlights, and any content that needs to keep the viewer on the edge of their seat.

Dynamic Transition Pack for Premiere Pro

This dynamic transition pack comes with ten simple, fullscreen colorful transitions that can instantly elevate any video project. With easy-to-use color controls, these transitions will add a vibrant touch to your video. This pack has been designed for editors looking for a quick way to enhance their visuals and make their content stand out. It works with Premiere Pro 2021 and above.

Energetic Seamless Transitions for Premiere Pro

Bring your videos to life with this energetic transition pack. It features ten blast-style transitions with realistic and silky, smoothly animated effects. Each transition comes with media placeholders for quick integration and full-color control, allowing for easy customization. This pack is ideal for editors aiming to add dynamic and captivating visuals to their projects. It works in Premiere Pro 2021 and above.

Clean & Fast Transitions for Premiere Pro (Free)

The Clean & Fast transitions pack for Premiere Pro offers smooth animated transitions that can quickly elevate any video project. Designed for ease of use, these transitions allow for simple drag-and-drop functionality. Just insert your media and hit render to see the magic happen.

Dynamic Transitions Premiere Pro MOGRT

This MOGRT transition pack brings your videos to life with vibrant, colorful transitions in stunning full HD. This Premiere Pro collection includes sound effects to complement your visuals and also offers full-color controls and media placeholders for quick and easy customization. Designed to work in Premiere Pro 2019 and newer.

Dynamic Light Flare Transitions for Premiere Pro

This Premiere Pro transition pack offers 12 high-energy light flare effects to make your videos pop. Available in both 4K (3840×2160) and Full HD (1920×1080) resolutions, they ensure your projects look sharp on any screen. This pack is ideal for editors looking to add a burst of brilliance to their edits quickly. Works with Premiere Pro CC 2018 and higher.

Creative Transition Packs for Premiere Pro

For editors looking to add a unique touch to their projects, creative transition packs offer a plethora of innovative effects. From whimsical wipes to fantastic fades, these packs allow for artistic expression in your edits. They’re great for projects that aim to stand out and capture the imagination of the audience.

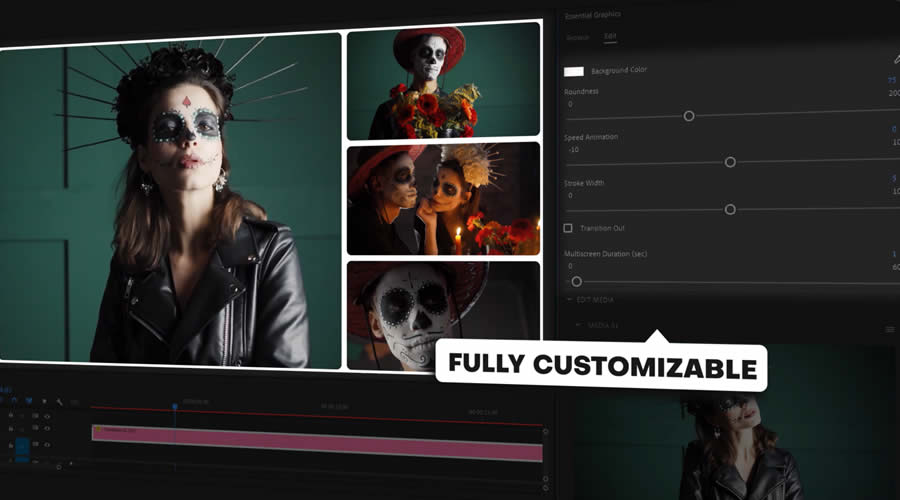

Multiscreen & Split Screen Premiere Pro Transitions

This vibrant collection of seamless, colorful transitions for Premiere Pro has been created to add a splash of creativity to your videos. These animated transitions come in 4K resolution, with color controls for quick and easy customization. Works with Premiere Pro 2021 and above. They’re perfect for giving your projects an energetic look and feel.

This transitions pack offers over 80 transitions, perfect for creating engaging montages or split-screen effects. Works with Premiere Pro CC 2023 and above, these 4K (3840×2160p) transitions are fully customizable, catering to any video’s specific needs. With drag-and-drop functionality, they provide a user-friendly editing experience, making complex multi-screen transitions simple.

Ink Transitions for Premiere Pro (Free)

This free Premiere Pro pack includes five fluid ink-style transitions, offering a unique and creative way to blend scenes together. These transitions are easily customized—simply drop your media into the shot sequence and render. It’s an ideal choice for editors looking to add an artistic touch to their videos with minimal effort.

Scratched Film Transitions for Premiere Pro

The Scratched Film Transitions pack for Premiere Pro comes with 15 unique transitions in both full HD and 4K resolutions. These transitions feature a scratched or glitchy film overlay, perfect for creating a vintage or edgy look. Short in duration, they seamlessly blend scenes together, adding texture and depth to your videos.

Textured Transitions for Premiere Pro (Free)

With this free transitions pack, you will get 15 MOGRT files, all with a unique textured style. These transitions allow you to customize the weight of the texture, the colors, and the appearance of the actual transition. Styles range from scribbled pencil and torn paper to zebra stripes and paper texture.

VHS Transition Pack for Premiere Pro

This Premiere Pro transition pack includes 24 transitions, bringing the nostalgic VHS effect to your videos. Offering both single and double versions of transitions, this pack allows for color editing and easy drag-and-drop functionality. Replace placeholders, and your retro-inspired transition is set to go.

Glitchy Transition for Premiere Pro (Free)

This free transition pack features ten unique and dynamic glitch-effect transitions. They are incredibly easy to use: simply drop in the two clips you want to transition between, and you’re done. Perfect for adding a modern, edgy feel to any video project with minimal effort.

Grid Transitions Pack for Premiere Pro

The Grid Transitions Pack for Premiere Pro comes with 18 dynamic animations in 4K resolution, perfect for creating grid split-screen effects. These transitions are very easy to use and edit, work with Premiere Pro CC 2021 and above, and are perfect for adding a structured, modern touch to any video project.

How to Install and Use Transition Packs in Premiere Pro

Installing and using transition packs in Premiere Pro can greatly elevate your video projects, making them smoother and more professional. Here’s a step-by-step guide to get you started:

How to Install Transition Packs:

- Download the Transition Pack: First, choose a Premiere Pro transition pack and download it.

- Open Premiere Pro: Launch Adobe Premiere Pro on your computer.

- Import the Pack: Go to the Effects panel, right-click, and choose Import Presets. Navigate to where you saved your downloaded transition pack, select it, and click Open. The transitions will now be available in the Effects panel under Presets.

How to Use Transitions Effectively:

- Match the Transition to the Mood: Consider what each transition conveys. Use sharp, quick cuts for energetic scenes and fades for more reflective moments.

- Don’t Overdo It: While transitions can enhance your video, using too many can distract the viewer. Stick to what serves the story best.

- Test Different Transitions: Experiment with various transitions to see which works best for your scene. Sometimes, a subtle change can make a big difference.

- Keep Your Audience in Mind: Think about your audience and the purpose of your video. A corporate presentation might require more straightforward transitions, while a creative project could benefit from something more dynamic.

- Practice Makes Perfect: The more you experiment with transitions, the better you’ll become at deciding which to use and when. Spend time getting to know the options available in your transition packs.

By following these steps and tips, you’ll be able to install and use transition packs in Premiere Pro to create videos that flow beautifully and keep your audience engaged.

Premiere Pro Transitions FAQs


What are Premiere Pro transition packs?

Transition packs for Premiere Pro are collections of pre-made effects used to move smoothly from one video clip to another. They range from simple fades and cuts to complex animations and effects, enhancing storytelling and visual appeal.

How do I choose the right transition pack for my project?

Consider the style and mood of your video. If it’s fast-paced, look for dynamic transitions. For more narrative-driven projects, seamless or subtle transitions might be best. Always align the transition style with your video’s tone.

Can I use transition packs in any version of Premiere Pro?

These transition packs are compatible with recent versions of Premiere Pro. However, it’s important to check the compatibility details the transition pack provides before downloading.

Can transitions be customized?

Many transition packs allow for customization. You can often change colors, duration, and other properties to better fit your video’s aesthetic.

Do I need to use transitions between all clips?

Not necessarily. Transitions should serve your storytelling, helping to create a smooth flow or emphasize a point. Use them purposefully rather than between every single clip.

Can I use these transition packs in other video editing software?

These transition packs have been specifically designed for Premiere Pro due to its unique features and capabilities. However, some creators might offer versions for other software. Always check the product details before downloading.

Do I need any special plugins to use these transition packs?

Some transition packs work directly within Premiere Pro without needing extra plugins, while others might require third-party plugins to unlock all their features. The description of the transition pack will state if you need additional software.

Is it possible to customize transitions to fit my project’s color scheme?

Yes, many transition packs come with customizable options allowing you to adjust colors, speed, and other elements to match your project’s aesthetic perfectly.

Conclusion

Choosing the right transition pack is crucial in video editing, as it not only enhances the visual flow but also supports and elevates the storytelling.

The Premiere Pro transition packs highlighted in this article offer a wide range of options for every type of project, from dynamic and seamless to creative and essential.

Experimenting with different transitions and creatively applying them can transform your video projects, making them more engaging and memorable. Remember, the goal is to complement your story, so let your creative instincts guide your choices.

Weekly News for Designers № 729 – Google Bard is Now Gemini, Animating Font Palette, SVG Filter Maker

Google Bard is Now Gemini

Bard evolves into Gemini, introducing a mobile app and the advanced Ultra 1.0 for broader, more sophisticated AI collaboration.

In Loving Memory of Square Checkbox

Tonsky mourns the decline of the square checkbox in UI design as round ones replace it, a significant shift away from a long-standing user interface convention.

Web Development Is Getting Too Complex

Juan Diego Rodríguez explores the growing complexity in web development, highlighting the overwhelming choice of tools and frameworks, and advocates for simplicity and project-specific technology selection.

How Subscription Fatigue Impacts Web Designers

Everything’s a subscription. And it impacts web designers and their clients in numerous ways. We look at what this means for our industry.

Animating Font Palette

This tutorial explores the new feature in Chrome 121 that allows for animating transitions between font palettes in color fonts using CSS.

SVG Filter Maker

SVG Filter Maker is a tool by Chris Kirk Nielsen for creating SVG filters through a graphical interface, letting you enhance designs without coding knowledge.

Going Beyond Pixels and (R)ems in CSS

Brecht De Ruyte discusses using font-based relative length units in CSS for responsive designs, highlighting alternatives to pixels and rems that adapt to user settings.

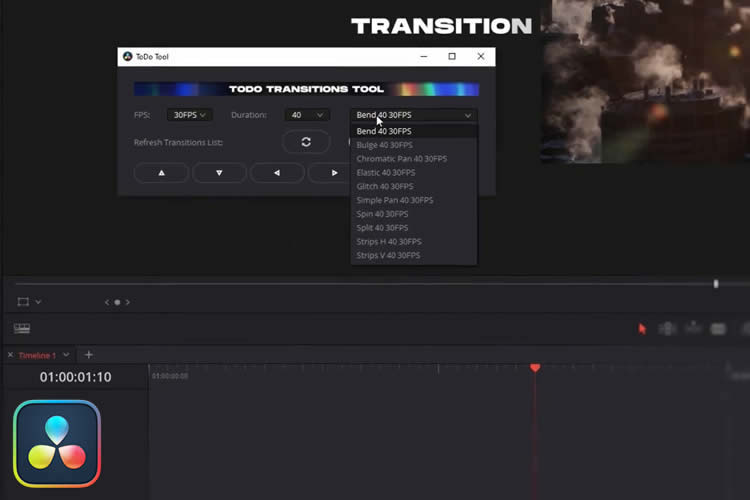

Top Transition Packs for DaVinci Resolve

A collection of the best transition packs for DaVinci Resolve, including high-quality free options. Professional-grade transition effects.

SVG Flag Icons

Over 200 optimized SVG country flag icons, crafted for perfect alignment on a 32px grid. Copy and paste.

Figicon Icon Library

A comprehensive library of high-quality, minimal, and pixel-perfect icons designed specifically for Figma projects.

Best Premiere Pro Transition Packs

A comprehensive collection of Premiere Pro transitions for video editors. Includes dynamic, seamless, creative, and essential transitions.

In Praise of Buttons

Niko Kitsakis discusses the evolution of button designs in UI, advocating for buttons that clearly indicate their function over minimalist designs for better usability.

Best Premiere Pro LUT Packs

We share the best LUTs for Premiere Pro (both free and premium). Add a professional touch to your color grading!

Performance Lab WordPress Plugin

This new plugin by the WordPress Performance Team enhances website speed with modules for image optimization, WebP support, and performance checks, with the aim of eventually merging them into WordPress core.

Lessons Learned in 25 Years of Freelancing

Embarking on a freelance web design career means embracing uncertainty. You’ll have to book enough gigs to keep you financially afloat. You’ll also need to weather the ups and downs. Most importantly, you’ll have to adjust to a changing landscape.

Nothing is guaranteed. Thus, I was astonished and amused when the calendar flipped to 2024. This year marks the 25th anniversary of my freelance business. How time flies.

I had no such milestones in mind back in 1999. My goals were relatively simple. I wanted to apply what I’d learned working for others. And I wanted to do things my way. Longevity wasn’t a priority for that 21-year-old kid.

Maybe I’ve beaten the odds. But I’m still working with clients. Some have even been with me for the entire run.

My goal today is to share some valuable lessons I’ve learned. Things that I hope will help you on your journey in this ever-changing industry. Here we go!

You Can Run Your Business Your Way

The world is full of copycats. Freelance web designers are no exception. We look at our peers and want to keep up with them. It’s a competitive field, after all.

Still, blending in makes it hard to stand out. Thus, trying to be like everyone else won’t get you far.

Think about what you want to accomplish. Ask yourself:

- What kinds of projects do I prefer?

- What technologies will I use?

- What’s my ideal work environment?

- What will my work schedule look like?

You can build the business you want. Now, you may need to make some sacrifices along the way. Not everything will go according to plan. However, you have an opportunity to work towards the future you envision.

The same principle applies to marketing. Your website doesn't have to look like anyone else's. Nor do you have to hide your personality.

Show the world the best version of yourself. And don’t be afraid to do things your way. You’ll position yourself for happiness in the long term.

You’ll Change When the Time Is Right

The web design industry has come a long way in 25 years. We went from hand-coding HTML to content management systems (CMS) like WordPress. We went from employing layout hacks to using native CSS specifications. And that’s just the tip of the iceberg.

There’s a constant pressure to change and adapt. A steady flow of new tools and techniques arrives almost daily. It’s easy to feel left behind.

However, I’ve found that changes occur organically. There’s a time when making a change makes sense. You may outgrow your current workflow. Or you might book a project that would benefit from a different approach.

WordPress is a prime example. I didn’t shift my business to WordPress the moment it became popular. I came to it out of need.

Clients began asking for more complex websites. WordPress allowed me to build them more efficiently. Eventually, I decided to focus on it exclusively.

You don’t have to force yourself into changing. Instead, take action when it feels right.

Make Time for Personal and Professional Growth

Busy freelancers can become overwhelmed with projects. Spending all of your time working could lead to burnout. Not to mention fewer opportunities to learn.

It’s easy to fall into this trap. Making money and crossing items off your to-do list are priorities. But at what cost?

We rely heavily on our brains. Crafting designs and writing code require mental fitness. A tired mind is less creative and less productive.

You need space to breathe. But no one will tell you to slow down. The responsibility is yours alone.

Schedule some time out of the office. That could be anything from a vacation to a daily walk. It’s a chance for a mental and physical reset.

Also, consider the importance of education. Learning a new skill or improving an existing one makes you better. It raises confidence and increases your earning potential.

The idea is to be mindful of your time. Use it wisely and allow yourself to rest and grow.

Be Careful Who You Work With

There is a temptation to book every gig that comes your way. On the surface, it makes sense. You build websites. The client needs a website. Everybody’s happy.

Freelancing is a long-term commitment, however. The project you accept today could be with you for years. Sometimes that’s a positive. But it can also be a drag on your business.

The issue can take a couple of forms. One is the classic “difficult” client. They’re picky and argue about every dollar. You dread your encounters with them. Do you want to be stuck in this situation for a decade or more?

On the other hand, not all projects will fit your business. Maybe a project uses a tool you no longer work with. Or the revenue doesn't match the required effort.

It’s OK to be choosy when it comes to clients. So, keep the future in mind when considering a project. A little foresight can save you a lot of headaches.

Customer Service Makes All the Difference

There is no shortage of web designers out there. Everyone from freelancers to agencies is looking to boost their bottom line. And there are only so many projects to go around.

There’s a myth that you must be the best designer or coder to succeed. Sure, skill and talent mean a lot. However, clients may not be able to tell your skills apart from anyone else’s. To them, you might be one of many options.

Therefore, the customer experience is another way to stand out. Give your clients more than they expect. It will keep them happy and encourage them to refer others to you.

Providing top-notch customer service is easier than you think. For example, these simple practices can make a positive impact:

- Answer client inquiries promptly and politely;

- Be honest with your project assessments;

- Explain technical concepts in plain language;

- Listen to your clients and help them determine their needs;

- Keep an open line of communication;

- Deliver on your promises.

Customer service can pay dividends for years to come. And it’s as important as any technical skill.

Some of your competitors don’t measure up in this area. Take advantage and give yourself an edge.

The Freelance Experience Is What You Make It

Being a freelancer takes a lot of effort. You must be willing to earn your way to success. And you’ll have to start building from the ground up.

However, you’ll also have a chance to define your vision for success. From there, you can craft a plan to achieve your goals. It could take years.

Thus, the lifestyle may not be a fit for everyone. But it’s possible for those who want to make that commitment. I’m living proof.

What will freelancing look like in another 25 years? Will I still be working then? Time will tell. My experiences so far have given me hope.

Good luck – wherever you are in your freelance journey. Keep going and make the most out of the opportunity. Take time to celebrate your milestones – you’ve earned it!
