It’s no secret that people love to save money. That explains the continued popularity of coupons and vouchers. They’re still used everywhere, from local grocery stores to big-box retailers.

Well-designed coupons entice customers to visit your store or website. They’re an excellent vehicle for generating leads as well. They spark interest and get people thinking about your brand.

It sounds great, right? If you want to create coupons or vouchers, we have you covered. We’ve rounded up a collection of attractive and easy-to-customize print templates.

The templates below work for multiple use cases. They also work with popular editing applications like Photoshop, InDesign, Illustrator, and Figma. There are great options no matter which app you prefer using.

So, check out these outstanding templates and find one to match your needs. Customers will rush to your location (or website) before you know it.

Coupon & Voucher Templates for Photoshop

Vacay Voucher & Gift Template

This template will make customers think of sunny days in paradise. It includes a tropical flair with beautiful type and graphic effects. It’s a great choice for resorts, hotels, and travel agents.

Voucher Photoshop Template

Here’s a template that adds color and fun to the mix. The included files are print-ready and layered for easier editing. The look is professional, friendly, and sure to attract new customers.

Gift Voucher PSD Template

You’ll find a classic retro theme with this Photoshop voucher template. The layout is top-notch and features gorgeous vector shapes. Use it for restaurants and bars, or customize it to match your brand.

Movie Gift Voucher Photoshop Template

Get your popcorn ready and give the gift of a movie. This template features an instantly recognizable look that is reminiscent of a movie ticket. It’s a unique way to say thank you to customers.

Elegant Gift Voucher Template

This colorful voucher card template will put a smile on customers’ faces. It evokes a hand-drawn artistic style that’s fun and functional. It suits any business that values a bit of flair in its promotions.

Fun Voucher Gift Voucher Template

Here’s a cheery option for giving customers a discount. The font and color schemes are bold, but you can easily customize them. There’s also room for an eye-catching photo on each side of the document.

Digital Voucher Template for Photoshop

A high-contrast color scheme is the star of this Photoshop template. Customers can’t help but take notice of the fun look and layout. It’s a perfect choice for nightclubs, salons, and boutiques.

Resto Voucher PSD Template

You can use this yummy voucher template to whet your customers’ appetites. Add some delicious photos, customize the text, and keep your kitchen humming. Everyone loves a good deal on good food. Is anyone else hungry?

Coupon & Voucher Templates for InDesign

InDesign Gift Voucher Template

This gift voucher template for InDesign is clean and minimal. It’s perfect for businesses that eschew gimmicks and over-the-top design. Instead, you’ll have something simple and functional. The no-nonsense approach will be a winner with customers.

Clean & Modern InDesign Gift Voucher Template

Here’s a great way to add a touch of class to your gift voucher. Spas, salons, and any place that pampers guests will want to check out this template. The simple pleasures are on full display here.

Minimal Gift Voucher Template for InDesign

Are you looking for a versatile template with a classic style? This package includes vouchers in both horizontal and vertical layouts. There are plenty of possibilities to make this one your own.

Discount & Gift InDesign Voucher Template

You’ll find bold typography and space for attention-grabbing images on this InDesign template. The design is modern and cleverly uses rectangular shapes. The result is a professional document that aims to impress.

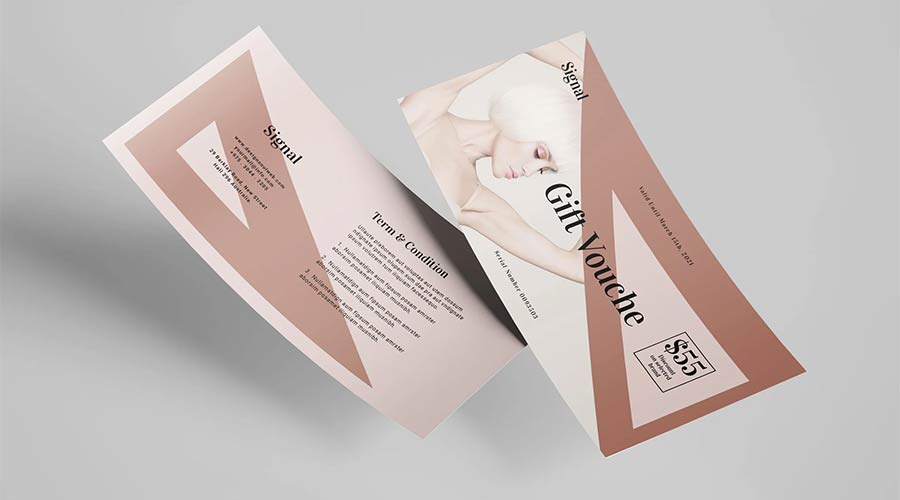

Stylish InDesign Gift Voucher Template

Here’s a different take on the previous template. It features triangular shapes and beautiful earth tones. Of course, you can also customize these elements to your heart’s content. It’s a high-end look that goes well with fashion-forward shops.

Coupon & Voucher Templates for Illustrator

Modern Gift Voucher Illustrator Template

A colorful contrast makes this gift certificate a winner. The dark background will make your can’t-miss deal stand out from the competition. Add your logo and a custom image to complete the look.

Minimal Voucher Template for Illustrator

Give your promotions a simple and modern style with this Illustrator template. It’s clean, easy to read, and includes space for all the details. Customize, print, and watch as happy customers come to your door.

Special Offer & Gift Voucher Template

There’s a fun retro vibe happening with this template. The text-based layout lends itself to emphasizing your promotion. You’ll find no fuss or fancy effects – just a highly versatile document to boost business.

Professional Gift Voucher Illustrator Template

Grab this template, open Adobe Illustrator, and create an attractive coupon in minutes. There is space for your promotional details, contact information, and social media links. And don’t forget your logo and a custom image or two.

Line Art Voucher Template for Illustrator

This template gives your coupons and vouchers an unforgettable artsy feel. The swirling line art border gives way to a pastel background for text and images. It’s a cool option for any business seeking a modern way to promote its services.

The Bakry Gift Card & Voucher Template

Give the gift of a tasty treat with this bakery-inspired Illustrator template. The lighthearted design is a fun way to promote a café, coffee shop, or restaurant. Your customers will hurry on over once they have this coupon in hand.

Coupon & Voucher Figma Templates

Autumn Fall Voucher Template for Figma

Use this template to celebrate the fall season. It features an autumn color scheme – but you can change it up for use any time of the year. Figma makes customization easy, after all.

Black Friday Voucher Figma Template

Prepare for the biggest shopping day of the year with this Black Friday template for Figma. Offer your best deal, and this standout document will do the rest. Its modern look is perfect for just about any use case.

Templates That Bring Style to Your Brand

Distributing coupons or vouchers is still an effective marketing strategy. It’s a great way to reward loyal customers and attract new ones. And it’s well worth the effort.

The templates above provide you with a great head start. They already feature attention-getting designs. Customize them and get them into the hands of customers. It’s a win-win scenario.

We hope you found this collection useful. Now that you have outstanding templates within reach, it’s time to get creative!