Vectorize, a pioneering startup in the AI-driven data space, has secured $3.6 million in seed funding led by True Ventures. This financing marks a significant milestone for the company, as it launches its innovative Retrieval Augmented Generation (RAG) platform. Designed to optimize how businesses access and…

How AI is Amplifying Human Potential in Sales and Marketing

Artificial intelligence (AI) is revolutionizing how professionals approach marketing and sales in every sector. By embracing AI, professionals in the field are enhancing efficiency, boosting outcomes and driving faster, more informed decisions. Sales and marketing’s AI evolution signifies not just a shift in tools, but an…

How AI is Redefining Team Dynamics in Collaborative Software Development

While artificial intelligence is transforming various industries worldwide, its impact on software development is especially significant. AI-powered tools are enhancing code quality and efficiency and redefining how teams work together in collaborative environments. As AI continues to evolve, it’s becoming a key player in reconfiguring team…

Artificial intelligence meets “blisk” in new DARPA-funded collaboration

A recent award from the U.S. Defense Advanced Research Projects Agency (DARPA) brings together researchers from Massachusetts Institute of Technology (MIT), Carnegie Mellon University (CMU), and Lehigh University (Lehigh) under the Multiobjective Engineering and Testing of Alloy Structures (METALS) program. The team will research novel design tools for the simultaneous optimization of shape and compositional gradients in multi-material structures that complement new high-throughput materials testing techniques, with particular attention paid to the bladed disk (blisk) geometry commonly found in turbomachinery (including jet and rocket engines) as an exemplary challenge problem.

“This project could have important implications across a wide range of aerospace technologies. Insights from this work may enable more reliable, reusable, rocket engines that will power the next generation of heavy-lift launch vehicles,” says Zachary Cordero, the Esther and Harold E. Edgerton Associate Professor in the MIT Department of Aeronautics and Astronautics (AeroAstro) and the project’s lead principal investigator. “This project merges classical mechanics analyses with cutting-edge generative AI design technologies to unlock the plastic reserve of compositionally graded alloys allowing safe operation in previously inaccessible conditions.”

Different locations in blisks require different thermomechanical properties and performance, such as resistance to creep and low-cycle fatigue, or high strength. Large-scale production also necessitates consideration of cost and sustainability metrics, such as the sourcing and recycling of alloys, in the design.

“Currently, with standard manufacturing and design procedures, one must come up with a single magical material, composition, and processing parameters to meet ‘one part-one material’ constraints,” says Cordero. “Desired properties are also often mutually exclusive prompting inefficient design tradeoffs and compromises.”

Although a one-material approach may be optimal for a single location in a component, it may leave other locations exposed to failure or may require a critical material to be carried throughout an entire part when it is needed only in a specific location. With the rapid advancement of additive manufacturing processes that enable voxel-based composition and property control, the team sees unique opportunities for leap-ahead performance in structural components.

Cordero’s collaborators include Zoltan Spakovszky, the T. Wilson (1953) Professor in Aeronautics in AeroAstro; A. John Hart, the Class of 1922 Professor and head of the Department of Mechanical Engineering; Faez Ahmed, ABS Career Development Assistant Professor of mechanical engineering at MIT; S. Mohadeseh Taheri-Mousavi, assistant professor of materials science and engineering at CMU; and Natasha Vermaak, associate professor of mechanical engineering and mechanics at Lehigh.

The team’s expertise spans hybrid integrated computational material engineering and machine-learning-based material and process design, precision instrumentation, metrology, topology optimization, deep generative modeling, additive manufacturing, materials characterization, thermostructural analysis, and turbomachinery.

“It is especially rewarding to work with the graduate students and postdoctoral researchers collaborating on the METALS project, spanning from developing new computational approaches to building test rigs operating under extreme conditions,” says Hart. “It is a truly unique opportunity to build breakthrough capabilities that could underlie propulsion systems of the future, leveraging digital design and manufacturing technologies.”

This research is funded by DARPA under contract HR00112420303. The views, opinions, and/or findings expressed are those of the author and should not be interpreted as representing the official views or policies of the Department of Defense or the U.S. government and no official endorsement should be inferred.

Study finds mercury pollution from human activities is declining

MIT researchers have some good environmental news: Mercury emissions from human activity have been declining over the past two decades, despite global emissions inventories that indicate otherwise.

In a new study, the researchers analyzed measurements from all available monitoring stations in the Northern Hemisphere and found that atmospheric concentrations of mercury declined by about 10 percent between 2005 and 2020.

They used two separate modeling methods to determine what is driving that trend. Both techniques pointed to a decline in mercury emissions from human activity as the most likely cause.

Global inventories, on the other hand, have reported opposite trends. These inventories estimate atmospheric emissions using models that incorporate average emission rates of polluting activities and the scale of these activities worldwide.

“Our work shows that it is very important to learn from actual, on-the-ground data to try and improve our models and these emissions estimates. This is very relevant for policy because, if we are not able to accurately estimate past mercury emissions, how are we going to predict how mercury pollution will evolve in the future?” says Ari Feinberg, a former postdoc in the Institute for Data, Systems, and Society (IDSS) and lead author of the study.

The new results could help inform scientists who are embarking on a collaborative, global effort to evaluate pollution models and develop a more in-depth understanding of what drives global atmospheric concentrations of mercury.

However, due to a lack of data from global monitoring stations and limitations in the scientific understanding of mercury pollution, the researchers couldn’t pinpoint a definitive reason for the mismatch between the inventories and the recorded measurements.

“It seems like mercury emissions are moving in the right direction, and could continue to do so, which is heartening to see. But this was as far as we could get with mercury. We need to keep measuring and advancing the science,” adds co-author Noelle Selin, an MIT professor in the IDSS and the Department of Earth, Atmospheric and Planetary Sciences (EAPS).

Feinberg and Selin, his MIT postdoctoral advisor, are joined on the paper by an international team of researchers that contributed atmospheric mercury measurement data and statistical methods to the study. The research appears this week in the Proceedings of the National Academy of Sciences.

Mercury mismatch

The Minamata Convention is a global treaty that aims to cut human-caused emissions of mercury, a potent neurotoxin that enters the atmosphere from sources like coal-fired power plants and small-scale gold mining.

The treaty, which was signed in 2013 and went into force in 2017, is evaluated every five years. The first meeting of its conference of parties coincided with disheartening news reports that said global inventories of mercury emissions, compiled in part from information from national inventories, had increased despite international efforts to reduce them.

This was puzzling news for environmental scientists like Selin. Data from monitoring stations showed atmospheric mercury concentrations declining during the same period.

Bottom-up inventories combine emission factors, such as the amount of mercury that enters the atmosphere when coal mined in a certain region is burned, with estimates of pollution-causing activities, like how much of that coal is burned in power plants.
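As a rough illustration of that bottom-up arithmetic, the sketch below multiplies an emission factor by an activity level for each source and sums the results. The source names and all numbers are hypothetical placeholders chosen for easy arithmetic, not values from the study or from any real inventory.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    emission_factor: float  # grams of mercury per tonne of coal burned (hypothetical)
    activity: float         # tonnes of coal burned per year (hypothetical)


def bottom_up_total(sources):
    """Return total emissions in grams per year: sum of factor * activity."""
    return sum(s.emission_factor * s.activity for s in sources)


# Two made-up sources, purely to show the calculation.
sources = [
    Source("region_a_coal", emission_factor=0.1, activity=1_000_000.0),
    Source("region_b_coal", emission_factor=0.2, activity=500_000.0),
]

total_grams = bottom_up_total(sources)
print(f"Estimated emissions: {total_grams / 1000:.1f} kg per year")
```

Because the total is a product of two estimated quantities per source, an error in either the emission factor or the activity level carries straight through to the inventory.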

“The big question we wanted to answer was: What is actually happening to mercury in the atmosphere and what does that say about anthropogenic emissions over time?” Selin says.

Modeling mercury emissions is especially tricky. First, mercury is the only metal that is in liquid form at room temperature, so it has unique properties. Moreover, mercury that has been removed from the atmosphere by sinks like the ocean or land can be re-emitted later, making it hard to identify primary emission sources.

At the same time, mercury is more difficult to study in laboratory settings than many other air pollutants, especially due to its toxicity, so scientists have limited understanding of all chemical reactions mercury can undergo. There is also a much smaller network of mercury monitoring stations, compared to other polluting gases like methane and nitrous oxide.

“One of the challenges of our study was to come up with statistical methods that can address those data gaps, because available measurements come from different time periods and different measurement networks,” Feinberg says.

Multifaceted models

The researchers compiled data from 51 stations in the Northern Hemisphere. They used statistical techniques to aggregate data from nearby stations, which helped them overcome data gaps and evaluate regional trends.

By combining data from 11 regions, their analysis indicated that Northern Hemisphere atmospheric mercury concentrations declined by about 10 percent between 2005 and 2020.

Then the researchers used two modeling methods — biogeochemical box modeling and chemical transport modeling — to explore possible causes of that decline. Box modeling was used to run hundreds of thousands of simulations to evaluate a wide array of emission scenarios. Chemical transport modeling is more computationally expensive but enables researchers to assess the impacts of meteorology and spatial variations on trends in selected scenarios.
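To give a sense of what a box model does, here is a minimal single-box sketch, assuming the atmosphere is one well-mixed reservoir with emissions E and first-order removal at rate k, so that dM/dt = E - kM. This is a deliberately simplified stand-in, not the researchers' biogeochemical model; the function name, rate constant, and emission values are all illustrative assumptions.

```python
def simulate_one_box(initial_burden, emissions_per_year, loss_rate, steps_per_year=100):
    """Integrate dM/dt = E(t) - k*M with forward Euler.

    Returns the atmospheric burden at the end of each simulated year.
    All quantities are in arbitrary, hypothetical units.
    """
    burden = initial_burden
    dt = 1.0 / steps_per_year
    history = []
    for emission in emissions_per_year:
        for _ in range(steps_per_year):
            burden += dt * (emission - loss_rate * burden)
        history.append(burden)
    return history


k = 2.0     # removal rate per year (hypothetical)
years = 15

# Scenario 1: constant emissions, started at the steady state M = E / k.
constant = simulate_one_box(50.0, [100.0] * years, k)

# Scenario 2: emissions fall by one unit each year.
declining = simulate_one_box(50.0, [100.0 - y for y in range(years)], k)

change = 100.0 * (declining[-1] - constant[-1]) / constant[-1]
print(f"Burden change under declining emissions: {change:.1f}%")
```

A model this cheap can be rerun across a very large number of emission scenarios, which is the trade-off the article describes: breadth of scenarios from the box model, spatial and meteorological detail from the costlier chemical transport model.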

For instance, they tested one hypothesis that there may be an additional environmental sink that is removing more mercury from the atmosphere than previously thought. The models would indicate the feasibility of an unknown sink of that magnitude.

“As we went through each hypothesis systematically, we were pretty surprised that we could really point to declines in anthropogenic emissions as being the most likely cause,” Selin says.

Their work underscores the importance of long-term mercury monitoring stations, Feinberg adds. Many stations the researchers evaluated are no longer operational because of a lack of funding.

While their analysis couldn’t zero in on exactly why the emissions inventories didn’t match up with actual data, they have a few hypotheses.

One possibility is that global inventories are missing key information from certain countries. For instance, the researchers resolved some discrepancies when they used a more detailed regional inventory from China. But there was still a gap between observations and estimates.

They also suspect the discrepancy might be the result of changes in two large sources of mercury that are particularly uncertain: emissions from small-scale gold mining and mercury-containing products.

Small-scale gold mining involves using mercury to extract gold from soil and is often performed in remote parts of developing countries, making it hard to estimate. Yet small-scale gold mining contributes about 40 percent of human-made emissions.

In addition, it’s difficult to determine how long it takes the pollutant to be released into the atmosphere from discarded products like thermometers or scientific equipment.

“We’re not there yet where we can really pinpoint which source is responsible for this discrepancy,” Feinberg says.

In the future, researchers from institutions in multiple countries, including MIT, will collaborate to study and improve the models they use to estimate and evaluate emissions. This research will be influential in helping that project move the needle on monitoring mercury, he says.

This research was funded by the Swiss National Science Foundation, the U.S. National Science Foundation, and the U.S. Environmental Protection Agency.

Apple’s Solution to Translating Gendered Languages

Apple has just published a paper, in collaboration with USC, that explores the machine learning methods employed to give users of its iOS18 operating system more choice about gender when it comes to translation. Though the issues tackled in the work (which Apple has announced here)…

Bubble findings could unlock better electrode and electrolyzer designs

Industrial electrochemical processes that use electrodes to produce fuels and chemical products are hampered by the formation of bubbles that block parts of the electrode surface, reducing the area available for the active reaction. Such blockage reduces the performance of the electrodes by anywhere from 10 to 25 percent.

But new research reveals a decades-long misunderstanding about the extent of that interference. The findings show exactly how the blocking effect works and could lead to new ways of designing electrode surfaces to minimize inefficiencies in these widely used electrochemical processes.

It has long been assumed that the entire area of the electrode shadowed by each bubble would be effectively inactivated. But it turns out that a much smaller area — roughly the area where the bubble actually contacts the surface — is blocked from its electrochemical activity. The new insights could lead directly to new ways of patterning the surfaces to minimize the contact area and improve overall efficiency.
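The size of the gap between the two pictures can be illustrated with simple geometry. The sketch below assumes an idealized bubble shaped like a sphere of radius R cut off by a flat electrode, with contact angle θ at the surface and the sphere's center above the surface, so that the contact circle has radius R·sin θ and the shadow has radius R. That shape, the function names, and the example angle are assumptions made for illustration; they are not taken from the paper.

```python
import math


def shadowed_area(bubble_radius):
    """Area of electrode lying under the bubble's full projected footprint."""
    return math.pi * bubble_radius ** 2


def contact_area(bubble_radius, contact_angle_deg):
    """Area where the idealized bubble actually touches the electrode."""
    contact_radius = bubble_radius * math.sin(math.radians(contact_angle_deg))
    return math.pi * contact_radius ** 2


def blocked_fraction(contact_angle_deg):
    """Contact area divided by shadowed area; independent of bubble size."""
    return math.sin(math.radians(contact_angle_deg)) ** 2


# Hypothetical example: a 30-degree contact angle.
fraction = blocked_fraction(30.0)
print(f"Contact area is {fraction:.0%} of the shadowed area")
```

Under these assumptions, a design that shrinks the contact angle reduces the blocked area even if the bubble itself stays the same size.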

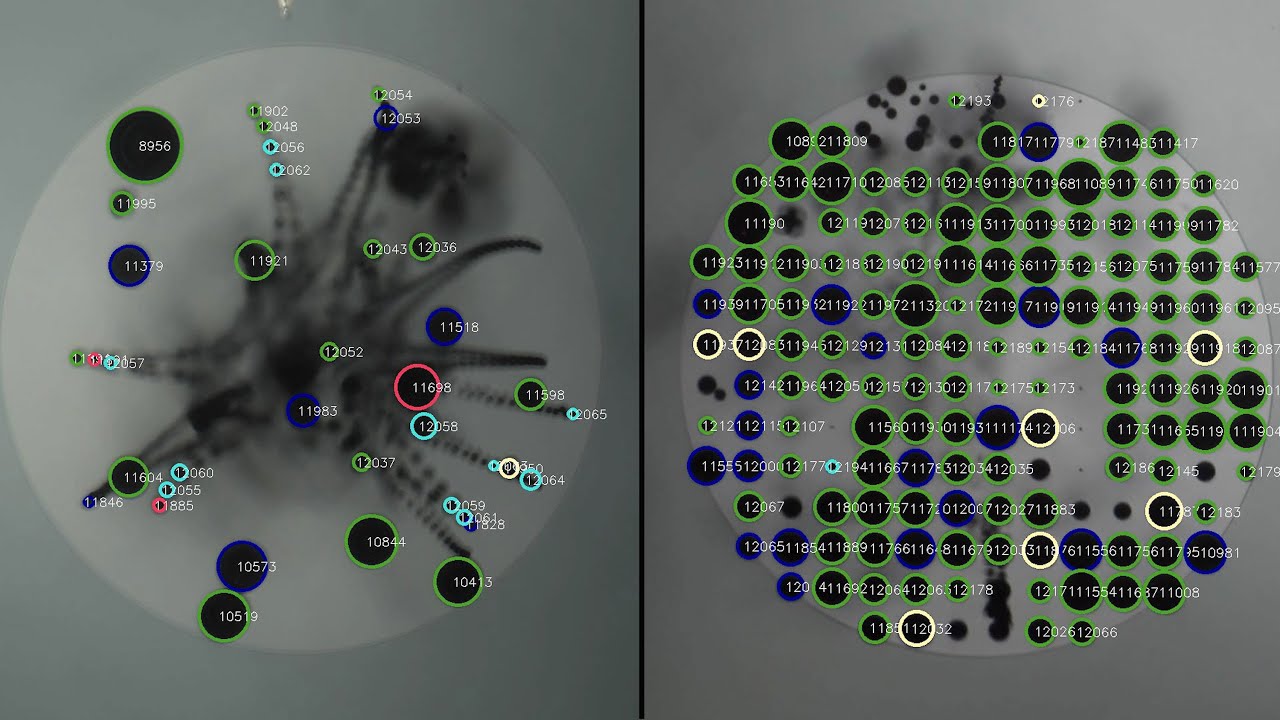

The findings are reported today in the journal Nanoscale, in a paper by recent MIT graduate Jack Lake PhD ’23, graduate student Simon Rufer, professor of mechanical engineering Kripa Varanasi, research scientist Ben Blaiszik, and six others at the University of Chicago and Argonne National Laboratory. The team has made available an open-source, AI-based software tool that engineers and scientists can now use to automatically recognize and quantify bubbles formed on a given surface, as a first step toward controlling the electrode material’s properties.

Gas-evolving electrodes, often with catalytic surfaces that promote chemical reactions, are used in a wide variety of processes, including the production of “green” hydrogen without the use of fossil fuels, carbon-capture processes that can reduce greenhouse gas emissions, aluminum production, and the chlor-alkali process that is used to make widely used chemical products.

These are very widespread processes. The chlor-alkali process alone accounts for 2 percent of all U.S. electricity usage; aluminum production accounts for 3 percent of global electricity; and both carbon capture and hydrogen production are likely to grow rapidly in coming years as the world strives to meet greenhouse-gas reduction targets. So, the new findings could make a real difference, Varanasi says.

“Our work demonstrates that engineering the contact and growth of bubbles on electrodes can have dramatic effects” on how bubbles form and how they leave the surface, he says. “The knowledge that the area under bubbles can be significantly active ushers in a new set of design rules for high-performance electrodes to avoid the deleterious effects of bubbles.”

“The broader literature built over the last couple of decades has suggested that not only that small area of contact but the entire area under the bubble is passivated,” Rufer says. The new study reveals “a significant difference between the two models because it changes how you would develop and design an electrode to minimize these losses.”

To test and demonstrate the implications of this effect, the team produced different versions of electrode surfaces with patterns of dots that nucleated and trapped bubbles at different sizes and spacings. They were able to show that surfaces with widely spaced dots promoted large bubble sizes but only tiny areas of surface contact, which helped to make clear the difference between the expected and actual effects of bubble coverage.

Developing the software to detect and quantify bubble formation was necessary for the team’s analysis, Rufer explains. “We wanted to collect a lot of data and look at a lot of different electrodes and different reactions and different bubbles, and they all look slightly different,” he says. Creating a program that could deal with different materials and different lighting and reliably identify and track the bubbles was a tricky process, and machine learning was key to making it work, he says.

Using that tool, he says, they were able to collect “really significant amounts of data about the bubbles on a surface, where they are, how big they are, how fast they’re growing, all these different things.” The tool is now freely available for anyone to use via the GitHub repository.

By using that tool to correlate the visual measures of bubble formation and evolution with electrical measurements of the electrode’s performance, the researchers were able to disprove the accepted theory and to show that only the area of direct contact is affected. Videos further proved the point, revealing new bubbles actively evolving directly under parts of a larger bubble.

The researchers developed a general methodology that can be applied to characterize and understand the impact of bubbles on any electrode or catalyst surface. They were able to quantify the bubble passivation effects in a new performance metric they call BECSA (bubble-induced electrochemically active surface), as opposed to ECSA (electrochemically active surface area), the metric used in the field. “The BECSA metric was a concept we defined in an earlier study but did not have an effective method to estimate until this work,” says Varanasi.

The knowledge that the area under bubbles can be significantly active ushers in a new set of design rules for high-performance electrodes. This means that electrode designers should seek to minimize bubble contact area rather than simply bubble coverage, which can be achieved by controlling the morphology and chemistry of the electrodes. Surfaces engineered to control bubbles can not only improve the overall efficiency of the processes and thus reduce energy use, they can also save on upfront materials costs. Many of these gas-evolving electrodes are coated with catalysts made of expensive metals like platinum or iridium, and the findings from this work can be used to engineer electrodes to reduce material wasted by reaction-blocking bubbles.

Varanasi says that “the insights from this work could inspire new electrode architectures that not only reduce the usage of precious materials, but also improve the overall electrolyzer performance,” both of which would provide large-scale environmental benefits.

The research team included Jim James, Nathan Pruyne, Aristana Scourtas, Marcus Schwarting, Aadit Ambalkar, Ian Foster, and Ben Blaiszik at the University of Chicago and Argonne National Laboratory. The work was supported by the U.S. Department of Energy under the ARPA-E program.

Edge 437: Inside BlackMamba, One of the Most Important SSM Models Ever Created

The model combines SSMs and MoEs in a single architecture…

Have we been duped or dumped: Is AI here to stay?

Are we being duped into believing AI is scalable enough to solve all of our problems? Or will it stand the test of time, proving itself sustainable to humanity and our evolving world?…