
50+ Best Photoshop Actions for Stunning Art Effects

Editing photos can be time-consuming, but Photoshop actions can streamline your workflow and produce stunning, professional results quickly.

To help you find the best options, we’ve curated a collection of art-inspired Photoshop actions to transform your photos. Whether you’re a digital designer, illustrator, or photographer, these actions will add creativity and style to your work.

Our selection includes effects to suit every taste, from pencil sketches and watercolors to modern and pop art. These actions turn your photos into works of art that echo traditional painting and drawing techniques.

With just a few clicks, you can convert ordinary photos and graphics into oil paintings or create the nostalgic feel of vintage film. With these actions, you can have the artistic results you’ve always wanted!

The Top Art Effect Photoshop Actions for Creatives

Enamel Pin Photoshop Action

This Photoshop action turns photos into fun, colorful metal pins. With three metal colors to choose from, you have plenty of options here. This action works with text layers, vector shapes, and much more.

Cartoon Paint Photoshop Action

A cartoon action like this transforms everyday photos into fantastic artwork, adding a stylish aesthetic to your favorite shots. For the best results, use photos measuring 2000 × 4000 px. It’s quick and easy to use, requiring no artistic talent!

Pencil Sketch Art Photoshop Action

Pencil sketches are a creative way to illustrate. This Photoshop action set lets you quickly add a sketching style to your photos. The set also includes brushes and patterns for added effects.

GlowArt Photoshop Action

Are you looking for a funky, contemporary feel in your photography? GlowArt is the Photoshop action for you. With a single click, it will add a vibrant glow to any photo.

Vintage Art Photoshop Action

Vintage effects add a timeless aesthetic to your favorite photos. They never go out of style and work well with portraits and landscapes. By applying this action, you will see your photos transform before your eyes.

Photo Art Gaming Photoshop Action

These Photoshop actions will style your images and graphics to look like video game covers. They’re especially useful for gamers sharing their artwork on social media. The pack also includes pattern sets.

Creative Art Photoshop Action

For an abstract effect, the Creative Art Photoshop action is hard to beat. It is well-suited for action and sports photos. With a few clicks, you’ll create stunning effects that almost seem to be in motion.

Vintage Effect Photoshop Action

Here is another Photoshop action with vintage styling. It converts photos to a faded, sepia tone and adds retro hues to every image you edit.

Movie Poster Art Photoshop Action

Movie posters have a unique style. With these Photoshop actions, you can bring that style to your favorite photos. Prepare to see colors pop and contrasts sharpen when you apply these effects.

Cyborg Photoshop Action

The Cyborg Photoshop actions offer a sci-fi effect for portrait photos. With two actions included, you can choose your preferred character design. The pack also includes Photoshop brushes and various color options.

Halloween Photoshop Action

Are you searching for spooky effects for your favorite Halloween photos? This is a purpose-built action pack for scary photos. Choose from various resolution options while editing. It features HDR effects that boost colors and contrast, and you can apply it with just a single click.

Sport Poster Effect Photoshop Action

Designed for sports posters, this Photoshop action adds a starry red backdrop. It works best for subjects that are in motion. The modern styling applies instantly, with geometric elements dropping into place. It’s a futuristic design for all your sports photos.

Art Work Photoshop Action

The Art Work Photoshop action pack helps the subjects of images stand out. With this pack, you’ll see backgrounds disappear, replaced with an abstract 3D concrete layout.

Posterize Effect Photoshop Action

With this Photoshop action pack, you can create elegant poster designs. With a single click, any portrait photo can be transformed into a stunning, posterized graphic.

Grunge Portrait Photoshop Action

The effect these Photoshop actions produce will turn any portrait into a decolorized, hand-drawn work of art. Brush and action files are included in the download pack. This action works best with portrait-style photos.

Old Newspaper Photoshop Action

Old newspapers have an unmistakable look and feel, from the typesetting to the slightly blurry photos. With this Photoshop action pack, you can bring this aesthetic to your photos. For maximum impact, wider photos are recommended.

Watercolor Photoshop Action

Watercolor artwork is a popular style, especially for landscape photos. With these actions, you can apply watercolor styling to any photo with a single click. Though designed for portraits, these actions will work on any image.

Plastic Wrapped Overlay Photoshop Action

The Plastic Wrapped Overlay Photoshop action pack is ideal for portrait subjects with a neutral yet stylish backdrop. This pack focuses on color contrasts. With a single click, you can apply a solid 3D background to the portrait photo of your choice.

Crack Stone Photoshop Action

These actions will add a cracked stone effect to your photos and graphics. They work with portraits and landscapes alike. It’s not a style you’ll likely use every day, but it’s handy to have in your editing toolbox. Multiple files are included, with documentation available. Single-click editing makes the process a breeze from start to finish.

Mirror Effect Photoshop Action

Mirror effects are powerful edits that work well with many types of photos. With these actions, you’ll see mirrored rings around your portrait subjects. It’s a sure way to help your photos get a second look from casual viewers.

Engraved Money Photoshop Action

Think of the portraits you’ve seen on printed money. Remember the iconic green stripes and portrait effects? You can add the effect to your photos with these custom actions. Use them to create fun designs for posters, blog posts, social media, and more.

Aquarelle Photoshop Action

Aquarelle is an artistic option for adding abstract watercolor effects to your images. With these color presets, you can easily tailor a photo’s palette to fit your style.

Sketch Painting Photoshop Action

Sketch paintings are a great way to be creative. Apply their aesthetic to the photos of your choice by using this Photoshop action pack. The actions work with just a single click and are well-suited to editors of all skill levels. You can create spectacular artwork in moments.

Pixel Sorting Photoshop Action

Pixel effects give the illusion of motion in still images. The Pixel Sorting Photoshop action pack allows you to add this effect to your top shots. This effect works best when applied to an action photo.

Fake Blueprint Photoshop Action

Have you ever wanted to turn your photo collection into blueprint designs? Now you can, with these Photoshop actions. It’s a terrific way to create modern, geometric designs. Blueprint images are artsy and add a dose of contemporary cool to any wall or workspace.

Code Art Photoshop Actions

Code Art is an excellent choice for adding coding effects to your photographs. It works with portraits and full-body photos and includes ten color effects. Apply the action, and your image file will be layered and organized accordingly. This one’s a real time-saver.

Pencil Art Photoshop Actions

This action pack replicates the look of a pencil drawing in your photographs. Once the action generates the graphical elements, you can adjust them to suit your needs. It is non-destructive and includes six paper textures, eight color corrections, 28 brushes, and 14 patterns.

Mixed Art Photoshop Action

Another great option is the Mixed Art action pack. This Photoshop action can be applied with just one click, resulting in a look that replicates a watercolor painting.

Retro Art Photoshop Action

If you want to add a retro aesthetic to your photos, you’ll want to add the Retro Art Photoshop action kit to your toolbox. It adds an exciting artistic effect to your photos.

Chroma Art Photoshop Action

The Chroma Art Photoshop Action makes it easy to transform your photos into pieces that look like mixed-media artwork. It also comes with ten color presets that can be applied with a single click.

Drawing Art Animation Photoshop Action

The Drawing Art Animation Photoshop action adds a cool effect to your photographs. It is a time-saver that makes it easy to give your images the look of a hand-drawn piece of art.

Grunge Art Photoshop Action

This Grunge Art Photoshop action adds a dark and gritty look to your photographs. With a few clicks, you can give your images a moody look with minimal effort. The action works reliably, but the results vary from run to run, so each effect is truly unique.

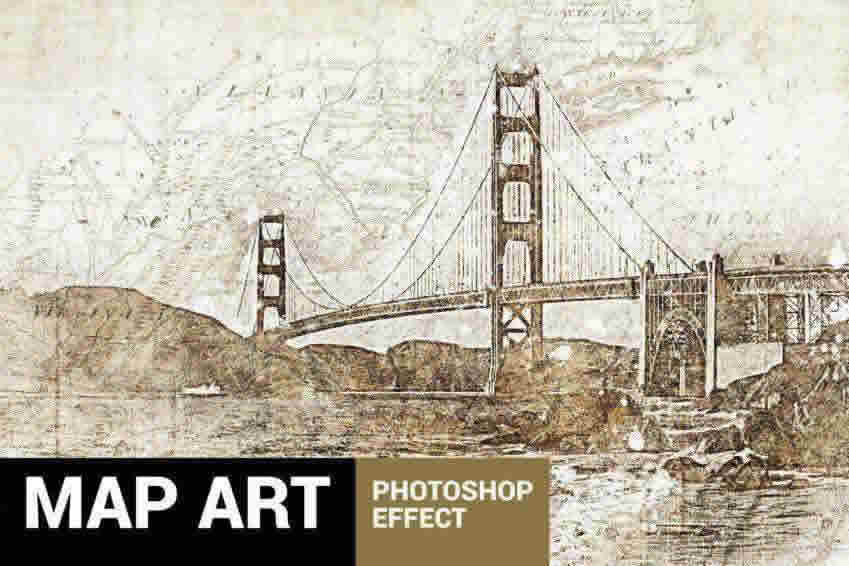

Piratum Map Art Photoshop Action

The Piratum Map Art Photoshop action offers a distinctive effect. When applied to your photos, it turns the image into a vintage-looking graphic, like a hand-drawn map. The result is an old-style graphic with tons of geographical details.

Sketch Art Photoshop Action

The Sketch Art Photoshop Action transforms your photo into a realistic painting while keeping the underlying sketch visible. It automatically creates all the necessary layers, which can be adjusted manually. The download includes ten brushes, five textures, and a quick start guide.

Spray Paint Photoshop Action

The Spray Paint Photoshop action is a set of 10 different effects you can apply to your photos immediately. Each effect creates the look of spray paint art. The result mimics edgy street art.

Pixel Art Photoshop Action

The Pixel Art Photoshop Action is another fantastic choice for your creative projects. It adds a retro, pixelated appearance to any image you apply it to. The result is a photo that looks like it came from the 1980s or 90s. After applying it, you can adjust individual layers and gradient masks to refine the look further.

Blood Art Photoshop Action

The Blood Art Photoshop Action will turn any photo you choose into a moody and distinct artwork. Once applied, it adds a sort of cloudy blood effect. It comes with ten color effects.

Pixel Art Photoshop Actions

Another option is the Pixel Art Photoshop Action. This action turns your photo into a retro-looking image that looks like it came from a vintage video game. It comes with 21 actions, 32 tones, various color palettes, and more.

Ink Art Photoshop Action

The Ink Art Photoshop Action turns your photos into something that looks like it’s been hand-drawn. The result mimics Sumi-e-style ink wash artwork. After you apply the effect, you can tweak various settings to customize the outcome.

Street Art Photoshop Action

You might also want to consider the Street Art Photoshop Action. As its name would suggest, this action transforms your images into street art. It works best with portraits and inanimate objects, and the result makes it appear as though a graffiti artist had drawn parts of the photo.

Modern Art Photoshop Action

Another option is this Modern Art Photoshop action pack. This action combines the look of real photography with watercolor and acrylic paint effects, creating a truly unique look. To use it, all you need to do is fill the subject of your photo with a color and then click play on the action.

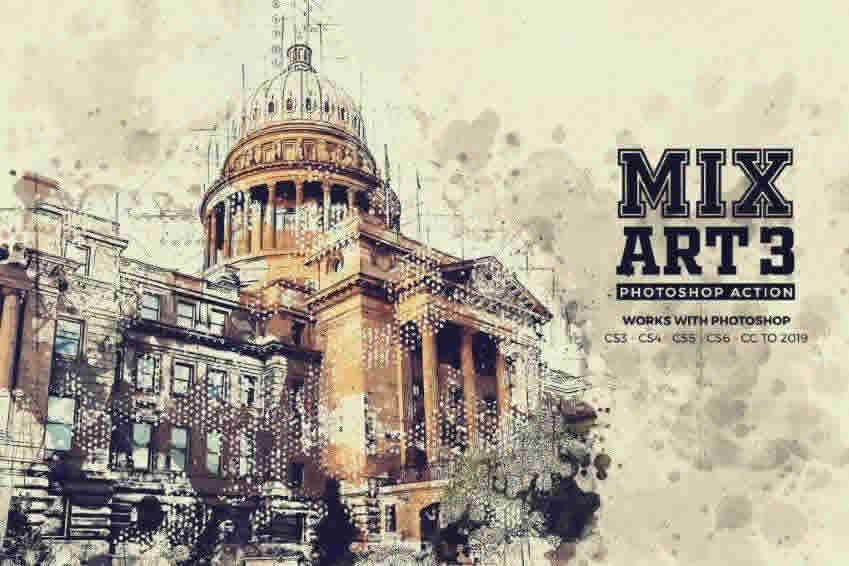

Mix Art Photoshop Action

As its name suggests, the Mix Art Photoshop Action pack makes it easy to turn ordinary photos into pieces of mixed-media art. It comes with ten color presets, is easy to use, and allows for extra customization once applied.

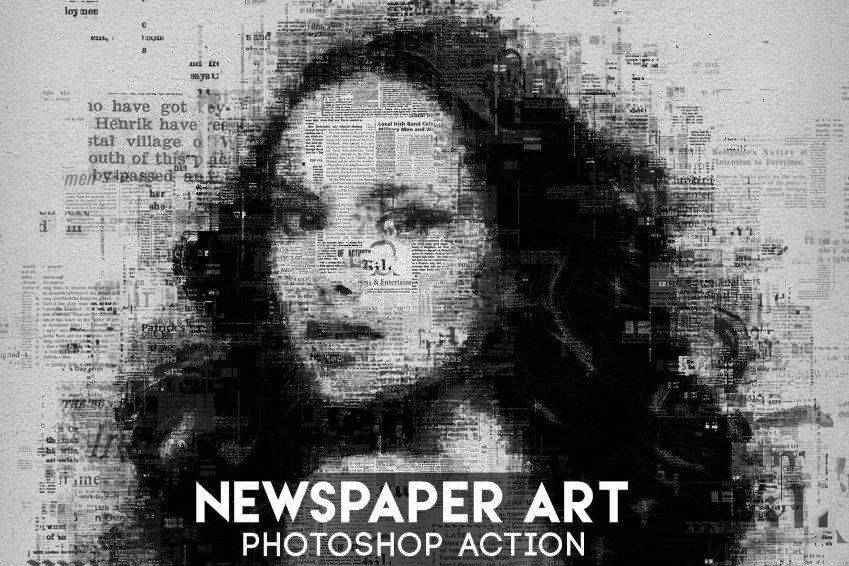

Newspaper Art Photoshop Action

Give your images the appearance of newspaper print with the Newspaper Art Photoshop Action. It will turn your image into an abstract piece of art by adding letters and tidbits of newspaper graphics into geometric patterns that make up the subject of your photo.

Digital Art Photoshop Action

What an excellent effect this action creates! The Digital Art Photoshop Action turns your photographs into images that look as though they’ve been hand-painted. The result is highly realistic, with all the textures expected from an oil or acrylic painting. It works best on portraits and inanimate objects and can be customized to suit your needs.

Oil Paint Animation Photoshop Action

Instead of endlessly adjusting settings, use the Oil Paint Animation Photoshop action set to give your photographs the appearance of being manually painted.

Vintage Art Photoshop Action

This Photoshop action set combines splashes, brushes, and colors to create an artistic vintage look. It’s easy to apply and creates a professional look with minimal effort.

Street Art Photoshop Action

Here’s another action that brings the look of street art to your photographs. It includes 11 graffiti tags, five rugged wall styles, and ten color effects to create many different looks.

Concept Mix Art Photoshop Action

The Concept Mix Art Photoshop Action would be an excellent addition to your collection. It turns your photos into mixed media pieces with the look of watercolor splashes and pen marks. Use it on portraits, figures, and inanimate objects.

Fine Art Mobile Photoshop Action (and Lightroom Presets)

The Fine Art Mobile Photoshop Action comes with 11 different presets, making it easy to give your photos a modern look.

Watercolor Splash Art Photoshop Action

Last in our collection is the Watercolor Splash Art Photoshop Action. This set gives your photo a watercolor look with plenty of splatter effects. Use it on any image, and customize the final layers the action creates to match your style.

How to Install Photoshop Actions

- Download and unzip the action file.

- Launch Photoshop.

- Go to Window > Actions.

- Select Load Actions from the Actions panel menu, then navigate to the folder where you saved the unzipped action file and select it. The action will now be installed.

- To use the newly installed action, locate it in the Actions panel.

- Click the triangle to the left of the action set name to see the list of available actions.

- Click the action you want to play and press the play button at the bottom of the Actions panel.

Art Effect Photoshop Action FAQs

- What are art effect Photoshop actions?

Art effect actions are preset edits for Photoshop that add various artistic styles to your photos.

- Are these actions compatible with all versions of Photoshop?

Most actions are designed to work with a wide range of Photoshop versions, but it’s advisable to check compatibility with your specific version.

- Can I apply these actions to different types of image files, such as RAW or JPEG?

These actions can be used with various image file types, including RAW and JPEG. However, results may vary depending on the file format.

- Are these actions suitable for both digital and traditional art styles?

These art effect actions can enhance photos in ways that suit both digital and traditional artistic styles, offering versatility to artists.

- Can I customize or combine multiple actions to create unique effects?

Certainly! You can adjust opacity and layer blending modes, and even combine multiple actions, to achieve customized and distinctive artistic results.

Conclusion

These Photoshop actions offer a wide range of artistic possibilities, from turning a photo into a watercolor painting to giving it the appearance of an oil canvas.

These actions control elements like color, texture, and style but leave plenty of room for your own creative touches. Enjoy!

Related Topics

Protecting your brand in the age of AI – CyberTalk

Mark Dargin is an experienced security and network architect/leader. He is a Senior Strategic Security Advisor, advising Fortune 500 organizations for Optiv, the largest pure-play security risk advisory organization in North America. He is also an Information Security & Assurance instructor at Schoolcraft College in Michigan. Mark holds an MS degree in Business Information Technology from Walsh College and has had dozens of articles published in the computing press. He holds various active certifications, including the CRISC, CISSP, CCSP, PMP, GIAC GMON, GIAC GNFA, Certified Blockchain Expert, and many other vendor-related certifications.

In this timely and relevant interview, Senior Strategic Security Advisor for Optiv, Mark Dargin, shares insights into why organizations must elevate brand protection strategies, how to leverage AI for brand protection and how to protect a brand from AI-based threats. It’s all here!

1. For our audience members who are unfamiliar, perhaps share a bit about why this topic is of increasing relevance, please?

The internet is now the primary platform used for commerce. This makes it much easier for brand impersonators and counterfeiters to achieve their goals. As a result, security and brand protection are essential. According to the U.S. Chamber of Commerce, product counterfeiting costs the global economy over $500 billion each year.

The use of emerging technologies such as artificial intelligence (AI) and deepfake videos to create brand impersonations has increased significantly. AI software can imitate exact designs and brand styles. Deepfake videos are also occasionally used to imitate a brand’s spokesperson, which can lead to fraudulent endorsements.

Large language models (LLMs), such as ChatGPT, can also be used to automate phishing attacks that spoof well-known brands. I expect phishing attacks that spoof brand names to increase significantly in sophistication and quantity over the next several years. It is essential to stay ahead of technological advancements for brand protection purposes.

2. How can artificial intelligence elevate brand protection/product security? What specific challenges does AI address that other technologies struggle with?

Performing manual investigations for brand protection can require a lot of time and resources to manage effectively. It can significantly increase the cost for an organization.

AI is revolutionizing brand protection by analyzing vast quantities of data and identifying threats like online scams and counterfeit products. This allows brands to shift from reacting to threats to proactively safeguarding their reputation.

AI can increase the speed of identifying brand spoofing attacks and counterfeiting. Also, it can dramatically shorten the time from detection to enforcement by intelligently automating the review process and automatically offering a law enforcement recommendation.

For example, if a business can identify an online counterfeiter one month after the counterfeiter started selling counterfeit goods vs. six months later, then that can have a significant, positive impact on an organization’s revenue.

3. In your experience, what are the most common misconceptions or concerns that clients express regarding the integration of AI into brand protection strategies? How do you address these concerns?

If used correctly, AI can be very beneficial for organizations in running brand protection programs. AI technologies can help to track IP assets and identify infringers or copyright issues. It is important to note that AI is an excellent complement to, but cannot fully replace, human advisors.

There are concerns amongst security and brand protection leaders that AI will cause their investigative teams to rely solely on AI solutions vs. using human intuition. While tools are important, humans must also spend an adequate amount of time outside of the tools to identify bad actors, because AI tools are not going to catch everything. Also, staff must take the time to ensure that the information sent to the tool is correct and within the scope of what is required. The same goes for the configuration of settings. At a minimum, a quarterly review should be completed for any tools or solutions that are deployed.

Leaders must ensure that employees do not rely solely on AI-based tools and continue to use human intuition when analyzing data or identifying suspicious patterns or behaviors. Consistent reminders and employee training can help in this ongoing process.

Training in identifying and reporting malicious use of the brand name and counterfeiting should be provided to all employees. It is not just the security team that is responsible for protecting the brand; all employees should be part of this ongoing plan.

4. Can AI-based brand protection account for regional, local or otherwise business-specific nuances related to brand protection and product security? Ex. What if an organization offers slightly different products in different consumer markets?

Yes, AI brand protection solutions can account for these nuances. Many organizations in the same industry are working together to develop AI-based solutions to better protect their products. For example, Swift has announced two AI-based experiments, in collaboration with various member banks, to explore how AI could assist in combating cross-border payments fraud and save the industry billions in fraud-related costs.

We will continue to see organizations collaborate to develop industry-specific AI strategies for brand protection based on the different products and services offered. This is beneficial because attackers will, at times, target specific industries with similar tactics. Organizations need to account for this. Collaboration will help with protection measures, even in simply deciding on which protection measures to invest in most heavily.

5. Reflecting on your interactions with clients who are exploring AI solutions for brand protection, what are the key factors that influence their decision-making process? (ex. Budget, organizational culture, perceived ROI).

From my experience, the key factor that influences decision-making is the perceived return on investment (ROI). Once the benefits and ROI are explained to leaders, it is less difficult to obtain a budget for investing in an AI brand protection solution. Many organizations are concerned about their brand name being used inappropriately on the dark web, and this can hurt an organization’s reputation. Also, I have found that AI security solutions that can help an organization achieve compliance with PCI, GDPR, HITRUST, etc., are more likely to receive approval and support from the board.

Building a culture of trust should not begin when change is being implemented, but rather in a much earlier phase of planning or deciding on which changes need to be made. If an organization has a culture that is not innovative, or leaders who do not train employees properly on using AI security tools or who are not transparent about their risks, then any investment in AI will face increased challenges.

AI’s sophistication means it can reduce the time that individuals and teams spend on investigations while expanding their scope of responsibility, enabling them to focus on other meaningful tasks. Investigations that were once mundane become more interesting due to the increased number of unique findings that AI is able to provide.

Due to the time saved by using AI in identifying attacks, investigators will have more time to pursue legal action, ensuring that threat actors or brand impersonators are given legal warnings or charged with a crime. This can potentially discourage the recurrence of an attack from the specific source that receives the warning.

6. Could you share insights from your experience integrating AI technologies designed for brand protection into comprehensive cyber security frameworks? Lessons learned or recommendations for CISOs?

Security and brand protection leaders are seeing criminals use artificial intelligence to attack or impersonate brand names. Leaders can stay ahead of those threats by operationalizing the NIST AI Risk Management Framework (AI RMF) and by mapping, measuring, and managing AI security risks. Going forward, the fight is AI vs. AI. It is just as important to document and manage the risks of implementing AI as it is to document the risk of attackers using AI to attack your brand name or products.

Leaders need to start preparing their workforce to see AI tools as an augmentation rather than a substitution. Whether people realize it or not, AI is already a part of our daily lives, from social media, to smartphones, to spell check, to Google searches.

Tasks that were once challenging can now be done much faster and more efficiently with the help of AI. I am seeing more leaders who are motivated to educate security teams on the potential uses of AI for protecting the brand and preventing brand-based spoofing attacks. I see this in the increased investments they are making in AI-capable security solutions.

7. Would you like to speak to Optiv’s partnership with Check Point in relation to using AI-based technologies for brand protection/product security? The value there?

Attackers target brands from reputable companies because they are confident that these companies have a solid reputation for trustworthiness. Cyber criminals also know that it is difficult for companies, even large companies, to stop such brand impersonations by themselves, if they do not have appropriate tools to aid them.

Optiv and Check Point have had a strong partnership over many years. Check Point has a comprehensive set of AI solutions that I had the luxury of testing at the CPX event this year. Check Point offers a Zero-Phishing AI engine that can block potential brand spoofing attempts, which impersonate local and global brands across multiple languages and countries. It uses machine learning, natural language processing, and image processing to detect brand spoofing attempts. This gives security administrators more time to focus on other security-related tasks, and the engine can alert them when something suspicious occurs within the environment.

The value in using AI solutions from vendors such as Check Point is the reduction in time spent detecting attacks and preventing attacks. In effect, this can empower organizations to focus on the business of increasing sales.

8. Can you share examples of KPIs/metrics that executives should track to measure the effectiveness of AI-powered brand protection initiatives and demonstrate ROI to stakeholders?

Generative AI projects concerning brand protection should be adaptable to specific threats that organizations may have within their environments at specific times. KPIs related to adaptability and customization might include the ease of fine-tuning models, or the adaptability of protection safeguards based on a specific input. The more customizable the generative AI project is, the better it can align with your specific protection needs, based on the assessed threats.

Organizations need to measure KPIs for the AI brand protection solutions that they have deployed. They should track how many attacks are prevented, how many are detected, and how many are successful. These reports should be reviewed at least monthly, and trends should be identified. For example, if successful attacks increase over a span of three months, that would be a concern. If the number of attempted attacks decreases, that could also warrant a closer look. In such cases, investigate to ensure that your tools are still working correctly and are not missing other attempted attacks.
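The monthly trend check described above can be sketched in a few lines of Python. This is a minimal illustration, not part of any vendor product: it assumes you already export monthly counts of attempted, prevented, detected, and successful attacks, and the `MonthlyKpi` record, `review_trends` function, and the sample numbers are all hypothetical. It flags the two warning signs from the answer: successful attacks rising in each of the last three monthly reports, and attempted attacks falling across the same window.

```python
from dataclasses import dataclass


@dataclass
class MonthlyKpi:
    """Hypothetical monthly roll-up of brand-protection metrics."""
    month: str        # label for the reporting period, e.g. "2024-03"
    attempted: int    # attack attempts seen by the tooling
    prevented: int    # attempts blocked automatically
    detected: int     # attempts flagged for investigation
    successful: int   # attacks that got through


def strictly_rising(values):
    """True if each value is larger than the one before it."""
    return all(b > a for a, b in zip(values, values[1:]))


def strictly_falling(values):
    """True if each value is smaller than the one before it."""
    return all(b < a for a, b in zip(values, values[1:]))


def review_trends(reports, window=3):
    """Return warning messages for the most recent `window` monthly reports.

    Assumes `reports` is sorted from oldest to newest.
    """
    if len(reports) < window:
        return []  # not enough history to judge a trend
    recent = reports[-window:]
    span = f"{recent[0].month} to {recent[-1].month}"
    warnings = []
    if strictly_rising([r.successful for r in recent]):
        warnings.append(f"Successful attacks rose every month from {span}.")
    if strictly_falling([r.attempted for r in recent]):
        warnings.append(
            f"Attempted attacks fell every month from {span}; "
            "confirm the tools are still detecting attempts."
        )
    return warnings


if __name__ == "__main__":
    # Made-up numbers, purely to exercise the checks.
    history = [
        MonthlyKpi("2024-01", attempted=120, prevented=100, detected=15, successful=2),
        MonthlyKpi("2024-02", attempted=110, prevented=90, detected=14, successful=3),
        MonthlyKpi("2024-03", attempted=95, prevented=75, detected=12, successful=5),
    ]
    for warning in review_trends(history):
        print(warning)
```

Requiring a strict month-over-month change keeps the check conservative; a real program would likely also look at rates (for example, successful attacks as a share of attempts) rather than raw counts alone.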

9. In looking ahead, what emerging AI-driven technologies or advancements do you anticipate will reshape the landscape of brand protection and product security in the near future? How should organizations prepare? What recommendations are you giving to your clients?

Attackers will be increasing their use of AI to generate large-scale attacks. Organizations need to be prepared for these attacks by having the right policies, procedures, and tools in place to prevent or reduce the impact. Organizations should continually analyze the risk they face from AI brand impersonation attacks using NIST or other risk-based frameworks.

Security and brand leaders should perform a risk assessment before recommending specific tools or solutions to business units, because this helps ensure they have the support needed for a successful deployment. It also increases the chance that any unexpected expenses related to the deployment will be approved.

I expect that there will be an increase in the collaboration between brands and AI-capable eCommerce platforms to jointly combat unauthorized selling and sharing of data and insights, leading to more effective enforcement. When it comes to brand protection, this will set the stage for more proactive and preventative approaches in the future, and I encourage more businesses to collaborate on these joint projects.

Blockchain technologies can complement AI in protecting brands, with their ability to provide security and transparent authentication. I expect that blockchain will be utilized more in the future in helping brands and consumers verify the legitimacy of a product.

10. Is there anything else that you would like to share with our executive-level audience?

As the issue of brand protection gains prominence, I expect that there will be regulatory changes and the establishment of global standards aimed at protecting brands and consumers from unauthorized reselling activities. Organizations need to stay on top of these changes, especially as the number of brand attacks and impersonations is expected to increase in the future. AI and the data behind it are going to continue to be important factors in protecting brand names and protecting businesses from brand-based spoofing attacks.

It is essential to embrace innovation and collaboration in brand protection and to ensure that authenticity and integrity prevail, given the various threats that organizations face. Let’s be clear: no single solution will solve every problem related to brand protection. Rather, various technologies, combined with human intuition, strong leadership, solid processes, and collaboratively created procedures, are the keys to increased protection.


Atomos Ninja Ultra with Connect Simplifies Remote Production – Videoguys

Simplified Remote Production with Tones and Colours

Tones and Colours, a leading Sri Lankan media production company, is renowned for delivering visually stunning projects. In their quest to enhance workflow efficiency, they integrated the Ninja Ultra with Atomos Connect into their production setup.

Streamlining Workflow with Ninja Ultra

During a commercial shoot for fashion outlet Hameedia, the team utilized the Ninja Ultra as a primary viewing tool alongside the director’s monitor. The Ninja’s bright, high-definition display and built-in monitoring tools enabled Shenick Tissera, the creative director, to make real-time adjustments. “It was a hectic shoot where every moment mattered. The Ninja allowed me to monitor exposure levels accurately and use our LUTs for a final look preview,” he stated.

ProRes RAW for High-Quality Recording

Tasked with creating a launch video for the Sri Lankan cricket jersey by fashion brand Moose, the team employed a RED Komodo camera and a Sony FX3 connected to the Ninja Ultra. This setup allowed them to record in ProRes RAW, capturing two high-quality angles within a tight six-hour deadline. “Time management was crucial, and ProRes RAW helped us match the colours between the cameras quickly,” Shenick added.

Enhanced Image Quality and Productivity

Recording in ProRes RAW significantly improved image quality, boosted on-set productivity, and minimized post-production time. Cinematographer Shadim Sadiq praised ProRes for capturing intricate details and enhancing colour grading during post-production. “ProRes allowed us to push the colours further, capturing as much detail as possible,” he said.

Efficient Post-Production with Camera to Cloud

The Ninja Ultra, combined with the Atomos Connect module, revolutionized the team’s post-production process. Editor Jaden Daniel could remotely access files sent directly to Frame.io via Camera to Cloud. “As a video editor, time is my most valuable resource. This system has saved me tons of time,” Jaden affirmed. This seamless integration enabled real-time editing, ensuring the team could stay ahead even during filming.

Atomos technology has redefined Tones and Colours’ production approach, empowering them to implement their creative vision efficiently. “No matter where my team is in the world, footage transfer is seamless and efficient,” Jaden concluded.

Conclusion

By integrating Ninja Ultra with Atomos Connect, Tones and Colours optimized their workflow, enhanced image quality, and significantly reduced post-production time. This innovative setup has empowered the team to deliver high-quality content more efficiently than ever before.
