The lucky players who took a chance on 2021’s Before Your Eyes were rewarded with a well-written, highly emotional narrative adventure with a unique mechanical twist. Players control a departed soul who relives his entire life while traversing the afterlife, and the game can be played entirely by blinking, using a webcam to capture the player’s eye movements (though the best version is on PlayStation VR2). I loved it, writing in my review, “It’s a concept I’d love to see further explored in a follow-up, and I couldn’t be happier that something like this exists.”

That sentiment was shared by Graham Parkes, who wrote Before Your Eyes and now serves as creative director and writer at Nice Dream. He and Oliver Lewin, co-founder, producer, and studio director at Nice Dream, were part of the team that created the game, Goodbye World Games, itself a loose collective of designers. Goodbye World spent seven years developing Before Your Eyes, a cycle Parkes describes as a winding road of twists, turns, and, in his words, “so many dark days.” By the time it launched, Parkes says he was just happy to get the game out the door. He and Lewin didn’t anticipate how successful it would be, especially with critics. “Honestly, I’m still sometimes kind of shocked by it,” says Parkes.

Before Your Eyes has clearly resonated with players. Lewin reveals that a university student recently contacted him to say they had created a musical theater adaptation of the game for their school. “We still see so many people kind of contributing their own creativity and imagination to it,” says Lewin. “And it sort of continues to live on in that way, so that’s always really motivating and inspiring for us.”

Before Your Eyes

This motivation fueled the ambitions of Parkes, Lewin, and the other core members of the Before Your Eyes team to form their own studio after shipping the game. The team’s goal: create smaller games that emphasize narrative.

“Oli and I have always loved narrative games and [are] really just excited by the potential for games as the next narrative medium,” says Parkes. “I think that it’s just about being so surprised by the success of [Before Your Eyes] and seeing that there is an audience that likes these shorter, focused narrative experiences and that being something that we’ve always really loved and believed in. And so it’s like, ‘Oh, we have this shot to do that again’ and potentially build a studio around delivering those things.”

Thus, Nice Dream was born. The Los Angeles-based indie studio includes Bela Messex (lead designer/lead programmer), Richard Beare (lead engineer), Dillon Terry (audio lead, composer, designer), and Elisa Marchesi (3D artist). As the team explored its debut project, it held regular pitch meetings, tossing out ideas, arguing about what to do next, and creating prototypes. One thing everyone agreed on, though, was to pursue eye-tracking again. While creating Before Your Eyes, the team dreamed up other creative uses for the technology that ultimately didn’t fit the game’s design or story. Before Your Eyes still managed to use blinking and closing your eyes to create magical moments in a more metaphorical sense. But Parkes points out that fiction has long portrayed controlling something with your face as a way to trigger power and create genuine magic.

“If you watch Eleven in Stranger Things or different characters with psychic abilities, often they’ll be blinking, or they’ll be closing their eyes and doing things like that, and this kind of literalizes that and makes you really feel like you’re doing something magic,” says Parkes. “So I think that was kind of our initial thing that we were creating prototypes around, like ‘Okay, what if this is a psychic kid story?’”

Goodnight Universe

Birth of a Premise

Around this time, Messex had his first child, a girl named Io. For the next few months, Messex would bring Io to the office, and Parkes says her presence began to influence the team. Originally, Nice Dream played with the idea of opening its game with players controlling the psychic character as a baby before the character presumably matured. But as the team prototyped the concept of a psychic-powered infant while spending time with Io regularly, it became increasingly delighted by the infant perspective, and the narrative and mechanical potential began to click.

Lewin also believes that a baby protagonist fits how video games usually handle progression. Babies, like most video game characters, develop rapidly and acquire specific, crucial skills as they experience life. Both are also dropped into a world with no knowledge of how it works, meeting strangers they rely on to learn and survive, and picking up much of what they know simply by poking at things and seeing what happens.

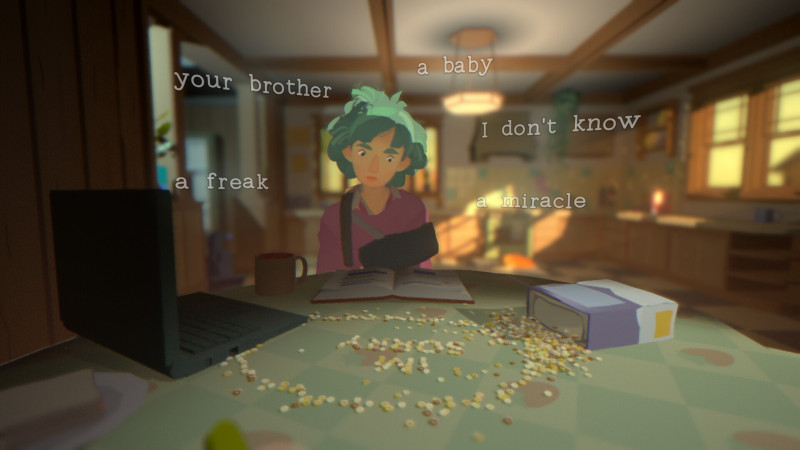

With that, Nice Dream had its protagonist for Goodnight Universe. The team used Io for reference, with Terry recording a large bank of baby noises while playing with her, which players will hear in-game. As for the story, Goodnight Universe stars Isaac, a six-month-old baby born with psychic powers and a heightened intelligence and awareness of his abilities and the world around him. He’s the latest addition to a family whose members, though loving, have become emotionally detached from one another. The family is unaware of Isaac’s powers, and despite his powerful mind, he’s still limited by his infantile inability to communicate verbally. The family’s troubles also lead him to realize that he must keep his powers a secret.

“[Isaac] then realizes that it’s very important to his mother especially that he be a normal baby because he was born prematurely, and they had all these health scares when he was first born,” says Parkes. “And so he kind of takes the lesson that he needs to keep his powers and his differences secret from his family. And his decision to hide himself is sort of mirrored in what he’s learning about each of the family members and the ways that they’re hiding themselves from each other.”

To this end, Isaac becomes a secret helper, observing his family’s issues and using his powers to ease their burdens. His abilities include moving objects with telekinesis and using telepathy to read family members’ minds for insight into their thoughts and feelings. Nice Dream says additional abilities will become available as the game progresses but won’t detail them beyond confirming that you’ll use your face to activate them. Parkes describes using blinking for smaller interactions, such as an early sequence where Isaac discovers his powers while watching a kids’ show he loathes. By blinking, he can change the channel to something he prefers (think of that scene in X2 with the mutant kid watching TV that we all vividly remember).

Making Faces

An expanded version of Before Your Eyes’ blinking tech will allow a broader range of facial inputs to activate your powers. The team is still working out exactly how this feature will work, but it is exploring mouth shapes, such as smiling and frowning, along with other gestures.

“We did kind of want to use all parts of the buffalo on this one,” says Parkes. “I felt like with Before Your Eyes, it was very much like, ‘Okay, the tech can do a lot more than just blink’…So we are trying to have fun with the camera. That’s part of the fun for us is just being like, ‘Okay, cool, what else can this weird controller do for us?’”

Like Before Your Eyes, Goodnight Universe can be played with traditional controls. Nice Dream noticed how many Before Your Eyes players enjoyed playing with traditional controls instead of the webcam, and it says it’s designing Goodnight Universe to be a wholly enjoyable game whether you’re interacting with your face or your controller. The team says the face-tracking isn’t as intrinsically tied to Goodnight Universe’s storytelling and themes as it was in Before Your Eyes, so it feels more like an optional play mode this time.

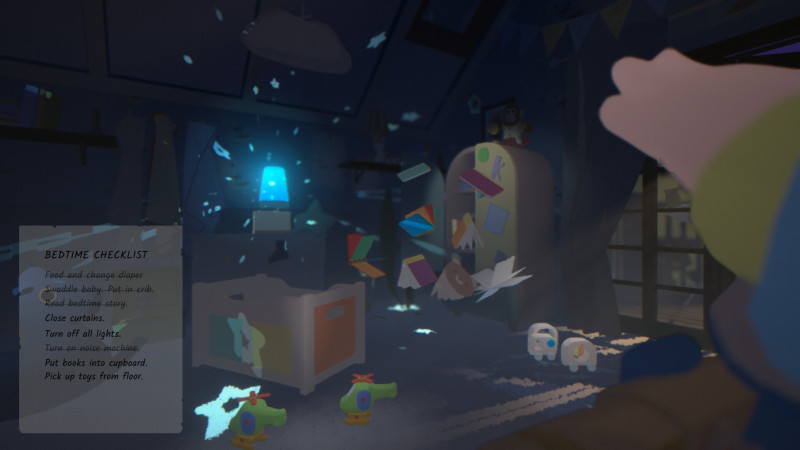

Nice Dream also describes Goodnight Universe as more mechanically dense, featuring more traditional puzzle-solving. For example, players use their powers to complete a checklist of creative tasks inspired by titles like Untitled Goose Game. For other sequences, the team studied on-rails shooters and hints at gameplay moments designed along similar lines. “There may be a motorized crib,” teases Lewin. But between these psychic-powered tasks, Lewin says there are segments where you’ll experience the humble reality of simply being a baby.

If you’re still emotionally recovering from Before Your Eyes, Nice Dream describes Goodnight Universe as a lighter, wackier adventure by comparison. “We didn’t want to just try to break everyone’s hearts again,” says Parkes. That doesn’t mean the story won’t have heavy or heartfelt moments, but given that Isaac’s abduction by a shady government agency is the central plot point, it’s a more comedic, though still tonally balanced, adventure.

Goodnight Universe will be longer than Before Your Eyes but similarly brief overall, with Nice Dream targeting a roughly two-to-four-hour experience (though this is still being determined). Parkes says he prefers making an experience that can be finished in one sitting after realizing that many players who tried Before Your Eyes finished it. Parkes is also an avid gamer, and with so many great games releasing all the time, he says he gravitates toward shorter experiences so he can enjoy more of them.

Goodnight Universe also features narrative branching based on decision-making moments. While the game isn’t about creating dramatic story differences, expect your actions to have a ripple effect on events. Nice Dream says the ending, while still a focused conclusion, can vary based on your choices, though the extent of that variation is unclear and still evolving.

Growing Up

As I spoke with Parkes and Lewin, their excitement for Goodnight Universe was palpable. Besides the inherent joy (and anxiety) of discussing the game in depth for the first time, it’s clear that Before Your Eyes’ success has galvanized the team to create something bigger and bolder while still adhering to the design philosophies that made the game work.

Lewin says the team was worried players wouldn’t understand or connect with Before Your Eyes’ unique premise and mechanics. But after its positive reception, Nice Dream now feels emboldened to get wilder in Goodnight Universe without fearing that people won’t “get it.” Parkes adds that the team feels calmer about what it can achieve now that it’s on the other side of Before Your Eyes. While the team does feel the inherent pressure of making a follow-up (even a spiritual one, in this case), it’s now starting from experience.

Goodnight Universe is off to a good start; it’s one of the seven games currently being showcased as part of the Tribeca Film Festival’s annual games selection. On top of that, the game has an adorable unofficial mascot in Io, who is now a toddler, so that’s pretty cool.

Before Your Eyes floored us with its originality and writing, and we’re excited to see how Goodnight Universe matures over the coming months.