AI is coming to institutional investing. A JP Morgan survey shows that 61% of traders see artificial intelligence as the most influential technology in their industry in the coming years – far outdistancing other choices, such as blockchain-based trading or quantum computing. For many, though, AI is simply a…

Lollipop Chainsaw RePop Launches This September, Remastered Features Revealed

Developer-publisher Dragami Games has revealed that Lollipop Chainsaw RePop, its remaster of the 2012 zombie action game, will launch September 25 on PlayStation, Xbox, Switch, and PC. Dragami revealed the release date in RePop’s first trailer, which shows off some of the remastered gameplay and the characters players will meet in the game. Lollipop Chainsaw was a collaboration between Goichi “Suda51” Suda (No More Heroes, Killer7) and Guardians of the Galaxy director and now DC film head James Gunn. At the time of Lollipop Chainsaw’s release, Gunn was known primarily for directing the films Slither and Super, and for writing the two live-action Scooby-Doo films and Zack Snyder’s Dawn of the Dead remake. We spoke with Suda51 recently about his career and whether or not he keeps up with James Gunn.

Alongside the trailer, Dragami has also released various details about the remaster of Lollipop Chainsaw, including word that PlayStation 5, Xbox Series X/S, and PC players can enjoy the game at 4K resolution and 60 FPS (the PlayStation 4, Xbox One, and Switch versions support 1080p resolution and 30 FPS). Dragami says RePop also has shorter load times, improved camera and keystroke response, increased attack speed, and faster movement speed.

In addition, the Chainsaw Blaster now has “auto lock on,” an auto-fire mode, and an increased maximum ammunition count, while combos are now usable from the start of the game. Nick Roulette and the minigames have also received some changes, and a new auto-QTE feature is now on by default.

Check it all out for yourself in the Lollipop Chainsaw RePop gameplay trailer below:

[embedded content]

Lollipop Chainsaw RePop will be available on September 25 for PlayStation 5, PlayStation 4, Xbox Series X/S, Xbox One, Switch, and PC.

For more, read Game Informer’s original Lollipop Chainsaw review here.

Are you going to be playing Lollipop Chainsaw RePop this September? Let us know in the comments below!

The Legend Of Zelda: Majora’s Mask Part 18 | Super Replay

After The Legend of Zelda: Ocarina of Time reinvented the series in 3D and became its new gold standard, Nintendo followed up with a surreal sequel in Majora’s Mask. Set two months after the events of Ocarina, the game sees Link transported to an alternate version of Hyrule called Termina, where he must prevent a very angry moon from crashing into the Earth over the course of three constantly repeating days. Majora’s Mask’s unique structure and bizarre tone have earned it legions of passionate defenders and detractors, and one long-time Zelda fan is going to experience it for the first time to see where he lands on that spectrum.

Join Marcus Stewart and Kyle Hilliard today and each Friday on Twitch at 1:00 p.m. CT as they gradually work their way through the entire game until Termina is saved. Archived episodes will be uploaded each Saturday on our second YouTube channel Game Informer Shows, which you can watch both above and by clicking the links below.

Part 1 – Plenty of Time

Part 2 – The Bear

Part 3 – Deku Ball Z

Part 4 – Pig Out

Part 5 – That Was a Bad Choice!

Part 6 – Ray Darmani

Part 7 – Curl and Pound

Part 8 – Almost a Flamethrower

Part 9 – Take Me Higher

Part 10 – Time Juice

Part 11 – The One About Joey

Part 12 – Ugly Country

Part 13 – The Sword is the Chicken Hat

Part 14 – Harvard for Hyrule

Part 15 – Keeping it Pure

Part 16 – Fishy Business

Part 17 – Eight-Legged Freaks

[embedded content]

If you enjoy our livestreams but haven’t subscribed to our Twitch channel, know that subscribing not only gives you notifications and access to special emotes but also grants you entry to the official Game Informer Discord channel, where our welcoming community members, moderators, and staff gather to talk about games, entertainment, and food, and to organize hangouts! Be sure to also follow our second YouTube channel, Game Informer Shows, to watch other Replay episodes as well as Twitch archives of GI Live and more.

AI in casino games: A whole new world waiting to be dealt

AI is in pretty much everyone’s conversations right now, with people using it (successfully and unsuccessfully) for a vast range of different things. Let’s face it: we’ve got stars in our eyes when it comes to AI right now – but what’s it doing to one…

EU AI legislation sparks controversy over data transparency

The European Union recently introduced the AI Act, a new governance framework compelling organisations to enhance transparency regarding their AI systems’ training data. Should this legislation come into force, it could penetrate the defences that many in Silicon Valley have built against such detailed scrutiny of…

Strategic patch management & proof of concept insights for CISOs – CyberTalk

Augusto Morales is a Technology Lead (Threat Solutions) at Check Point Software Technologies. He is based in Dallas, Texas, and has been working in cyber security since 2006. He holds a PhD and an MSc in Telematics System Engineering from the Technical University of Madrid, Spain, and is also a Senior Member of the IEEE. Further, he is the author of more than 15 research papers focused on mobile services. He holds professional certifications such as CISSP and CCSP, among others.

One of the burdens of CISO leadership is ensuring compliance with endpoint security measures that ultimately minimize risk to an acceptable business level. This task is complex due to the unique nature of each organization’s IT infrastructure. In regulated environments, there is added pressure to implement diligent patching practices to meet compliance standards.

As with any IT process, patch management requires planning, verification, and testing, among other actions. The IT staff must methodically define how to find the right solution based on the system’s internal telemetry, processes, and external requirements. A Proof of Concept (PoC) is a key element in achieving this goal: it demonstrates and verifies the feasibility and effectiveness of a particular solution.

In other words, it involves creating a prototype to show how the proposed measure addresses the specific needs. In the context of patch management, this “prototype” must provide evidence that the whole patching strategy works as expected — before it is fully implemented across the organization. The strategy must also ensure that computer resources are optimized, and software vulnerabilities are mitigated effectively.

Several cyber security vendors provide patch management, but, as with other security capabilities, there is no one-size-fits-all approach. This makes PoCs essential in determining the effectiveness of a patching strategy, which they do by: 1) discovering and patching software assets; 2) identifying vulnerabilities and evaluating their impact; and 3) generating reports for compliance and auditing.

This article aims to provide insights into developing a strategic patch management methodology by outlining criteria for PoCs.

But first, a brief overview of why I am talking about patch management…

Why patch management

Patch management is a critical process for maintaining the security of computer systems. It involves the application of functional updates and security fixes provided by software manufacturers to remedy identified vulnerabilities in their products. These vulnerabilities can be exploited by cyber criminals to infiltrate systems, steal data, or take systems hostage.

Therefore, patch management is essential to prevent attacks and protect the integrity and confidentiality of all users’ information. The data speaks for itself:

- There are an average of 1900 new CVEs (Common Vulnerabilities and Exposures) each month.

- 4 out of 5 cyber attacks are caused by software quality issues.

- 50% of vulnerabilities are exploited within 3 weeks after the corresponding patch has been released.

- On average, it takes an organization 120 days to remediate a vulnerability.

Outdated systems are easy targets for cyber attacks, as criminals can readily exploit known vulnerabilities thanks to extensive technical literature and even Proof-of-Concept exploits. Furthermore, successful attacks can have repercussions beyond the compromised system, affecting entire networks and even spreading to other business units, users, and third parties.

Practical challenges with PoC patch management

When implementing patch management, organizations face challenges such as a lack of visibility into devices, operating systems, and versions, along with difficulty in correctly identifying the level of risk a given vulnerability poses in the organization’s specific context.

I’ll address some relevant challenges in terms of PoCs below:

1) Active monitoring: PoCs must establish criteria for quickly identifying vulnerabilities based on standardized CVEs and report those prone to easy exploitation based on up-to-date cyber intelligence.

2) Prioritization: Depending on the scope of the IT system (e.g. remote workers’ laptops or stationary PCs), the attack surface created by a vulnerability may be hard to recognize due to the complexity of internal software deployed on servers, end-user computers, and systems exposed to the internet. Also, it is sometimes impractical to patch a wide range of applications with the same urgency, since doing so causes bandwidth consumption spikes and, in case of errors, triggers alert fatigue for cyber security personnel. Therefore, other criteria are needed to identify key business applications and to patch them quickly and correctly. This key detail has been overlooked by some companies in the past, with catastrophic consequences.

3) Time: To effectively apply a patch, it must be identified, verified, and checked for quality. This is why patching often stretches beyond the 120-day average, as organizations must balance business continuity against the risk of a cyber attack. The PoC process must include ways to collect consistent and accurate telemetry, and to apply compensating security controls in case the patch process fails or cannot be completely rolled out because of software/OS incompatibility, performance degradation, or conflicts with existing endpoint controls (e.g. EDR/antimalware). Examples of these compensating controls include full or partial system isolation, process/socket termination, and applying or suggesting security exclusions.

4) Vendor coordination: PoCs must verify that software updates do not introduce new vulnerabilities, which has happened in the past. For example, CVE-2021-30551 affected the Chrome browser, and the fix inadvertently opened up another zero-day vulnerability (CVE-2021-30554) that was exploited in the wild.

Another similar example involves Apple iOS devices and CVE-2021-1835, a vulnerability that re-introduced previously fixed issues by allowing unauthorized user access to sensitive data without the need for any sophisticated software interaction. In this context, a PoC process must verify the ability to enforce a defense-in-depth approach, for example by applying automatic anti-exploitation controls.
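The prioritization challenge described above (not every application can be patched with the same urgency, so key business systems need other criteria) can be illustrated with a small scoring sketch. Everything here is an assumption for illustration: the `Finding` fields, the `priority` multipliers, and the `patch_waves` batching are hypothetical choices, not the author’s method or any vendor’s algorithm, and the CVE IDs are placeholders rather than real data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Finding:
    """One open vulnerability on one asset (hypothetical schema)."""
    cve_id: str
    asset: str
    cvss: float              # CVSS base score, 0.0 to 10.0
    known_exploited: bool    # flagged as exploited by current threat intelligence
    internet_exposed: bool   # asset is reachable from the internet
    business_critical: bool  # asset hosts a key business application


def priority(f: Finding) -> float:
    """Heuristic priority score; higher means patch sooner.

    The multipliers are illustrative assumptions, not an industry standard.
    """
    score = f.cvss
    if f.known_exploited:
        score *= 2.0
    if f.internet_exposed:
        score *= 1.5
    if f.business_critical:
        score *= 1.5
    return score


def patch_waves(findings: list[Finding], wave_size: int) -> list[list[Finding]]:
    """Rank findings by priority and split them into fixed-size waves,
    so patches roll out in batches rather than all at once."""
    ranked = sorted(findings, key=priority, reverse=True)
    return [ranked[i:i + wave_size] for i in range(0, len(ranked), wave_size)]


if __name__ == "__main__":
    # Placeholder IDs and scores, not real CVE data.
    findings = [
        Finding("CVE-A", "hr-laptop-17", 9.8, False, False, False),
        Finding("CVE-B", "web-gateway", 7.5, True, True, True),
        Finding("CVE-C", "build-server", 5.3, False, False, True),
    ]
    for n, wave in enumerate(patch_waves(findings, wave_size=2), start=1):
        print(n, [f.cve_id for f in wave])
    # 1 ['CVE-B', 'CVE-A']
    # 2 ['CVE-C']
```

The multiplicative boosts let a medium-scored flaw on an exploited, internet-facing business system outrank a higher raw score on an isolated laptop, and splitting the ranked list into waves is one simple way to avoid the bandwidth spikes mentioned above. A real PoC would calibrate these weights against its own telemetry.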

Improving ROI via consolidation – The proof is in the pudding?

In the process of consolidating security solutions, security posture and patch management are under continuous analysis by internal experts. Consolidation aims to increase the return on investment (ROI).

That said, there are technical and organizational challenges that limit the implementation of a patch and vulnerability management strategy under this framework, especially for remote workers. This is because running different solutions on laptops, such as antimalware, EDR, and vulnerability scanners, requires additional memory and CPU resources that are not always available. The same premise applies to servers, where workloads can vary and any unexpected increase in resource usage or service latency can affect business operations. Finally, software incompatibility, together with the use of legacy systems, can severely limit any consolidation effort.

Based on the arguments above, consolidation can only be considered feasible once it has been demonstrated through a comprehensive PoC. The PoC process should validate consolidation via a single software component (an endpoint agent) and a single management platform. It should help cyber security practitioners quickly answer common questions, such as:

- How many critical vulnerabilities exist in the environment? What’s the breakdown?

- Which CVEs are the most common and what are their details?

- What is the status of a specific critical CVE?

- How is the system performing? What can be improved, and how?

- How does threat prevention work in tandem with other security controls? Is containment possible?

- What happens if patching fails?
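The first few questions in this list are essentially aggregations over a vulnerability inventory. Below is a minimal sketch, assuming findings have already been exported from whatever agent or scanner the PoC uses into simple records; the record fields and the functions `summarize` and `cve_status` are hypothetical. The severity bands follow the CVSS v3.x qualitative rating scale (Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0).

```python
from __future__ import annotations

from collections import Counter


def severity(cvss: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if cvss == 0.0:
        return "None"
    if cvss < 4.0:
        return "Low"
    if cvss < 7.0:
        return "Medium"
    if cvss < 9.0:
        return "High"
    return "Critical"


def summarize(records: list[dict]) -> dict:
    """Count open (unpatched) findings by severity and list the most
    frequent CVE IDs. Each record is a hypothetical dict with keys
    'asset', 'cve_id', 'cvss', and 'patched'."""
    open_records = [r for r in records if not r["patched"]]
    by_severity = Counter(severity(r["cvss"]) for r in open_records)
    return {
        "critical_open": by_severity["Critical"],  # Counter returns 0 if absent
        "breakdown": dict(by_severity),
        "most_common_cves": Counter(r["cve_id"] for r in open_records).most_common(3),
    }


def cve_status(records: list[dict], cve_id: str) -> dict:
    """Report how many assets are affected by one CVE and how many are patched."""
    hits = [r for r in records if r["cve_id"] == cve_id]
    patched = sum(1 for r in hits if r["patched"])
    return {"affected_assets": len(hits), "patched": patched, "open": len(hits) - patched}


if __name__ == "__main__":
    # Placeholder inventory; CVE IDs and scores are illustrative only.
    records = [
        {"asset": "laptop-01", "cve_id": "CVE-X", "cvss": 9.8, "patched": False},
        {"asset": "laptop-02", "cve_id": "CVE-X", "cvss": 9.8, "patched": True},
        {"asset": "srv-01", "cve_id": "CVE-Y", "cvss": 6.5, "patched": False},
        {"asset": "srv-02", "cve_id": "CVE-X", "cvss": 9.8, "patched": False},
    ]
    print(summarize(records))
    # {'critical_open': 2, 'breakdown': {'Critical': 2, 'Medium': 1}, 'most_common_cves': [('CVE-X', 2), ('CVE-Y', 1)]}
    print(cve_status(records, "CVE-X"))
    # {'affected_assets': 3, 'patched': 1, 'open': 2}
```

Questions about performance, containment, and failed patches depend on agent telemetry and are outside the scope of this sketch.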

Failure in patch management can be catastrophic, even if just a small percentage fail. The PoC process must demonstrate emergency mitigation strategies in case a patch cannot be rolled out or assets are already compromised.

Managing this “mitigation” could limit the ROI, since extra incident response resources may be needed, which can mean more time, personnel, and downtime. So the PoC should demonstrate that the overall patch management process maintains a level of cyber tolerance that is acceptable given the internal business processes, the applicable regulations, and the economic variables that keep the organization afloat.

Check Point Software Technologies offers Harmony Endpoint, a single agent that strengthens patch management capabilities and hence minimizes risks to acceptable levels. It also provides endpoint protection with advanced EPP, DLP, and XDR capabilities in a single software component, ensuring that organizations are comprehensively protected from cyber attacks while simplifying security operations and reducing both costs and effort.

The Future of Cybersecurity: AI, Automation, and the Human Factor

In the past decade, along with the explosive growth of information technology, the dark reality of cybersecurity threats has also evolved dramatically. Cyberattacks, once driven primarily by mischievous hackers seeking notoriety or financial gain, have become far more sophisticated and targeted. From state-sponsored espionage to corporate…

The Friday Roundup – Resolve VFX Tips & Using Markers

The #1 PROBLEM with VFX – DaVinci Resolve Fusion Tips

Regular readers will know that I usually include something each week from Casey Faris somehow related to the subject of DaVinci Resolve. What you may have also noticed is that over the past few months Casey…

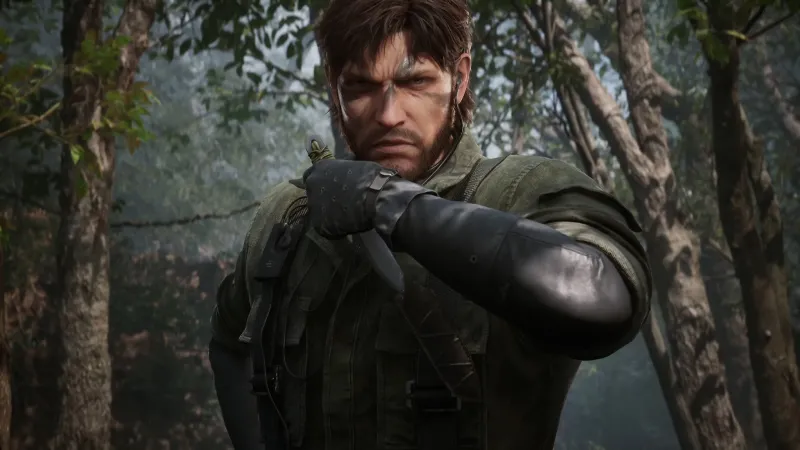

Latest Metal Gear Solid Delta: Snake Eater Trailer Shows All Gameplay

The 2024 Summer Xbox Showcase offered our first substantial look at gameplay from the remake of Metal Gear Solid 3: Snake Eater (renamed Metal Gear Solid Delta: Snake Eater), and the game looks great. The footage thankfully didn’t spoil any major story beats for Metal Gear Solid 3 newcomers, but we saw lots of sneaking, eating, and CQC.

[embedded content]

Other details, like a release date and whether series creator Hideo Kojima is involved in any way (unlikely), are still unknown. But we’re still excited to embark on the Virtuous Mission again, hopefully soon.

Playground Games’ Fable Gets 2025 Release Window In First Gameplay Trailer

Fable will launch sometime next year, developer Playground Games has revealed. It did so during today’s Xbox Games Showcase with a new trailer that features quick snippets of gameplay starring our heroine.

In the trailer, we see our hero waltzing through a large medieval town while a narrator discusses an old threat returning to the world. Our hero wants to save Albion, and to do so, she needs to enlist the help of Humphry, who narrates the trailer.

While there appears to be gameplay in the trailer, there’s no UI and little obvious combat; it’s mostly just our protagonist walking and running through various fantastical locations. We do see some glimpses of combat, but they look cinematic, so it’s hard to tell whether it’s actual gameplay.

Check it out for yourself in the Fable gameplay trailer below:

[embedded content]

“What does it mean to be a hero,” the trailer’s description reads. “Humphry, once one of the greatest, will be forced out of retirement when a mysterious figure from his past threatens Albion’s very existence.”

Microsoft announced Fable’s return in 2020 with a reveal trailer from Forza Horizon developer Playground Games. We then got our next look at the game last year with a more narrative-focused trailer starring Richard Ayoade, and today’s new trailer is our first look at the game since then.

Fable launches on Xbox Series X/S (and presumably PC) in 2025.

What do you think of this new trailer? Let us know in the comments below!