Charles Fisher, Ph.D., is the CEO and Founder of Unlearn, a platform harnessing AI to tackle some of the biggest bottlenecks in clinical development: long trial timelines, high costs, and uncertain outcomes. Their novel AI models analyze vast quantities of patient-level data to forecast patients’ health…

AI Learns from AI: The Emergence of Social Learning Among Large Language Models

Since OpenAI unveiled ChatGPT in late 2022, the role of foundational large language models (LLMs) has become increasingly prominent in artificial intelligence (AI), particularly in natural language processing (NLP). These LLMs, designed to process and generate human-like text, learn from an extensive array of texts from…

Princess Peach Showtime Review, Contra: Operation Galuga Impressions | All Things Nintendo

This week on All Things Nintendo, we give our impressions of several recent releases. The headliner of this episode is Kyle Hilliard’s review of Princess Peach: Showtime, but Brian also has impressions of Contra: Operation Galuga and qomp2. And, of course, we’ll catch up on all the latest news out of the world of Nintendo.


If you’d like to follow Brian on social media, you can do so on his Instagram/Threads @BrianPShea or Twitter @BrianPShea. You can follow Kyle on Twitter: @KyleMHilliard and BlueSky: @KyleHilliard.

The All Things Nintendo podcast is a weekly show where we celebrate, discuss, and break down all the latest games, news, and announcements from the industry’s most recognizable name. Each week, Brian is joined by different guests to talk about what’s happening in the world of Nintendo. Along the way, they’ll share personal stories, uncover hidden gems in the eShop, and even look back on the classics we all grew up with. A new episode hits every Friday!

Be sure to subscribe to All Things Nintendo on your favorite podcast platform. The show is available on Apple Podcasts, Spotify, Google Podcasts, and YouTube.

00:00:00 – Introduction

00:01:25 – Star Wars: Battlefront Classic Collection Woes

00:08:19 – Tears of the Kingdom Figma Announced

00:16:30 – Tears of the Kingdom and Mario Wonder GDC Panels

00:27:14 – Metal Gear Solid Master Collection Vol. 2 Update from Konami

00:34:33 – GameInformer.com Reader Voted Greatest Game of All Time Update

00:39:04 – Princess Peach: Showtime! Review

01:02:29 – Contra: Operation Galuga Impressions

01:12:22 – qomp2 Impressions

If you’d like to get in touch with the All Things Nintendo podcast, you can email AllThingsNintendo@GameInformer.com, message Brian on Instagram (@BrianPShea), or join the official Game Informer Discord server. You can do that by linking your Discord account to your Twitch account and subscribing to the Game Informer Twitch channel. From there, find the All Things Nintendo channel under “Community Spaces.”

For Game Informer’s other podcast, be sure to check out The Game Informer Show with hosts Alex Van Aken, Marcus Stewart, and Kyle Hilliard, which covers the weekly happenings of the video game industry!

Artificial Intelligence in Broadcasting Industry: BBC Looking to Build Its Own AI Model – Technology Org

The BBC, Britain’s national broadcaster, is exploring the possibility of constructing and training its own artificial intelligence model…

Want to prevent a 7-figure disaster? Read these 8 AI books – CyberTalk

EXECUTIVE SUMMARY:

As artificial intelligence (AI) continues to advance rapidly, the risks posed by malicious exploitation or misuse of AI systems loom large. For cyber security leaders, getting ahead of AI-based risks has become mission-critical. No one wants to contend with a potentially disastrous situation involving AI.

Whether it’s preventing the misuse of generative AI models, like ChatGPT, defending against adversarial machine learning attacks or understanding how to leverage AI-based cyber security tools, cyber security professionals should make AI literacy a top priority.

One of the best ways to build knowledge? Read AI books written by top experts in the field. In this article, discover eight essential AI books that should be on the reading list of any cyber security professional who aims to prevent a 7-figure AI disaster.

8 must-read AI books

1. The Alignment Problem, by Brian Christian. In this award-winning, captivating and clear treatise, the author unpacks the immense challenge that is ensuring alignment between advanced AI systems and human ethics. Mike Krieger, cofounder of Instagram, says “This is the book on artificial intelligence we need right now.”

2. You Look Like a Thing and I Love You, by Janelle Shane. This book came out in 2019, before the release of now-ubiquitous chatbots. However, it’s a smart and funny introduction to how AI works and how to get the most out of it. If you’re looking for an easy on-ramp into the subject, this is it.

The author also maintains a blog, AI Weirdness, which may be of interest to the time-constrained.

3. Top Questions That CISOs Should be Asking About AI (and Answers), by CyberTalk.org. Although this is an eBook, we found it worth including, as it provides evidence-backed strategies and tactics for elevating your organization’s use of AI (and ensuring its security).

4. Artificial Intelligence for Cybersecurity, as edited by Mark Stamp, Corrado Aaron Visaggio, Francesco Mercaldo and Fabio Di Troia. This technical manual describes how AI techniques can be applied to enhance anomaly detection, threat intelligence and adversarial machine learning capabilities.

5. Hands-on Artificial Intelligence for Cybersecurity, by Alessandro Parisi. In this handbook, you’ll see how you can infuse AI capabilities into smart cyber security mechanisms. After reading it, you will be able to establish a strong cyber security posture using AI.

6. AI for Defense and Intelligence, by Dr. Patrick T. Biltgen. This book tackles scaling AI in the cloud, customizing AI for unique mission applications and the issues endemic to the defense and intelligence sector. Given the government agency focus, the piece is perfect for those who support national security.

7. Life 3.0: Being Human in the Age of Artificial Intelligence, by Swedish-American physicist Max Tegmark. This book prompts readers to immerse themselves in the most important conversations of our times. Readers tend to find this piece both engaging and empowering.

8. AI, Machine Learning and Deep Learning, edited by Fei Hu and Xiali Hei. Since these concepts are all somewhat new to those in the cyber security field, this book aims to provide a comprehensive picture of the challenges professionals face today and their solutions. Explore how to overcome AI attacks with AI-based tools.

Further thoughts

Build policies and programs to mitigate AI-based threats before they potentially cause a 7-figure – or even larger – disaster.

In cyber security, knowledge is a powerful line of defense. Equip yourself with new knowledge (via these essential AI books) as you work to protect your organization from AI risks.

For more insights into AI books and other cyber security must-reads, please see CyberTalk.org’s past coverage. Lastly, subscribe to the CyberTalk.org newsletter for timely insights, cutting-edge analyses and more, delivered straight to your inbox each week.

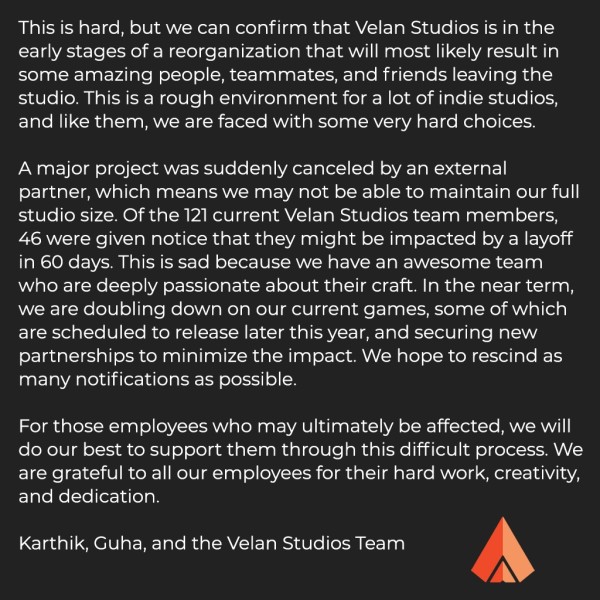

Knockout City Developer Velan Studios May Suffer Significant Layoffs

Velan Studios, the team behind Knockout City, has announced it is entering a reorganization that will likely result in layoffs.

In a post to X (formerly known as Twitter), the studio’s co-founders Guha and Karthik Bala revealed the team was working on a big project that an outside partner suddenly canceled. As a result, it might not be able to maintain its current team size; 46 out of 121 employees were given notice they may be laid off in the next 60 days.

“This is a rough environment for a lot of indie studios, and like them, we are faced with some very hard choices,” Velan writes in the post. Should the worst come, Velan states it will do its best to support affected staff members.

Velan Studios developed 2020’s Mario Kart Live: Home Circuit, 2021’s competitive multiplayer dodgeball game Knockout City, and 2023’s Hot Wheels Rift Rally. Knockout City, an EA Originals title, is perhaps the studio’s best-known game; it earned a generally positive reception and was supported through multiple seasons. Velan later transformed it into a free-to-play experience after ending its publishing relationship with EA. Unfortunately, the game shut down roughly a year after this transition and only two years after launch.

These potential job cuts join a string of other disheartening 2024 layoffs, which now total more than 8,000 in just the first two months of the year. EA laid off roughly 670 employees across all departments, resulting in the cancellation of Respawn’s Star Wars FPS game. PlayStation laid off 900 employees across Insomniac, Naughty Dog, Guerrilla, and more, closing down London Studio in the process. The day before, Until Dawn developer Supermassive Games announced it had laid off 90 employees.

At the end of January, we learned Embracer Group had canceled a new Deus Ex game in development at Eidos-Montréal and laid off 97 employees in the process. Also in January, Destroy All Humans remake developer Black Forest Games reportedly laid off 50 employees and Microsoft announced it was laying off 1,900 employees across its Xbox, Activision Blizzard, and ZeniMax teams, as well. Outriders studio People Can Fly laid off more than 30 employees in January, and League of Legends company Riot Games laid off 530 employees.

Lords of the Fallen publisher CI Games laid off 10 percent of its staff, Unity will lay off 1,800 people by the end of March, and Twitch laid off 500 employees.

We also learned that Discord had laid off 170 employees, that layoffs happened at PTW, a support studio that’s worked with companies like Blizzard and Capcom, and that SteamWorld Build company Thunderful Group let go of roughly 100 people. Dead by Daylight developer Behaviour Interactive also reportedly laid off 45 people.

The Legend Of Zelda: Majora’s Mask Part 8 | Super Replay

After The Legend of Zelda: Ocarina of Time reinvented the series in 3D and became its new gold standard, Nintendo followed up with a surreal sequel in Majora’s Mask. Set two months after the events of Ocarina, the game transports Link to an alternate version of Hyrule called Termina, where he must prevent a very angry moon from crashing into the world over the course of three constantly repeating days. Majora’s Mask’s unique structure and bizarre tone have earned it legions of passionate defenders and detractors, and one long-time Zelda fan is going to experience it for the first time to see where he lands on that spectrum.

Join Marcus Stewart and Kyle Hilliard today and each Friday on Twitch at 2 p.m. CT as they gradually work their way through the entire game until Termina is saved. Archived episodes will be uploaded each Saturday to our second YouTube channel, Game Informer Shows; previous parts are listed below.

Part 1 – Plenty of Time

Part 2 – The Bear

Part 3 – Deku Ball Z

Part 4 – Pig Out

Part 5 – That Was a Bad Choice!

Part 6 – Ray Darmani

Part 7 – Curl and Pound


If you enjoy our livestreams but haven’t subscribed to our Twitch channel, know that doing so not only gives you notifications and access to special emotes but also grants you entry to the official Game Informer Discord server, where our welcoming community members, moderators, and staff gather to talk games, entertainment, and food, and to organize hangouts! Be sure to also follow our second YouTube channel, Game Informer Shows, to watch other Replay episodes as well as Twitch archives of GI Live and more.

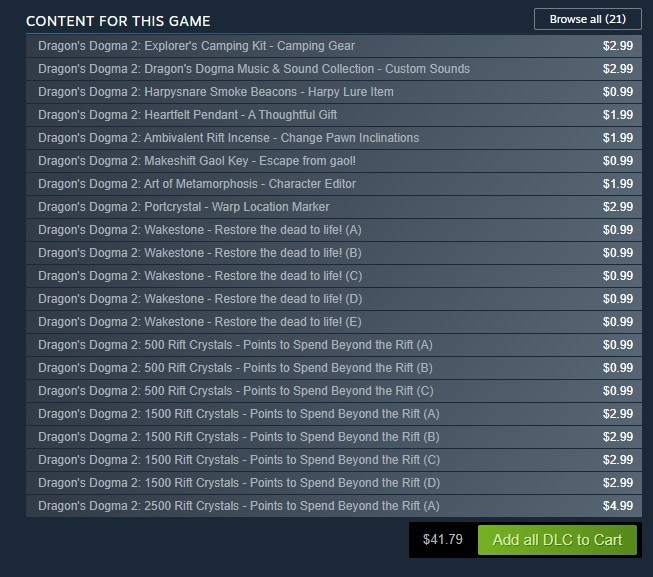

Capcom Addresses Dragon’s Dogma 2 PC Performance And Microtransaction Backlash

Dragon’s Dogma 2 is one of the year’s most anticipated games, and by most accounts, the experience lives up to the hype. The game has earned rave reviews from most critics, including a 9 out of 10 from us. But now that it’s out in the wild, Steam players have bombarded the RPG with negative reviews due to its problematic launch performance and day-one microtransactions.

In terms of performance problems, Steam players have reported issues such as framerate drops, freezes, and crashes. Capcom has already acknowledged these issues in a Steam blog post where it says, “To all those looking forward to this game, we sincerely apologize for any inconvenience,” and adds, “We are investigating/fixing critical problems such as crashes and freezing. We will be addressing crashes and bug fixes starting from those with the highest priority in patches in the near future.”

Regarding the frame rate issues, Capcom writes,

“A large amount of CPU usage is allocated to each character and calculating the impact of their physical presence in various areas. In certain situations where numerous characters appear simultaneously, the CPU usage can be very high and may affect the frame rate. We are aware that in such situations, settings that reduce GPU load may currently have a limited effect; however, we are looking into ways to improve performance in the future.”

The console versions have their own frame rate issues. As writer Jesse Vitelli noted in our review, “However, large-scale battles are where you will see the performance on consoles take a big hit. When I had multiple enemies on screen, and a pawn would cast a big spell, the frame rate would dip tremendously.”

The game’s microtransactions have also drawn much of players’ ire. Beyond Rift Crystals, the game’s currency, the store sells useful exploration gear such as Portcrystals, fast-travel points that you can place at a chosen destination (though using one still requires another item, Ferrystones, which isn’t for sale), and this is what has upset most fans. Capcom is also selling the Art of Metamorphosis, a book that lets players redesign their character, as well as Wakestones, which revive the dead. You can purchase all of these items at once in the “A Boon for Adventurers – New Journey Pack” bundle, which is included in the game’s Deluxe Edition.

Capcom addressed these complaints in the same blog post, pointing out that the following items can be obtained through normal gameplay:

- Art of Metamorphosis – Character Editor

- Ambivalent Rift Incense – Change Pawn Inclinations

- Portcrystal – Warp Location Marker

- Wakestone – Restore the dead to life!

- 500 Rift Crystals / 1500 Rift Crystals / 2500 Rift Crystals – Points to Spend Beyond the Rift

- Makeshift Gaol Key – Escape from gaol!

- Harpysnare Smoke Beacons – Harpy Lure Item

If you want extra quantities of any of those items, you have the choice of paying real money for them instead of getting them the old-fashioned way, so they basically serve as optional convenience skips. While it’s absolutely understandable why players would be annoyed regardless, this is actually quite normal for Capcom titles. The recent Resident Evil and Monster Hunter games, for example, all offer microtransactions of similar scope and have faced comparatively minimal, if any, pushback.

Still, fans are taken aback by their appearance in Dragon’s Dogma 2. Without context for how rare or easy to obtain these items are in the game, some players believe its intentionally challenging or inconvenient design is meant to push players toward these purchases. Others simply cite the age-old argument that full-priced games ($69.99 in Dragon’s Dogma 2’s case) shouldn’t charge additional money for in-game items at all.

Combined with the aforementioned performance issues, disgruntled players have flooded the game with bad reviews to the point that it currently has a “Mostly Negative” Steam rating just hours after launch. Despite this initial chilly reception, it doesn’t appear that Capcom will be altering its monetization plans for now.

Dragon’s Dogma 2 is available now for PlayStation 5, Xbox Series X/S, and PC.

UN passes first global AI resolution

The UN General Assembly has adopted a landmark resolution on AI, aiming to promote the safe and ethical development of AI technologies worldwide. The resolution, co-sponsored by over 120 countries, was adopted unanimously by all 193 UN member states on 21 March. This marks the first…

AI GPTs for PostgreSQL Database: Can They Work?

Artificial intelligence is a key point of debate right now. ChatGPT reached 100 million active users in just its first two months, which has increased focus on AI’s capabilities, especially in database management. The introduction of ChatGPT is considered a major milestone in the Artificial…