
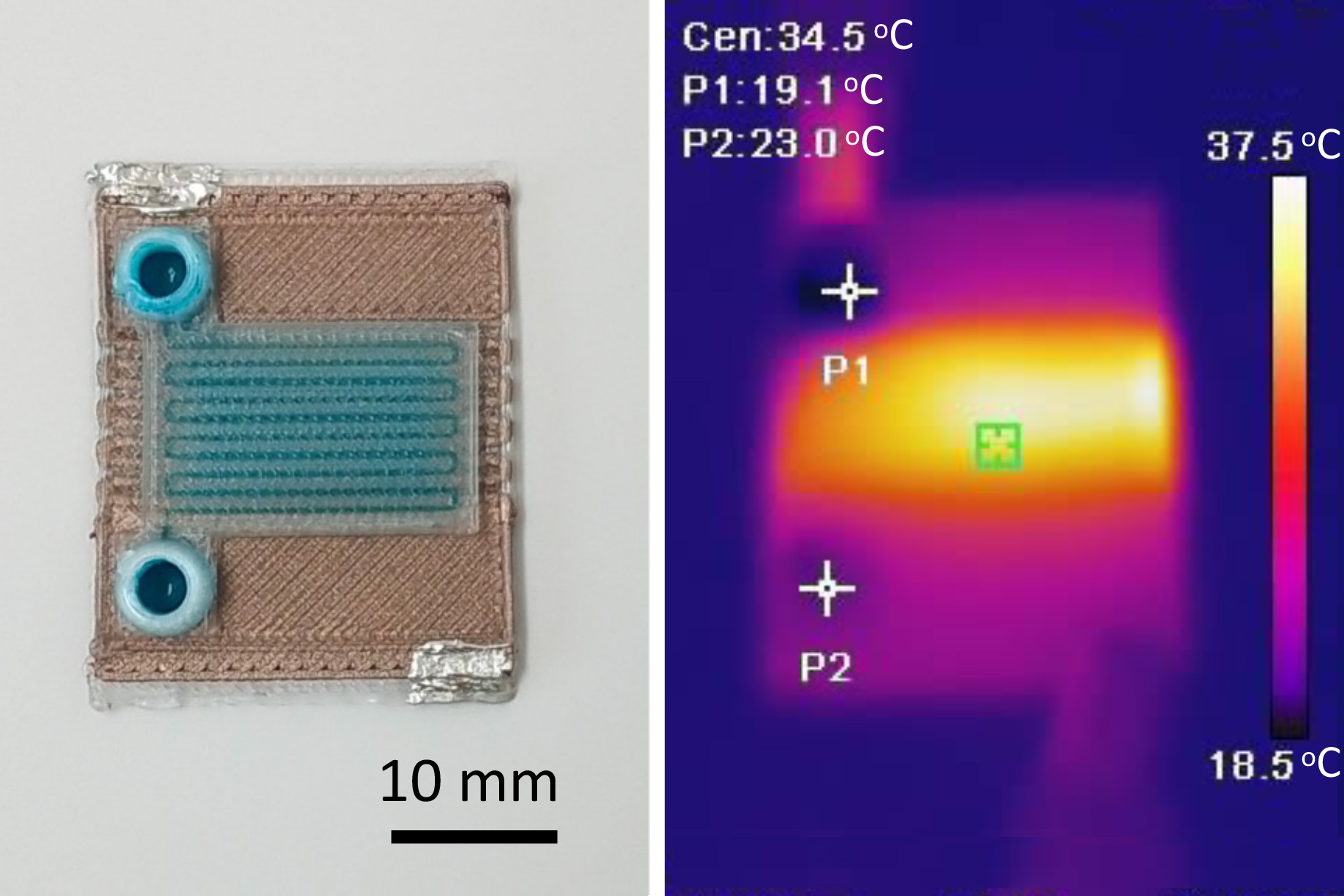

Scientists 3D print self-heating microfluidic devices

MIT researchers have used 3D printing to produce self-heating microfluidic devices, demonstrating a technique which could someday be used to rapidly create cheap, yet accurate, tools to detect a host of diseases.

Microfluidics, miniaturized machines that manipulate fluids and facilitate chemical reactions, can be used to detect disease in tiny samples of blood or fluids. At-home test kits for Covid-19, for example, incorporate a simple type of microfluidic.

But many microfluidic applications require chemical reactions that must be performed at specific temperatures. These more complex microfluidic devices, which are typically manufactured in a clean room, are outfitted with heating elements made from gold or platinum using a complicated and expensive fabrication process that is difficult to scale up.

Instead, the MIT team used multimaterial 3D printing to create self-heating microfluidic devices with built-in heating elements, through a single, inexpensive manufacturing process. They generated devices that can heat fluid to a specific temperature as it flows through microscopic channels inside the tiny machine.

Their technique is customizable, so an engineer could create a microfluidic that heats fluid to a certain temperature or given heating profile within a specific area of the device. The low-cost fabrication process requires about $2 of materials to generate a ready-to-use microfluidic.

The process could be especially useful in creating self-heating microfluidics for remote regions of developing countries where clinicians may not have access to the expensive lab equipment required for many diagnostic procedures.

“Clean rooms in particular, where you would usually make these devices, are incredibly expensive to build and to run. But we can make very capable self-heating microfluidic devices using additive manufacturing, and they can be made a lot faster and cheaper than with these traditional methods. This is really a way to democratize this technology,” says Luis Fernando Velásquez-García, a principal scientist in MIT’s Microsystems Technology Laboratories (MTL) and senior author of a paper describing the fabrication technique.

He is joined on the paper by lead author Jorge Cañada Pérez-Sala, an electrical engineering and computer science graduate student. The research will be presented at the PowerMEMS Conference this month.

An insulator becomes conductive

This new fabrication process utilizes a technique called multimaterial extrusion 3D printing, in which several materials can be squirted through the printer’s many nozzles to build a device layer by layer. The process is monolithic, which means the entire device can be produced in one step on the 3D printer, without the need for any post-assembly.

To create self-heating microfluidics, the researchers used two materials — a biodegradable polymer known as polylactic acid (PLA) that is commonly used in 3D printing, and a modified version of PLA.

The modified PLA has copper nanoparticles mixed into the polymer, which converts this insulating material into an electrical conductor, Velásquez-García explains. When electrical current is fed into a resistor made of this copper-doped PLA, the energy is dissipated as heat.
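The heating principle described here is ordinary resistive (Joule) heating. As a minimal sketch, the two textbook relations involved are the resistance of a uniform rectangular trace, R = ρL/A, and the power it dissipates, P = I²R. The function names and all example numbers below are hypothetical; the article does not report the resistivity of the copper-doped PLA or the geometry of the printed heater.

```python
def trace_resistance(resistivity_ohm_m: float, length_m: float,
                     width_m: float, thickness_m: float) -> float:
    """Resistance of a uniform rectangular conductor: R = rho * L / (w * t)."""
    cross_section_m2 = width_m * thickness_m
    return resistivity_ohm_m * length_m / cross_section_m2


def joule_power(current_a: float, resistance_ohm: float) -> float:
    """Electrical power dissipated as heat in a resistor: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    # Hypothetical values for illustration only; not measurements from the paper.
    r = trace_resistance(resistivity_ohm_m=0.01, length_m=0.02,
                         width_m=1e-3, thickness_m=0.5e-3)
    print(f"R = {r:.1f} ohm, P at 10 mA = {joule_power(0.01, r) * 1e3:.1f} mW")
```

Because P scales with the square of the current, the drive current is a sensitive knob on how much heat the printed resistor delivers.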

“It is amazing when you think about it because the PLA material is a dielectric, but when you put in these nanoparticle impurities, it completely changes the physical properties. This is something we don’t fully understand yet, but it happens and it is repeatable,” he says.

Using a multimaterial 3D printer, the researchers fabricate a heating resistor from the copper-doped PLA and then print the microfluidic device, with microscopic channels through which fluid can flow, directly on top in one printing step. Because the components are made from the same base material, they have similar printing temperatures and are compatible.

Heat dissipated from the resistor will warm fluid flowing through the channels in the microfluidic.

In addition to the resistor and microfluidic, they use the printer to add a thin, continuous layer of PLA that is sandwiched between them. It is especially challenging to manufacture this layer because it must be thin enough so heat can transfer from the resistor to the microfluidic, but not so thin that fluid could leak into the resistor.
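The trade-off for the separating layer can be illustrated with one-dimensional heat conduction through a flat slab, where the thermal resistance is R_th = t / (k·A) and the temperature drop needed to push heat Q through it is ΔT = Q·R_th. This is a simplified sketch: the conductivity, area, and heater output below are assumed values, not figures from the study, and the model says nothing about the leak-tightness limit on the thin side, which is a print-quality constraint.

```python
def slab_thermal_resistance(thickness_m: float, conductivity_w_mk: float,
                            area_m2: float) -> float:
    """1D conduction resistance of a flat layer, in K/W: R_th = t / (k * A)."""
    return thickness_m / (conductivity_w_mk * area_m2)


def temperature_drop(heat_flow_w: float, r_th_k_per_w: float) -> float:
    """Temperature difference across the layer needed to pass heat_flow_w."""
    return heat_flow_w * r_th_k_per_w


if __name__ == "__main__":
    K_ASSUMED = 0.13   # W/(m*K), assumed conductivity of the separating PLA layer
    AREA = 1e-4        # m^2 (1 cm^2), hypothetical heater footprint
    HEAT = 0.1         # W, hypothetical heater output
    for t_um in (400, 200, 100):
        r_th = slab_thermal_resistance(t_um * 1e-6, K_ASSUMED, AREA)
        print(f"{t_um} um layer: R_th = {r_th:.1f} K/W, "
              f"drop = {temperature_drop(HEAT, r_th):.2f} K")
```

Halving the layer thickness halves its thermal resistance, so a thinner layer lets more of the resistor's heat reach the fluid for the same temperature difference.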

The resulting machine is about the size of a U.S. quarter and can be produced in a matter of minutes. Channels about 500 micrometers wide and 400 micrometers tall are threaded through the microfluidic to carry fluid and facilitate chemical reactions.
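For a sense of scale, the reported channel dimensions give a cross-section of 500 µm × 400 µm = 0.2 mm². The sketch below converts a volumetric flow rate into the mean flow speed through such a channel (mean speed = flow rate ÷ cross-sectional area). The 10 µL/min flow rate and the function names are hypothetical; the article does not state the flow rates used.

```python
def channel_area_m2(width_um: float, height_um: float) -> float:
    """Cross-sectional area of a rectangular channel, converting micrometres to metres."""
    return (width_um * 1e-6) * (height_um * 1e-6)


def mean_velocity_m_s(flow_ul_per_min: float, area_m2: float) -> float:
    """Mean flow speed: volumetric flow rate divided by cross-sectional area."""
    flow_m3_s = flow_ul_per_min * 1e-9 / 60.0  # 1 uL = 1e-9 m^3
    return flow_m3_s / area_m2


if __name__ == "__main__":
    area = channel_area_m2(500, 400)   # channel dimensions reported in the article
    v = mean_velocity_m_s(10.0, area)  # 10 uL/min is a hypothetical flow rate
    print(f"area = {area * 1e6:.2f} mm^2, mean velocity = {v * 1e3:.2f} mm/s")
```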

Importantly, the PLA material is translucent, so fluid in the device remains visible. Many processes rely on visualization or the use of light to infer what is happening during chemical reactions, Velásquez-García explains.

Customizable chemical reactors

The researchers used this one-step manufacturing process to generate a prototype that could heat fluid by 4 degrees Celsius as it flowed between the input and the output. This customizable technique could enable them to make devices which would heat fluids in certain patterns or along specific gradients.
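How much heat such a rise requires follows from a steady-flow energy balance: the power absorbed by the fluid is P = ρ·Q·c_p·ΔT, where Q is the volumetric flow rate. The sketch below assumes the sample behaves like water (ρ ≈ 1000 kg/m³, c_p ≈ 4186 J/(kg·K)) and uses a hypothetical flow rate; the 4 °C rise (equivalently 4 K) is the only number taken from the article.

```python
WATER_DENSITY = 1000.0  # kg/m^3, assumed: sample treated as water
WATER_CP = 4186.0       # J/(kg*K), assumed specific heat of water


def heater_power_for_rise(delta_t_k: float, flow_ul_per_min: float,
                          density: float = WATER_DENSITY,
                          cp: float = WATER_CP) -> float:
    """Minimum power (W) to warm a steady stream by delta_t_k, ignoring losses.

    Steady-flow energy balance: P = m_dot * cp * dT, with m_dot = rho * Q.
    """
    flow_m3_s = flow_ul_per_min * 1e-9 / 60.0
    mass_flow_kg_s = density * flow_m3_s
    return mass_flow_kg_s * cp * delta_t_k


def temperature_rise(power_w: float, flow_ul_per_min: float,
                     density: float = WATER_DENSITY,
                     cp: float = WATER_CP) -> float:
    """Inverse relation: outlet-minus-inlet temperature for a given absorbed power."""
    flow_m3_s = flow_ul_per_min * 1e-9 / 60.0
    return power_w / (density * flow_m3_s * cp)


if __name__ == "__main__":
    # 4 K matches the rise reported for the prototype; 10 uL/min is hypothetical.
    p = heater_power_for_rise(4.0, flow_ul_per_min=10.0)
    print(f"~{p * 1e3:.2f} mW must reach the fluid (losses not included)")
```

Real devices also lose heat to the surroundings and into the PLA itself, so the electrical power a heater draws would be higher than this lower bound.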

“You can use these two materials to create chemical reactors that do exactly what you want. We can set up a particular heating profile while still having all the capabilities of the microfluidic,” he says.

However, one limitation is that PLA can only be heated to about 50 degrees Celsius before it starts to degrade. Many chemical reactions, such as those used in polymerase chain reaction (PCR) tests, require temperatures of 90 degrees Celsius or higher. And to precisely control the device's temperature, researchers would need to integrate a third material that enables temperature sensing.

In addition to tackling these limitations in future work, Velásquez-García wants to print magnets directly into the microfluidic device. These magnets could enable chemical reactions that require particles to be sorted or aligned.

At the same time, he and his colleagues are exploring the use of other materials that could reach higher temperatures. They are also studying PLA to better understand why it becomes conductive when certain impurities are added to the polymer.

“If we can understand the mechanism that is related to the electrical conductivity of PLA, that would greatly enhance the capability of these devices, but it is going to be a lot harder to solve than some other engineering problems,” he adds.

“In Japanese culture, it’s often said that beauty lies in simplicity. This sentiment is echoed by the work of Cañada and Velásquez-García. Their proposed monolithically 3D-printed microfluidic systems embody simplicity and beauty, offering a wide array of potential derivations and applications that we foresee in the future,” says Norihisa Miki, a professor of mechanical engineering at Keio University in Tokyo, who was not involved with this work.

“Being able to directly print microfluidic chips with fluidic channels and electrical features at the same time opens up very exciting applications when processing biological samples, such as to amplify biomarkers or to actuate and mix liquids. Also, due to the fact that PLA degrades over time, one can even think of implantable applications where the chips dissolve and resorb over time,” adds Niclas Roxhed, an associate professor at Sweden’s KTH Royal Institute of Technology, who was not involved with this study.

This research was funded, in part, by the Empiriko Corporation and a fellowship from La Caixa Foundation.

MIT group releases white papers on governance of AI

Providing a resource for U.S. policymakers, a committee of MIT leaders and scholars has released a set of policy briefs that outlines a framework for the governance of artificial intelligence. The approach includes extending current regulatory and liability approaches in pursuit of a practical way to oversee AI.

The aim of the papers is to help enhance U.S. leadership in the area of artificial intelligence broadly, while limiting harm that could result from the new technologies and encouraging exploration of how AI deployment could be beneficial to society.

The main policy paper, “A Framework for U.S. AI Governance: Creating a Safe and Thriving AI Sector,” suggests AI tools can often be regulated by existing U.S. government entities that already oversee the relevant domains. The recommendations also underscore the importance of identifying the purpose of AI tools, which would enable regulations to fit those applications.

“As a country we’re already regulating a lot of relatively high-risk things and providing governance there,” says Dan Huttenlocher, dean of the MIT Schwarzman College of Computing, who helped steer the project, which stemmed from the work of an ad hoc MIT committee. “We’re not saying that’s sufficient, but let’s start with things where human activity is already being regulated, and which society, over time, has decided are high risk. Looking at AI that way is the practical approach.”

“The framework we put together gives a concrete way of thinking about these things,” says Asu Ozdaglar, the deputy dean of academics in the MIT Schwarzman College of Computing and head of MIT’s Department of Electrical Engineering and Computer Science (EECS), who also helped oversee the effort.

The project includes multiple additional policy papers and comes amid heightened interest in AI over the last year as well as considerable new industry investment in the field. The European Union is currently trying to finalize AI regulations using its own approach, one that assigns broad levels of risk to certain types of applications. In that process, general-purpose AI technologies such as language models have become a new sticking point. Any governance effort faces the challenges of regulating both general and specific AI tools, as well as an array of potential problems including misinformation, deepfakes, surveillance, and more.

“We felt it was important for MIT to get involved in this because we have expertise,” says David Goldston, director of the MIT Washington Office. “MIT is one of the leaders in AI research, one of the places where AI first got started. Since we are among those creating technology that is raising these important issues, we feel an obligation to help address them.”

Purpose, intent, and guardrails

The main policy brief outlines how current policy could be extended to cover AI, using existing regulatory agencies and legal liability frameworks where possible. The U.S. has strict licensing laws in the field of medicine, for example. It is already illegal to impersonate a doctor; if AI were to be used to prescribe medicine or make a diagnosis under the guise of being a doctor, it should be clear that this would violate the law just as purely human malfeasance would. As the policy brief notes, this is not just a theoretical approach; autonomous vehicles, which deploy AI systems, are subject to regulation in the same manner as other vehicles.

An important step in making these regulatory and liability regimes work, the policy brief emphasizes, is having AI providers define the purpose and intent of AI applications in advance. Examining new technologies on this basis would then make clear which existing sets of regulations, and regulators, are germane to any given AI tool.

However, it is also the case that AI systems may exist at multiple levels, in what technologists call a “stack” of systems that together deliver a particular service. For example, a general-purpose language model may underlie a specific new tool. In general, the brief notes, the provider of a specific service might be primarily liable for problems with it. However, “when a component system of a stack does not perform as promised, it may be reasonable for the provider of that component to share responsibility,” as the first brief states. The builders of general-purpose tools should thus also be accountable should their technologies be implicated in specific problems.

“That makes governance more challenging to think about, but the foundation models should not be completely left out of consideration,” Ozdaglar says. “In a lot of cases, the models are from providers, and you develop an application on top, but they are part of the stack. What is the responsibility there? If systems are not on top of the stack, it doesn’t mean they should not be considered.”

Having AI providers clearly define the purpose and intent of AI tools, and requiring guardrails to prevent misuse, could also help determine the extent to which either companies or end users are accountable for specific problems. The policy brief states that a good regulatory regime should be able to identify what it calls a “fork in the toaster” situation — when an end user could reasonably be held responsible for knowing the problems that misuse of a tool could produce.

Responsive and flexible

While the policy framework involves existing agencies, it includes the addition of some new oversight capacity as well. For one thing, the policy brief calls for advances in auditing of new AI tools, which could move forward along a variety of paths, whether government-initiated, user-driven, or deriving from legal liability proceedings. There would need to be public standards for auditing, the paper notes, whether established by a nonprofit entity along the lines of the Public Company Accounting Oversight Board (PCAOB), or through a federal entity similar to the National Institute of Standards and Technology (NIST).

And the paper does call for the consideration of creating a new, government-approved “self-regulatory organization” (SRO) agency along the functional lines of FINRA, the government-created Financial Industry Regulatory Authority. Such an agency, focused on AI, could accumulate domain-specific knowledge that would allow it to be responsive and flexible when engaging with a rapidly changing AI industry.

“These things are very complex, the interactions of humans and machines, so you need responsiveness,” says Huttenlocher, who is also the Henry Ellis Warren Professor in Computer Science and Artificial Intelligence and Decision-Making in EECS. “We think that if government considers new agencies, it should really look at this SRO structure. They are not handing over the keys to the store, as it’s still something that’s government-chartered and overseen.”

As the policy papers make clear, there are several additional legal matters that will need addressing in the realm of AI. Copyright and other intellectual property issues related to AI are already the subject of litigation.

And then there are what Ozdaglar calls “human plus” legal issues, where AI has capacities that go beyond what humans are capable of doing. These include things like mass-surveillance tools, and the committee recognizes they may require special legal consideration.

“AI enables things humans cannot do, such as surveillance or fake news at scale, which may need special consideration beyond what is applicable for humans,” Ozdaglar says. “But our starting point still enables you to think about the risks, and then how that risk gets amplified because of the tools.”

The set of policy papers addresses a number of regulatory issues in detail. For instance, one paper, “Labeling AI-Generated Content: Promises, Perils, and Future Directions,” by Chloe Wittenberg, Ziv Epstein, Adam J. Berinsky, and David G. Rand, builds on prior research experiments about media and audience engagement to assess specific approaches for denoting AI-produced material. Another paper, “Large Language Models,” by Yoon Kim, Jacob Andreas, and Dylan Hadfield-Menell, examines general-purpose language-based AI innovations.

“Part of doing this properly”

As the policy briefs make clear, another element of effective government engagement on the subject involves encouraging more research about how to make AI beneficial to society in general.

For instance, the policy paper, “Can We Have a Pro-Worker AI? Choosing a path of machines in service of minds,” by Daron Acemoglu, David Autor, and Simon Johnson, explores the possibility that AI might augment and aid workers, rather than being deployed to replace them — a scenario that would provide better long-term economic growth distributed throughout society.

This range of analyses, from a variety of disciplinary perspectives, is something the ad hoc committee wanted to bring to bear on the issue of AI regulation from the start — broadening the lens that can be brought to policymaking, rather than narrowing it to a few technical questions.

“We do think academic institutions have an important role to play both in terms of expertise about technology, and the interplay of technology and society,” says Huttenlocher. “It reflects what’s going to be important to governing this well, policymakers who think about social systems and technology together. That’s what the nation’s going to need.”

Indeed, Goldston notes, the committee is attempting to bridge a gap between those excited and those concerned about AI, by working to advocate that adequate regulation accompanies advances in the technology.

As Goldston puts it, the committee releasing these papers “is not a group that is antitechnology or trying to stifle AI. But it is, nonetheless, a group that is saying AI needs governance and oversight. That’s part of doing this properly. These are people who know this technology, and they’re saying that AI needs oversight.”

Huttenlocher adds, “Working in service of the nation and the world is something MIT has taken seriously for many, many decades. This is a very important moment for that.”

In addition to Huttenlocher, Ozdaglar, and Goldston, the ad hoc committee members are: Daron Acemoglu, Institute Professor and the Elizabeth and James Killian Professor of Economics in the School of Arts, Humanities, and Social Sciences; Jacob Andreas, associate professor in EECS; David Autor, the Ford Professor of Economics; Adam Berinsky, the Mitsui Professor of Political Science; Cynthia Breazeal, dean for Digital Learning and professor of media arts and sciences; Dylan Hadfield-Menell, the Tennenbaum Career Development Assistant Professor of Artificial Intelligence and Decision-Making; Simon Johnson, the Kurtz Professor of Entrepreneurship in the MIT Sloan School of Management; Yoon Kim, the NBX Career Development Assistant Professor in EECS; Sendhil Mullainathan, the Roman Family University Professor of Computation and Behavioral Science at the University of Chicago Booth School of Business; Manish Raghavan, assistant professor of information technology at MIT Sloan; David Rand, the Erwin H. Schell Professor at MIT Sloan and a professor of brain and cognitive sciences; Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Computer Science; and Luis Videgaray, a senior lecturer at MIT Sloan.


Hello Games Celebrates 10 Years Of No Man’s Sky With New Trailer

Ten years ago, at Geoff Keighley’s awards show (then known as the VGX Awards), the development team at Hello Games revealed No Man’s Sky. The ambitious space-exploration title promised a universe of possibilities and has since become the poster child for bouncing back from a challenging launch period.

To celebrate the 10th anniversary of the first teaser trailer, Sean Murray and Hello Games returned to Geoff Keighley’s stage, now called The Game Awards, to give a brief retrospective and tease what’s next. After a rundown of the last 10 years of active development of No Man’s Sky, including several free updates, improvements, and expansions, the trailer teases big plans for 2024, with something that appears to involve more intricate space stations within the game.


No Man’s Sky was released in 2016 to mixed reviews but received myriad updates and improvements in the years since, delivering something beyond what was even promised in the lead-up to launch. Today, it is widely praised by critics and fans and is put forward as the foremost model for how to improve a game after a rocky launch.

The First Descendant Gets A Full Release Next Summer

The First Descendant is a free-to-play looter shooter from Nexon. There have been beta sessions before now, but the game is getting a full, proper launch next summer. The exact date is still up in the air, but according to a blog post from the developers, it will be announced as soon as they can pin it down.

In The First Descendant, you play as, you guessed it, Descendants: a group of unique characters that each have distinct abilities and movesets. Your goal is to defend the planet from invaders called Colossi using the game’s third-person shooting and RPG mechanics. The new trailer gives us a cinematic look at one of those fights, this time showcasing an entirely new Descendant.

There’s no gameplay in this trailer, but the team assures fans that they’re working to refine said gameplay, based on feedback from the nearly two million players who checked out their last open beta session. The main purpose of that beta was to test crossplay features, which makes sense, since the game is launching on Xbox, PlayStation, and PC. Check it out for free when it launches simultaneously on all of those platforms in summer 2024.

The Finals, Embark Studios’ Free-To-Play Shooter, Is Out Right Now

The Finals, the free-to-play, team-based shooter from Embark Studios, is out right now. The team surprise launched it during The Game Awards 2023, and you can download and hop into the first-person shooter action right now on PlayStation 5, Xbox Series X/S, and PC via Steam.

With tonight’s surprise launch, Season 1 of The Finals has begun, sending players into the glitz, glam, and neon lights of Las Vegas. This new arena is filled with slot machines, boxers, UFOs, and more, according to a press release.


“We started Embark to re-imagine how games are made and what they can become,” creative director Gustav Tilleby writes in a press release. “The Finals is our first game to release, and a great embodiment of that vision. The Finals isn’t another battle royale, military sim, or tactical FPS – there are plenty of those out there. It’s an entirely new take on shooters.”

Tilleby adds that it’s been amazing and humbling to see the community’s response to The Finals. The team says now that the game is live, it is laser-focused on ensuring a great experience for the community while delivering surprises along the way.

The Finals is out right now on PlayStation 5, Xbox Series X/S, and PC via Steam.


Tales of Kenzera: Zau Is A Magical Metroidvania Inspired By Bantu Myth

The Game Awards are packed with eager looks at highly anticipated games, but the ceremony is full of plenty of surprises too. One of them is a flashy Metroidvania called Tales of Kenzera: Zau, the debut game from Surgent Studios.

Zau is a boy on a quest to bring his father back from the dead. Guided by Kalunga, the God of Death, he uses his ability as a warrior shaman to defeat the ancestral spirits that roam the land of Kenzera. His main tools are a pair of magical masks; with the Moon mask he can manipulate time and crystallize enemies, and the Sun mask allows him to launch fiery spears. Studio founder Abubakar Salim says that the two masks are reminiscent of the Devil May Cry series in the way they allow the player to flip between ranged and melee modes.

The world is inspired by Bantu lore and imagery, with bright colors and mythical beasts. Additionally, the game will be scored by Nainita Desai, a British composer who made the music for Half Mermaid’s Telling Lies and Immortality.

Salim founded Surgent in 2019, but most gamers would currently recognize him as the voice of Bayek from Assassin’s Creed Origins. According to him, the passing of his father motivated him to create a new project – he wanted to tell a story of grief in the form of a video game. With assistance from the Ridley Scott Creative Group and Critical Role, five years later, Tales of Kenzera: Zau tells that story.

The trailer ended with the news that Tales of Kenzera: Zau will be coming to PlayStation, Xbox, Switch, and PC on April 23, 2024.

The Game Awards Reaction, GTA 6, Avatar Review | GI Show

We’re officially past The Game Awards, and host Marcus Stewart and former GI editor Jason Guisao are unpacking all of the show’s highs and lows. They’re also diving into Grand Theft Auto VI’s big debut trailer. Editor-in-Chief Matt Miller also makes a rare appearance to discuss his review of Avatar: Frontiers of Pandora.


The Game Informer Show is a weekly gaming podcast covering the latest video game news, industry topics, exclusive reveals, and reviews. Join host Alex Van Aken every Thursday to chat about your favorite games – past and present – with Game Informer staff, developers, and special guests from around the industry. Listen on Apple Podcasts, Spotify, or your favorite podcast app.

The Game Informer Show – Podcast Timestamps:

00:00:00 – Intro

00:02:36 – Avatar: Frontiers of Pandora Review

00:22:34 – Grand Theft Auto VI Trailer

00:45:32 – The Game Awards Round-Up

01:26:19 – Housekeeping and Listener Questions