
Guide to PTZOptics Auto-Tracking Features for PTZ Cameras – Videoguys

In the ever-evolving landscape of video production and live streaming, PTZOptics takes the lead with the MOVE 4K camera, introducing game-changing features for content creators. Learn how the built-in auto-tracking capabilities, coupled with Presenter Lock™ technology, revolutionize subject capture, even in dynamic environments.


Unlock the Power of Auto-Tracking: Explore the MOVE 4K’s advanced auto-tracking features, eliminating the need for additional software. Discover how its computer vision processing capabilities intelligently follow subjects, providing seamless pan, tilt, and zoom functions for optimal capture.
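Here is a rough illustration of what "following a subject" can mean in control terms. The sketch turns a detected bounding box into pan, tilt, and zoom speed commands using a simple proportional controller. It is a hypothetical example, not PTZOptics' algorithm or API. The `Box` type, the `track_step` function, the gain, the deadband, the sign conventions, and the target subject height are all assumptions. A real camera would send such speeds over its own control protocol.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical detector output: subject bounding box in pixels."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height


def track_step(box: Box, frame_w: float, frame_h: float,
               gain: float = 1.5, deadband: float = 0.05,
               target_height: float = 0.4) -> tuple[float, float, float]:
    """Return (pan, tilt, zoom) speed commands, each clamped to [-1, 1].

    Assumed conventions: positive pan = right, positive tilt = up,
    positive zoom = zoom in.
    """
    # Offset of the box centre from the frame centre, normalised to -0.5..0.5.
    cx = (box.x + box.w / 2) / frame_w - 0.5
    cy = (box.y + box.h / 2) / frame_h - 0.5  # positive = below centre
    # Positive when the subject looks smaller than the desired framing.
    size_err = target_height - box.h / frame_h

    def control(err: float) -> float:
        # Ignore small errors so the camera does not hunt back and forth.
        if abs(err) < deadband:
            return 0.0
        return max(-1.0, min(1.0, gain * err))

    pan = control(cx)
    tilt = control(-cy)  # subject above centre -> tilt up
    zoom = control(size_err)
    return pan, tilt, zoom
```

The deadband keeps the camera still during small subject movements. Raising `gain` makes the camera react faster at the cost of possible overshoot.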

Presenter Lock™ Technology for Precision: Dive into the Presenter Lock™ technology that sets the MOVE 4K apart. Maintain focus on specific subjects, even in crowded settings, with subject locking capabilities. Seamlessly switch focus between presenters, adding a professional touch to live productions.

User-Friendly Interface: Navigate the enhanced Web UI of the MOVE 4K, designed for both novices and professionals. Enjoy easy access to features and settings, complemented by in-depth tutorials for a comprehensive user experience. Empower yourself to experiment and optimize setups with confidence.

Auto-Tracking Control Options: Tailor auto-tracking movements with precision using various control methods. Initiate auto-tracking with an IR remote control, an IP-connected PTZ joystick controller, or the camera’s web interface. Choose the method that aligns with your preferences and production setup.

Clean Output with Customizable Visuals: Enhance viewer experience by turning off display information and disabling bounding boxes. Maintain a clean, distraction-free visual output during live streaming or recording. Explore how these customizable options adapt to diverse production styles.

In conclusion, the PTZOptics MOVE 4K camera stands at the forefront of video production technology, offering a seamless blend of innovation, accessibility, and customization. Explore the potential of this advanced camera to revolutionize your content creation journey and meet the high standards of today’s dynamic video landscape.

Read the full article from PTZOptics HERE

PlayStation, Xbox, And Nintendo Year In Reviews Are Now Live

Update, 12/13/2023:

Yesterday, PlayStation and Xbox released yearly video game retrospectives for individual players, highlighting how much time they spent on PlayStation 5 and Xbox Series X/S in 2023, which games they played most, and more. Now, a day later, Nintendo has released its 2023 Year In Review feature.

Sign in to your Nintendo account and enjoy the look back at your year of Switch gaming, and let us know what your most-played Switch game was in 2023 in the comments below.

The original story continues below…

Update, 12/12/2023:

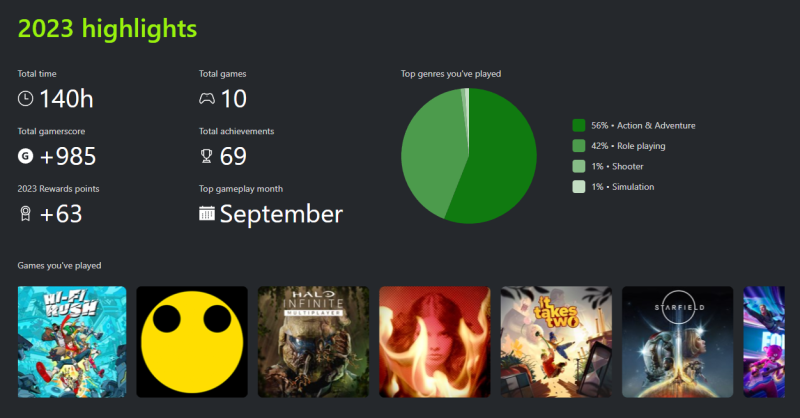

Following Sony’s lead, Microsoft has published its yearly retrospective as well, allowing Xbox and PlayStation fans alike to take a look back at the games they played this year. You can check out the Xbox Year In Review right here. For an idea of what to expect, here are some of my stats:

The original story continues below…

When the year comes to a close, many spend time reflecting on the past 12 months of their lives, the decisions they made, and the places they went. In recent years, companies like Spotify have capitalized on this tradition. Spotify turned it into its Wrapped celebration, which has users all over the world sharing their stats and blurs the line between an internet trend and a viral marketing campaign. It’s no surprise that other companies want to jump on that bandwagon, and one of the main participants is Sony, with its annual PlayStation Wrap-Up. You can check out this year’s version right here.

Those who participate will get a quick tour through their 2023 on PlayStation, including their total hours played, their top games, and even which controller they used most often. You can see some of my stats below – including how much time I spent revisiting Ezio’s games for an Assassin’s Creed retrospective earlier this year.

PlayStation has had a good 2023. Between Spider-Man 2’s critical success, the generally well-received launch of Final Fantasy XVI as a console exclusive, and new DLC modes coming to The Last of Us: Part II and God of War: Ragnarök, it’s finished the year strongly. This follows Sony’s recent release of the PlayStation Portal; you can read our thoughts on the device here.

What was your most played PlayStation or Xbox game of the year? Let us know in the comments!

More Than Half Of All Call Of Duty: Modern Warfare II Players Used The Game’s Graphical Accessibility Settings

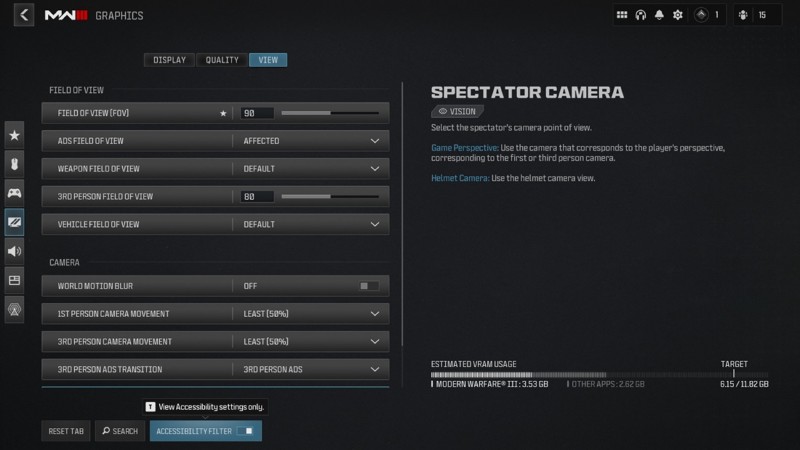

Developer Sledgehammer Games and publisher Activision Blizzard have released a detailed look at the new accessibility options that launched with Call of Duty: Modern Warfare III, the best-selling game of last month. In it, the companies reveal some interesting statistics showing that accessibility options and features are very popular with players and useful for everyone who plays Call of Duty games.

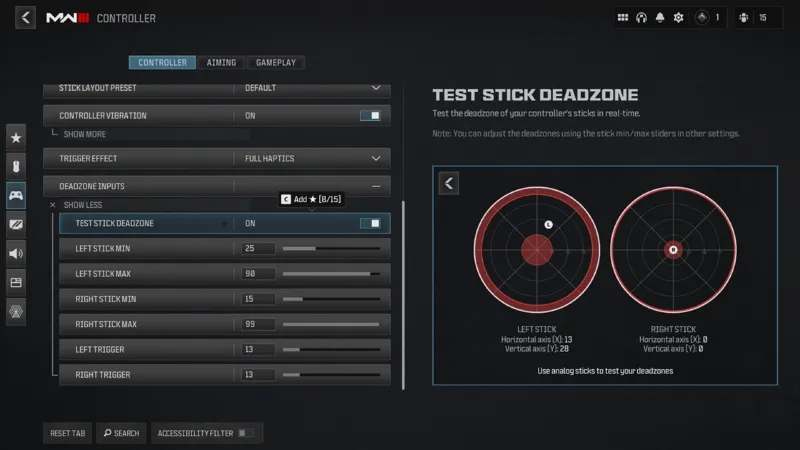

For example, more than 40 percent of players customized their analog stick deadzone input in last year’s Call of Duty: Modern Warfare II. More than half of all players in that game adjusted graphical accessibility settings, too, like motion blur, field of view, and camera movement.

For this year’s entry, Sledgehammer says, “Modern Warfare III provides even more ways to customize the visual experience for all players,” like the High Contrast mode available in the campaign that “enables players with vision impairment or color blindness to see their allies and enemies more clearly.” In this mode, allies are outlined with a blue-green color and enemies are outlined in red, and these visual markers change in real-time as you play.

Other new accessibility features in this year’s Call of Duty include a major update to the settings menu of the game. Sledgehammer says settings are now tagged to better identify specific features, such as tags related to settings for motor, vision, audio, and cognitive adjustments. Plus, these tags can be filtered.

“Major updates to controller settings in Modern Warfare III provide more detail for players looking to customize their inputs,” the blog reads. “These changes are also better reflected in the menu to give our community a visual preview of how these changes will impact on their controls.”

One example of this is that the deadzone inputs menu now highlights an approximation of how left or right thumb sticks will react to tweaking with a visual preview on the screen.
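A stick deadzone is the region around the stick's resting position in which small movements are ignored. The sketch below shows one common approach, a radial deadzone. It rescales the remaining range so that output starts at zero just past the threshold instead of jumping. This is a generic illustration, not Modern Warfare III's implementation. The function name and the `inner`/`outer` thresholds are hypothetical.

```python
import math


def apply_radial_deadzone(x: float, y: float,
                          inner: float = 0.1,
                          outer: float = 0.95) -> tuple[float, float]:
    """Map raw stick axes (each in -1..1) to filtered output.

    Movements with magnitude <= `inner` are ignored, and magnitudes
    >= `outer` count as full deflection (both values are hypothetical).
    """
    magnitude = math.hypot(x, y)
    if magnitude <= inner:
        return 0.0, 0.0
    # Rescale so output rises from 0 at the inner edge to 1 at the outer edge.
    scaled = min(1.0, (magnitude - inner) / (outer - inner))
    # Keep the original direction and replace only the magnitude.
    return x / magnitude * scaled, y / magnitude * scaled
```

Raising `inner` hides more stick drift but makes small aiming adjustments less responsive. Lowering `outer` lets players reach full speed without pushing the stick all the way.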

Another new addition in Modern Warfare III is an accessibility preset called “Low Motor Strain.” This preset reduces the physical effort required to play by requiring fewer button presses or holds and increasing sensitivity. “This preset joins existing presets which include simplified controls, audio/visual support, visual support, and motion reduction, making it faster for anyone who needs accessibility features to jump into the game,” according to the blog.

For more, read Game Informer’s Call of Duty: Modern Warfare III review and then read about how it’s already the second best-selling game of 2023. Check out Game Informer’s Call of Duty: Modern Warfare II review after that.

Have you been using any of these features in Call of Duty: Modern Warfare III? Let us know in the comments below!

Deep neural networks show promise as models of human hearing

Computational models that mimic the structure and function of the human auditory system could help researchers design better hearing aids, cochlear implants, and brain-machine interfaces. A new study from MIT has found that modern computational models derived from machine learning are moving closer to this goal.

In the largest study yet of deep neural networks that have been trained to perform auditory tasks, the MIT team showed that most of these models generate internal representations that share properties of representations seen in the human brain when people are listening to the same sounds.

The study also offers insight into how to best train this type of model: The researchers found that models trained on auditory input including background noise more closely mimic the activation patterns of the human auditory cortex.

“What sets this study apart is it is the most comprehensive comparison of these kinds of models to the auditory system so far. The study suggests that models that are derived from machine learning are a step in the right direction, and it gives us some clues as to what tends to make them better models of the brain,” says Josh McDermott, an associate professor of brain and cognitive sciences at MIT, a member of MIT’s McGovern Institute for Brain Research and Center for Brains, Minds, and Machines, and the senior author of the study.

MIT graduate student Greta Tuckute and Jenelle Feather PhD ’22 are the lead authors of the open-access paper, which appears today in PLOS Biology.

Models of hearing

Deep neural networks are computational models that consist of many layers of information-processing units that can be trained on huge volumes of data to perform specific tasks. This type of model has become widely used in many applications, and neuroscientists have begun to explore the possibility that these systems can also be used to describe how the human brain performs certain tasks.

“These models that are built with machine learning are able to mediate behaviors on a scale that really wasn’t possible with previous types of models, and that has led to interest in whether or not the representations in the models might capture things that are happening in the brain,” Tuckute says.

When a neural network is performing a task, its processing units generate activation patterns in response to each audio input it receives, such as a word or other type of sound. Those model representations of the input can be compared to the activation patterns seen in fMRI brain scans of people listening to the same input.
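One widely used way to make this kind of comparison is representational similarity analysis (RSA). It works in two steps. First, build a matrix of how differently each pair of sounds is represented, once for the model and once for the brain data. Then correlate the two matrices. The sketch below is a generic NumPy illustration of RSA, not the MIT team's analysis pipeline. The function names are hypothetical, and the arrays stand in for model activations and fMRI voxel responses, with rows as stimuli and columns as units or voxels.

```python
import numpy as np


def rdm(responses: np.ndarray) -> np.ndarray:
    """Representational dissimilarity matrix: 1 - Pearson r between every
    pair of stimulus response patterns. Shape (n_stimuli, n_units)."""
    return 1.0 - np.corrcoef(responses)


def ranks(values: np.ndarray) -> np.ndarray:
    """Rank-transform a 1-D array (ties broken by position, a simplification)."""
    order = np.argsort(values)
    out = np.empty(len(values))
    out[order] = np.arange(len(values))
    return out


def rsa_score(model_responses: np.ndarray, brain_responses: np.ndarray) -> float:
    """Spearman correlation between the upper triangles of the two RDMs.

    Both arrays must describe the same stimuli in the same row order;
    their column counts (model units vs. voxels) may differ.
    """
    model_rdm = rdm(model_responses)
    brain_rdm = rdm(brain_responses)
    # Skip the zero diagonal and the duplicated lower triangle.
    upper = np.triu_indices_from(model_rdm, k=1)
    return float(np.corrcoef(ranks(model_rdm[upper]), ranks(brain_rdm[upper]))[0, 1])
```

Comparing a representation with itself gives a score of 1.0, up to rounding. Comparing two unrelated sets of random responses typically gives a score near zero. Because the RDMs compare stimuli rather than individual units, a model layer with hundreds of units can be compared directly with a brain region containing a different number of voxels.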

In 2018, McDermott and then-graduate student Alexander Kell reported that when they trained a neural network to perform auditory tasks (such as recognizing words from an audio signal), the internal representations generated by the model showed similarity to those seen in fMRI scans of people listening to the same sounds.

Since then, these types of models have become widely used, so McDermott’s research group set out to evaluate a larger set of models, to see if the ability to approximate the neural representations seen in the human brain is a general trait of these models.

For this study, the researchers analyzed nine publicly available deep neural network models that had been trained to perform auditory tasks, and they also created 14 models of their own, based on two different architectures. Most of these models were trained to perform a single task, such as recognizing words, identifying the speaker, recognizing environmental sounds, or identifying musical genre, while two of them were trained to perform multiple tasks.

When the researchers presented these models with natural sounds that had been used as stimuli in human fMRI experiments, they found that the internal model representations tended to exhibit similarity with those generated by the human brain. The models whose representations were most similar to those seen in the brain were models that had been trained on more than one task and had been trained on auditory input that included background noise.

“If you train models in noise, they give better brain predictions than if you don’t, which is intuitively reasonable because a lot of real-world hearing involves hearing in noise, and that’s plausibly something the auditory system is adapted to,” Feather says.

Hierarchical processing

The new study also supports the idea that the human auditory cortex has some degree of hierarchical organization, in which processing is divided into stages that support distinct computational functions. As in the 2018 study, the researchers found that representations generated in earlier stages of the model most closely resemble those seen in the primary auditory cortex, while representations generated in later model stages more closely resemble those generated in brain regions beyond the primary cortex.

Additionally, the researchers found that models that had been trained on different tasks were better at replicating different aspects of audition. For example, models trained on a speech-related task more closely resembled speech-selective areas.

“Even though the model has seen the exact same training data and the architecture is the same, when you optimize for one particular task, you can see that it selectively explains specific tuning properties in the brain,” Tuckute says.

McDermott’s lab now plans to use its findings to try to develop models that are even more successful at reproducing human brain responses. In addition to helping scientists learn more about how the brain may be organized, such models could also be used to help develop better hearing aids, cochlear implants, and brain-machine interfaces.

“A goal of our field is to end up with a computer model that can predict brain responses and behavior. We think that if we are successful in reaching that goal, it will open a lot of doors,” McDermott says.

The research was funded by the National Institutes of Health, an Amazon Fellowship from the Science Hub, an International Doctoral Fellowship from the American Association of University Women, an MIT Friends of McGovern Institute Fellowship, and a Department of Energy Computational Science Graduate Fellowship.

Approaching 1 Million: Legendary Bayraktar TB2 Set New Record – Technology Org

Today, Baykar is developing new weapons for their TB2 to achieve maximum efficiency when destroying targets on the…

Microsoft unveils 2.7B parameter language model Phi-2

Microsoft’s 2.7 billion-parameter model Phi-2 showcases outstanding reasoning and language understanding capabilities, setting a new standard for performance among base language models with fewer than 13 billion parameters. Phi-2 builds upon the success of its predecessors, Phi-1 and Phi-1.5, by matching or surpassing models up to…

Bethesda Announces New-Gen Update For Fallout 4 Coming Next Year

Update, 12/13/23:

Bethesda announced last year that Fallout 4 would be receiving a free new-gen update for PlayStation 5 and Xbox Series X/S in 2023. However, as the year comes to a close, Bethesda has delayed the update to next year.

“Thank you for your patience with us as we work on the Fallout 4 next-gen update,” a tweet from the official Fallout account reads. “We know you’re excited, and so are we! But we need a bit more time and look forward to an exciting return to the Commonwealth in 2024.”


The original story continues below…

Original story, 10/25/22:

Bethesda has revealed that Fallout 4 will receive a free new-gen update next year.

Announced as part of the company’s larger celebration for the Fallout series’ 25th anniversary, Fallout 4’s new-gen update will come to PlayStation 5, Xbox Series X/S, and Windows PC systems. It will include performance mode features for high frame rates, quality features for 4K resolution gameplay, bug fixes, and additional bonus Creation Club content. As for when to expect this update, Bethesda did not reveal a specific date, just that it would be coming sometime in 2023.

Elsewhere in the 25th Anniversary blog post, Bethesda announced the return of Spooky Scorched, the limited-time Halloween event for Fallout 76. It will run through November 8. There’s also a new 25th Anniversary challenge coming to the game.

“Each day from October 25 to November 8, players can visit the Atomic Shop for a free daily item,” the blog post reads. “These free goodies range from handy consumables for your next sojourn through Appalachia to brand-new items, so be sure to check into the Store each day to see what’s available.”

Here’s a look at the first reward, only available in the Atomic Shop tomorrow, October 25:

You can also complete special challenges during the Fallout 25th Anniversary event in Fallout 76 to unlock even more rewards. Active Prime Gaming subscribers can also claim a special 25th Anniversary Bundle for Fallout 76 starting November 2. You’ll have until February 2, 2023, to claim it. In addition to Lunchboxes and Bubblegum, this bundle contains the Vault Boy portrait, Shooting Target Suite, and Lincoln’s Repeater Lever Action Skin.

For more about this 25th Anniversary celebration, be sure to check out Bethesda’s full blog post. If you’ve never played Fallout 4 and are curious to do so thanks to this upcoming new-gen update, be sure to read our thoughts on it in Game Informer’s Fallout 4 review.

Are you going to jump back into Fallout 4 when this new-gen update is out? Let us know in the comments below!

Advanced Image Robotics Showcases the AIR One Remote Video Production – Videoguys

At the 2023 NAB Show in New York City, ProductHUBTV interviewed Nick Nordquist of Advanced Image Robotics, showcasing their innovative AIR One™ platform at the Broadfield Booth. Founded in 2020, Advanced Image Robotics aims to empower creators and producers by allowing them to focus on content creation without the need for IT expertise.

AIR One™ stands out as the first professional video production platform tailored for OTT livestreaming. Born out of years of experience in shooting multicamera live events, it introduces a remote system that streamlines equipment, elevates video quality, and significantly cuts production costs.

The compact and lightweight AIR One™ cloud-native cinematic camera unit is designed for convenience. Whether fitting under an airplane seat, mounting high, or blending seamlessly into a location, it provides unprecedented control. Setting up and initiating shooting takes only minutes, making it an ideal choice for efficient workflows.

With the unique iOS® app, AIR One™ offers intuitive control at your fingertips, eliminating the need for joysticks and bulky consoles. Pan and zoom with precise, smooth movement, bringing ultimate control to your craft.

The AIRcloud™ platform further enhances the workflow by providing seamless integration from camera to viewer. With direct internet connectivity, the need for an OB truck is minimized. Operating at ultra-low latency, the team can manage multiple cameras onsite or remotely. Directors can make decisions from anywhere, fostering a united workflow. AIRcloud™ integrates with familiar tools such as vMix®, Premiere Pro®, and more, enabling tasks like switching, distribution, editing, communications, adding graphics, and outputting results to any CDN.

Discover how AIR One™ from Advanced Image Robotics is reshaping the landscape of live event production, offering unprecedented control, efficiency, and cost-effectiveness for content creators and producers.
